The IPTC is happy to announce that EIDR and IPTC have signed a liaison agreement, committing to work together on projects of mutual interest including media metadata, content distribution technologies and work on provenance and authenticity for media.

The Entertainment Identifier Registry Association (EIDR) was established to provide a universal identifier registry that supports the full range of asset types and relationships between assets. Members of EIDR include Apple, Amazon MGM Studios, Fox, the Library of Congress, Netflix, Paramount, Sony Pictures, Walt Disney Studios and many more.

As EIDR said in their release:

EIDR’s primary focus is managing globally unique, curated, and resolvable content identification (which applies equally to news and entertainment media), via the Emmy Award-winning EIDR Content ID, and content delivery services, via the EIDR Video Service ID. In support of this, EIDR is built upon and helps promulgate the MovieLabs Digital Distribution Framework (MDDF), a suite of standards and specifications that address core aspects of digital distribution, including identification, metadata, avails, asset delivery, and reporting.

IPTC’s Video Metadata Hub standard already provides a mapping to EIDR’s Data Fields and the MDDF fields from related organisation MovieLabs. The organisations will work together to keep these mappings up-to-date and to work on future initiatives including making C2PA metadata work for both the news and the entertainment sides of the media industry. IPTC members have already started working in this area via IPTC’s Media Provenance Committee.

“In the Venn diagram of media, there is significant overlap between news and entertainment interests in descriptive metadata standards, globally-unique content identification, and media provenance and authenticity,” said Richard W. Kroon, EIDR’s director of technical operations. “By working together, we each benefit from the other’s efforts and can bring forth useful standards and practices that span the entire commercial media industry.

“Our hope here is to find common ground that can align our respective metadata standards to support seamless metadata management across the commercial media landscape.”

Last week, the IPTC Spring Meeting 2024 brought media industry experts together for three days in New York City to discuss many topics including AI, archives and authenticity.

The meeting was hosted by both The New York Times and Associated Press. Over 50 attendees from 14 countries participated in person, with another 30+ delegates attending online.

As usual, the IPTC Working Group leads presented a summary of their most recent work, including a new release of NewsML-G2 (version 2.34, which will be released very soon); forthcoming work on ninjs to support events, planned news coverage and live streamed video; updates to NewsCodes vocabularies; more evangelism of IPTC Sport Schema; and further work on Video Metadata Hub, the IPTC Photo Metadata Standard and our emerging framework for a simple way to express common rights statements using RightsML.

We were very happy to hear from many IPTC member organisations at the Spring Meeting:

- Anna Dickson of recently-joined member Google talked about their work with IPTC in the past and discussed areas where we could collaborate in the future

- Aimee Rinehart of Associated Press presented AP’s recent report on the use of generative AI in local news

- Scott Yates of JournalList gave an update on the trust.txt protocol

- Andreas Mauczka, Chief Digital Officer at Austria Press Agency (APA), presented on APA’s framework for the use of generative AI in their newsroom

- Drew Wanczowski of Progress Software gave a demonstration of how IPTC standards can be implemented in Progress’s tools such as Semaphore and MarkLogic

- Vincent Nibart and Geert Meulenbelt of new IPTC Startup Member Kairntech presented on their recent work with AFP on news categorisation using IPTC Media Topics and other vocabularies

- Mathieu Desoubeaux of IPTC Startup Member IMATAG presented their work, also with AFP, on watermarking images for tracking and metadata retrieval purposes

In addition, we welcomed several guest speakers:

- Jim Duran of the Vanderbilt TV News Archive spoke about how they are using AI to catalog and tag their extensive archive of decades of broadcast news content

- John Levitt of Elvex spoke about their system which allows media organisations to present a common interface (web interface and developer API) to multiple generative AI models, including tracking, logging, cost monitoring, permissions and other governance features which are important to large organisations using AI models.

- Toshit Panigrahi, co-founder of TollBit, spoke about their platform for “AI content licensing at scale”, allowing content owners to establish rules and monitoring around how their content should be licensed for both the training of AI models and for retrieval-augmented generation (RAG)-style on-demand content access by AI agents.

- We also heard an update about the TEMS – Trusted European Media Data Space project.

We were also lucky enough to take tours of the Associated Press Corporate Archive on Tuesday and the New York Times archive on Wednesday. Valerie Komor of AP Corporate Archives and Jeff Roth of The New York Times Archival Library (known to staffers as “the morgue”) shared fascinating insights and stories about how the two archives preserve the legacy of these historically important news organisations.

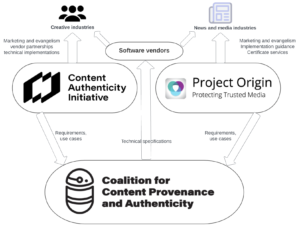

Brendan Quinn, speaking for Judy Parnall of the BBC, also presented an update of the recent work of C2PA and Project Origin and introduced the new IPTC Media Provenance Committee, dedicated to bringing C2PA technology to the news and media industry.

On behalf of all attendees, we would like to thank The New York Times and Associated Press for hosting us, and especially to thank Jennifer Parrucci of The New York Times and Heather Edwards of The Associated Press for their hard work in coordinating use of their venues for our meeting.

The next IPTC Member Meeting will be the 2024 Autumn Meeting, which will be held online from Monday September 30th to Wednesday October 2nd, and will include the 2024 IPTC Annual General Meeting. The Spring Meeting 2025 will be held in Western Europe at a location still to be determined.

Yesterday Nick Clegg, Meta’s President of Global Affairs, announced that Meta would be using IPTC embedded photo metadata to label AI-Generated Images on Facebook, Instagram and Threads.

Meta already uses the IPTC Photo Metadata Standard’s Digital Source Type property to label images generated by its platform. The image to the right was generated using Imagine with Meta AI, Meta’s image generation tool. Viewing the image’s metadata with the IPTC’s Photo Metadata Viewer tool shows that the Digital Source Type field is set to “trainedAlgorithmicMedia” as recommended in IPTC’s Guidance on metadata for AI-generated images.

Clegg said that “we do several things to make sure people know AI is involved, including putting visible markers that you can see on the images, and both invisible watermarks and metadata embedded within image files. Using both invisible watermarking and metadata in this way improves both the robustness of these invisible markers and helps other platforms identify them.”

This approach of both direct and indirect disclosure is in line with the Partnership on AI’s Best Practices on signalling the use of generative AI.

Also, Meta are building recognition of this metadata into their tools: “We’re building industry-leading tools that can identify invisible markers at scale – specifically, the “AI generated” information in the C2PA and IPTC technical standards – so we can label images from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock as they implement their plans for adding metadata to images created by their tools.”

We have previously shared the news that Google, Microsoft, Adobe, Midjourney and Shutterstock will use IPTC metadata in their generated images, either directly in the IPTC Photo Metadata block or using the IPTC Digital Source Type vocabulary as part of a C2PA assertion. OpenAI has just announced that they have started using IPTC via C2PA metadata to signal the fact that images from DALL-E are generated by AI.

A call for platforms to stop stripping image metadata

We at the IPTC agree that this is a great step towards end-to-end support of indirect disclosure of AI-generated content.

As the Meta and OpenAI posts point out, it is possible to strip out both IPTC and C2PA metadata either intentionally or accidentally, so this is not a solution to all problems of content credibility.

Currently, one of the main ways metadata is stripped from images is when they are uploaded to Facebook or other social media platforms. So with this step, we hope that Meta’s platforms will stop stripping metadata from images when they are shared – not just the fields about generative AI, but also the fields regarding accessibility (alt text), copyright, creator’s rights and other information embedded in images by their creators.

Video next?

Meta’s post indicates that this type of metadata isn’t commonly used for video or audio files. We agree, but to be ahead of the curve, we have added Digital Source Type support to IPTC Video Metadata Hub so videos can be labelled in the same way.

We will be very happy to work with Meta and other platforms on making sure IPTC’s standards are implemented correctly in images, videos and other areas.

As we wrap up 2023, we thought it would be useful to give you an update on the IPTC’s work in 2023, including updates to most of our standards.

Two successful member meetings, one in person!

This year we finally held our first IPTC Member meeting in person since 2019, in Tallinn, Estonia. Around 30 people attended in person and 50 attended online, from over 40 organisations. Presentations and discussions ranged from the e-Estonia digital citizen experience to building re-usable news content widgets with Web Components, and of course included generative AI, credibility and fact checking, and more. Here’s our report on the IPTC 2023 Spring Meeting.

For our Autumn Meeting we went back to an online format, with over 50 attendees, and more watching the recordings afterwards (which are available to all members). Along with discussions of generative AI and content licensing at this year’s meetings, it was great to hear the real-world implementation experience of the ASBU Cloud project from the Arab States Broadcasting Union. The system was created by IPTC members Broadcast Solutions, based on NewsML-G2. The DPP Live Production Exchange, led by new members Arqiva, will be another real-world implementation coming soon. We heard about the project’s first steps at the Autumn Meeting.

Also at this year’s Autumn Meeting, we heard from Will Kreth of the HAND Identity platform and saw a demo of IPTC Sport Schema from IPTC member Progress Software (previously MarkLogic). More on IPTC Sport Schema below! All news from the Autumn Meeting is summed up in our post AI, Video in the cloud, new standards and more: IPTC Autumn Meeting 2023.

We’re very happy to say that the IPTC Spring Meeting 2024 will be held in New York from April 15 – 17. All IPTC member delegates are welcome to attend the meeting at no cost. If you are not a member but would like to present your work at the meeting, please get in touch using our Contact Us form.

IPTC Photo Metadata Conference, 7 May 2024: save the date!

Due to several issues, we were not able to run a Photo Metadata Conference in 2023, but we will be back with an online Photo Metadata Conference on 7th May 2024. Please mark the date in your calendar!

As usual, the event will be free and open for anyone to attend.

If you would like to present to the people most interested in photo metadata from around the world, please let us know!

Presentations at other conferences and work with other organisations

IPTC was represented at the CEPIC Congress in France, the EBU DataTech Seminar in Geneva, Sports Video Group Content Management Forum in New York and the DMLA’s International Digital Media Licensing Conference in San Francisco.

We also worked with CIPA, the organisation behind the Exif photo metadata standard, on aligning Exif with IPTC Photo Metadata, and supported them in their work towards Exif 3.0 which was announced in June.

The IPTC will be advising the TEMS project which is an EU-funded initiative to build a “media data space” for Europe, and possibly beyond: IPTC working with alliance to build a European Media Data Space.

IPTC’s work on Generative AI and media

Of course, the big topic for media in 2023 has been Generative AI. We have been looking at this topic for several years, since it was known as “synthetic media”, and back in 2022 we created a taxonomy of “digital source types” that can be used to describe various forms of machine-generated and machine-assisted content creation. This was a joint effort across our NewsCodes, Video Metadata and Photo Metadata Working Groups.

It turns out that this was very useful, and the IPTC Digital Source Type taxonomy has been adopted by Google, Midjourney, C2PA and others as a way to describe content. Here are some of our news posts from 2023 on this topic:

- IPTC publishes metadata guidance for AI-generated “synthetic media”

- Google announces use of IPTC metadata for generative AI images

- Midjourney and Shutterstock AI sign up to use of IPTC Digital Source Type to signal generated AI content

- Microsoft announces signalling of generative AI content using IPTC and C2PA metadata

- Royal Society/BBC workshop on Generative AI and content provenance

- New “digital source type” term added to support inpainting and outpainting in Generative AI

- IPTC releases technical guidance for creating and editing metadata, including DigitalSourceType

IPTC’s work on Trust and Credibility

After a lot of drafting work over several years, we released the Guidelines for Expressing Trust and Credibility signals in IPTC standards, which show how to embed trust information in the form of “trust indicators”, such as those from The Trust Project, into content marked up using IPTC standards such as NewsML-G2 and ninjs. The guidelines also discuss how media can be signed using the C2PA specification.

We continue to work with C2PA on the underlying specification allowing signed metadata to be added to media content so that it becomes “tamper-evident”. However, the C2PA specification in its current form does not prescribe where the certificates used for signing should come from. To that end, we have been working with Microsoft, BBC, CBC / Radio Canada and The New York Times on the Steering Committee of Project Origin to create a trust ecosystem for the media industry. Stay tuned for more developments from Project Origin during 2024.

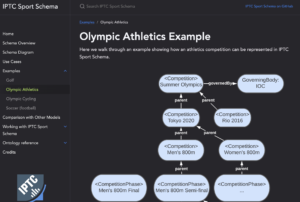

IPTC’s newest standard: IPTC Sport Schema

After years of work, the IPTC Sports Content Working Group released version 1.0 of IPTC Sport Schema. IPTC Sport Schema takes the experience of IPTC’s 10+ years of maintaining the XML-based SportsML standard and applies it to the world of the semantic web, knowledge graphs and linked data.

Paul Kelly, Lead of the IPTC Sports Content Working Group, presented IPTC Sport Schema to the world’s top sports media technologists: IPTC Sport Schema launched at Sports Video Group Content Management Forum.

Take a look at our dedicated site https://sportschema.org/ to see how it works, look at some demonstration data and try out a query engine to explore the data.

If you’re interested in using IPTC Sport Schema as the basis for sports data at your organisation, please let us know. We would be very happy to help you to get started.

Standard and Working Group updates

- Our IPTC NewsCodes vocabularies had two big updates, the NewsCodes 2023-Q1 update and the NewsCodes Q3 2023 update. For our main subject taxonomy Media Topics, over the year we added 12 new concepts, retired 73 under-used terms, and modified 158 terms to make their labels and/or descriptions easier to understand. We also added or updated vocabularies such as Digital Source Type and Authority Status.

- The News in JSON Working Group released ninjs 2.1 and ninjs 1.5 in parallel, so that people who cannot move from the 1.x schema can still get the benefits of new additions. The group is currently working on adding events and planning items to ninjs based on requirements from the DPP Live Production Exchange project: expect to see something released in 2024.

- NewsML-G2 2.32 and NewsML-G2 v2.33 were released this year, including support for Generative AI via the Digital Source Type vocabulary.

- The IPTC Photo Metadata Standard 2023.1 allows rightsholders to express whether or not they are willing to allow their content to be indexed by search engines and data mining crawlers, and whether the content can be used as training data for Generative AI. This work was done in partnership with the PLUS Coalition. We also updated the IPTC Photo Metadata Mapping Guidelines to accommodate Exif 3.0.

- Through discussions and workshops at our Member Meetings in 2022 and 2023, we have been working on making RightsML easier to use and easier to understand. Stay tuned for more news on RightsML in 2024.

- Video Metadata Hub 1.5 adds the same properties to allow content to be excluded from generative AI training data sets. We have also updated the Video Metadata Hub Generator tool to generate C2PA-compliant metadata “assertions”.

New faces at IPTC

Ian Young of Alamy / PA Media Group stepped up to become the lead of the News in JSON Working Group, taking over from Johan Lindgren of TT who is winding down his duties but still contributes to the group.

We welcomed Bonnier News, Newsbridge, Arqiva, the Australian Broadcasting Corporation and Neuwo.ai as new IPTC members, plus a very well known name who will be joining at the start of 2024. We’re very happy to have you all as members!

We are always happy to work with more organisations in the media and related industries. If you would like to talk to us about joining IPTC, please complete our membership enquiry form.

Here’s to a great 2024!

Thanks to everyone who gave IPTC your support, and we look forward to working with you in the coming year.

If you have any questions or comments (and especially if you would like to speak at one of our events in 2024!), you can contact us via our contact form.

Best wishes,

Brendan Quinn

Managing Director, IPTC

and the IPTC Board of Directors: Dave Compton (LSE Group), Heather Edwards (The Associated Press), Paul Harman (Bloomberg LP), Gerald Innerwinkler (APA), Philippe Mougin (Agence France-Presse), Jennifer Parrucci (The New York Times), Robert Schmidt-Nia (DATAGROUP, Chair of the Board), Guowei Wu (Xinhua)

Made with Bing Image Creator. Powered by DALL-E.

Following the IPTC’s recent announcement that Rights holders can exclude images from generative AI with IPTC Photo Metadata Standard 2023.1, the IPTC Video Metadata Working Group is very happy to announce that the same capability now exists for video, through IPTC Video Metadata Hub version 1.5.

The “Data Mining” property has been added to this new version of IPTC Video Metadata Hub, which was approved by the IPTC Standards Committee on October 4th, 2023. Because it uses the same XMP identifier as the Photo Metadata Standard property, the existing support in the latest versions of ExifTool will also work for video files.

Therefore, adding metadata to a video file that says it should be excluded from Generative AI indexing is as simple as running this command in a terminal window:

exiftool -XMP-plus:DataMining="Prohibited for Generative AI/ML training" example-video.mp4

(Please note that this will only work in ExifTool version 12.67 and above, i.e. any version of ExifTool released after September 19, 2023)
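For teams that want to script this step, the command above could be wrapped in Python along the following lines. This is a minimal sketch rather than an official IPTC or ExifTool tool: the function names `build_data_mining_command` and `set_data_mining` are illustrative choices of ours, and the sketch assumes that `exiftool` version 12.67 or later is installed and available on the PATH.

```python
import shutil
import subprocess

# Tag name taken from the ExifTool command shown above.
DATA_MINING_TAG = "XMP-plus:DataMining"
DEFAULT_VALUE = "Prohibited for Generative AI/ML training"


def build_data_mining_command(video_path, value=DEFAULT_VALUE):
    """Return the ExifTool argument list (hypothetical helper, not part of ExifTool).

    Passing the arguments as a list means no shell quoting is needed,
    even though the value contains spaces and a slash.
    """
    return ["exiftool", f"-{DATA_MINING_TAG}={value}", video_path]


def set_data_mining(video_path, value=DEFAULT_VALUE):
    """Run ExifTool to write the Data Mining value into the given file."""
    if shutil.which("exiftool") is None:
        raise RuntimeError("exiftool was not found on the PATH")
    subprocess.run(build_data_mining_command(video_path, value), check=True)


# Building the command does not touch any file; only set_data_mining() does.
command = build_data_mining_command("example-video.mp4")
```

Calling `set_data_mining("example-video.mp4")` would then run the same command as the terminal example.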

The possible values of the Data Mining property are listed below:

| PLUS URI | Description (use exactly this text with ExifTool) |
| --- | --- |
| | Unspecified – no prohibition defined |
| | Allowed |
| | Prohibited for AI/ML training |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-GENAIMLTRAINING | Prohibited for Generative AI/ML training |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-EXCEPTSEARCHENGINEINDEXING | Prohibited except for search engine indexing |
| | Prohibited |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEECONSTRAINT | Prohibited, see plus:OtherConstraints |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEEEMBEDDEDRIGHTSEXPR | Prohibited, see iptcExt:EmbdEncRightsExpr |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEELINKEDRIGHTSEXPR | Prohibited, see iptcExt:LinkedEncRightsExpr |

A corresponding new property “Other Constraints” has also been added to Video Metadata Hub v1.5. This property allows plain-text human-readable constraints to be placed on the video when using the “Prohibited, see plus:OtherConstraints” value of the Data Mining property.

The Video Metadata Hub User Guide and Video Metadata Hub Generator have also been updated to include the new Data Mining property added in version 1.5.

We look forward to seeing video tools (and particularly crawling engines for generative AI training systems) implement the new properties.
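As an illustration of how a crawler might act on the Data Mining property, here is a minimal sketch that sorts the description texts listed above into three outcomes. The grouping is our own assumed reading of the values, not a rule defined by the standard, and the names `TrainingDecision` and `classify_for_genai_training` are hypothetical. Reading the value out of the video file is left to whatever metadata tool the crawler already uses.

```python
from enum import Enum
from typing import Optional


class TrainingDecision(Enum):
    NO_PROHIBITION_EXPRESSED = "no prohibition expressed"
    PROHIBITED = "prohibited"
    NEEDS_REVIEW = "needs review"


# Assumption: each of these values rules out generative AI training,
# because generative AI/ML training is a subset of what they prohibit.
PROHIBITING_VALUES = {
    "Prohibited",
    "Prohibited for AI/ML training",
    "Prohibited for Generative AI/ML training",
    "Prohibited except for search engine indexing",
}


def classify_for_genai_training(data_mining: Optional[str]) -> TrainingDecision:
    """Classify a Data Mining description text for a generative AI crawler."""
    if data_mining is None:
        # The property is absent, so the file expresses no prohibition.
        return TrainingDecision.NO_PROHIBITION_EXPRESSED
    value = data_mining.strip()
    if value == "Allowed" or value.startswith("Unspecified"):
        return TrainingDecision.NO_PROHIBITION_EXPRESSED
    if value in PROHIBITING_VALUES:
        return TrainingDecision.PROHIBITED
    # "Prohibited, see ..." values point to another property that holds the
    # actual constraint; unknown values are also left for a human or a
    # rights-expression parser to decide.
    return TrainingDecision.NEEDS_REVIEW


decision = classify_for_genai_training("Prohibited for Generative AI/ML training")
```

Note that "no prohibition expressed" is deliberately not named "allowed": the absence of a prohibition in metadata is not the same thing as a licence.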

Please feel free to discuss the new version of Video Metadata Hub on the public iptc-videometadata discussion group, or contact IPTC via the Contact us form.

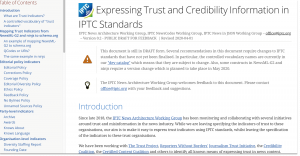

The IPTC is proud to announce that after intense work by most of its Working Groups, we have published version 1.0 of our guidelines document: Expressing Trust and Credibility Information in IPTC Standards.

The culmination of a large amount of work over the past several years across many of IPTC’s Working Groups, the document is a guide for news providers on how to embed signals of trust, known as “Trust Indicators”, in their content.

Trust Indicators are ways that news organisations can signal to their readers and viewers that they should be considered as trustworthy publishers of news content. For example, one Trust Indicator is a news outlet’s corrections policy: whether the news outlet provides (and follows) a clear guideline regarding when and how it updates its news content.

The IPTC guideline does not define these trust indicators: they were taken from existing work by other groups, mainly the Journalism Trust Initiative (an initiative from Reporters Sans Frontières / Reporters Without Borders) and The Trust Project (a non-profit founded by Sally Lehrman of UC Santa Cruz).

The first part of the guideline document shows how trust indicators created by these standards can be embedded into IPTC-formatted news content, using IPTC’s NewsML-G2 and ninjs standards which are both widely used for storing and distributing news content.

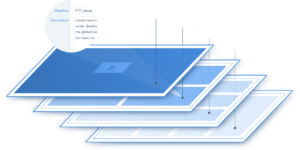

The second part of the IPTC guidelines document describes how cryptographically verifiable metadata can be added to media content. This metadata may express trust indicators but also more traditional metadata such as copyright, licensing, description and accessibility information. This can be achieved using the C2PA specification, which implements the requirements of the news industry via Project Origin and of the wider creative industry via the Content Authenticity Initiative. The IPTC guidelines show how both IPTC Photo Metadata and IPTC Video Metadata Hub metadata can be included in a cryptographically signed “assertion”.

We expect these guidelines to evolve as trust and credibility standards and specifications change, particularly in light of recent developments in signalling content created by generative AI engines. We welcome feedback and will be happy to make changes and clarifications based on recommendations.

The IPTC sends its thanks to all IPTC Working Groups that were involved in creating the guidelines, and to all organisations who created the trust indicators and the frameworks upon which this work is based.

Feedback can be shared using the IPTC Contact Us form.

The IPTC NewsCodes Working Group has approved an addition to the Digital Source Type NewsCodes vocabulary.

The new term, “Composite with Trained Algorithmic Media”, is intended to handle situations where the “synthetic composite” term is not specific enough, for example a composite that is specifically made using an AI engine’s “inpainting” or “outpainting” operations.

The full Digital Source Type vocabulary can be accessed from https://cv.iptc.org/newscodes/digitalsourcetype. It can be downloaded in NewsML-G2 (XML) or SKOS (RDF/XML, Turtle or JSON-LD) formats to be integrated into content management and digital asset management systems.

The new term can be used immediately with any tool or standard that supports IPTC’s Digital Source Type vocabulary, including the C2PA specification, the IPTC Photo Metadata Standard and IPTC Video Metadata Hub.

Information on the new term will soon be added to IPTC’s Guidance on using Digital Source Type in the IPTC Photo Metadata User Guide.

The IPTC has updated its Photo Metadata User Guide to include some best practice guidelines for how to use embedded metadata to signal “synthetic media” content that was created by generative AI systems.

After our work in 2022 and the draft vocabulary to support synthetic media, the IPTC NewsCodes Working Group, Video Metadata Working Group and Photo Metadata Working Group worked together with several experts and organisations to come up with a definitive list of “digital source types” that includes various types of machine-generated content, or hybrid human and machine-generated media.

Since publishing the vocabulary, the work has been picked up by the Coalition for Content Provenance and Authenticity (C2PA) via the use of digitalSourceType in Actions and in the IPTC Photo and Video Metadata assertion. But the primary use case is for adding metadata to image and video files.

Here is a direct link to the new section on Guidance for using Digital Source Type, including examples for how the various terms can be used to describe media created in different formats – audio, video, images and even text.

IPTC recommends that software creating images using trained AI algorithms adds the “Digital Source Type” value of “trainedAlgorithmicMedia” to the XMP data packet in generated image and video files. Alternatively, it may be included in a C2PA manifest as described in the IPTC assertion documentation in the C2PA specification.
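To make the XMP recommendation more concrete, the sketch below builds the RDF fragment that would carry the Digital Source Type value. It is an illustration only. The `Iptc4xmpExt` namespace URI and the `DigitalSourceType` property name are taken from our understanding of the IPTC Photo Metadata Standard rather than from this post, so please check them against the specification. The function name `build_digital_source_type_rdf` is hypothetical, and a real file also needs the surrounding XMP packet wrapper, which a tool such as ExifTool normally writes.

```python
import xml.etree.ElementTree as ET

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
# Assumed namespace of the IPTC Extension schema; verify against the standard.
IPTC_EXT_NS = "http://iptc.org/std/Iptc4xmpExt/2008-02-29/"

TRAINED_ALGORITHMIC_MEDIA = (
    "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
)


def build_digital_source_type_rdf(source_type_uri: str) -> str:
    """Return an rdf:RDF fragment holding one DigitalSourceType value."""
    # Register prefixes so the output uses the conventional names.
    ET.register_namespace("rdf", RDF_NS)
    ET.register_namespace("Iptc4xmpExt", IPTC_EXT_NS)

    rdf = ET.Element(f"{{{RDF_NS}}}RDF")
    description = ET.SubElement(
        rdf, f"{{{RDF_NS}}}Description", {f"{{{RDF_NS}}}about": ""}
    )
    prop = ET.SubElement(description, f"{{{IPTC_EXT_NS}}}DigitalSourceType")
    prop.text = source_type_uri
    return ET.tostring(rdf, encoding="unicode")


fragment = build_digital_source_type_rdf(TRAINED_ALGORITHMIC_MEDIA)
```

Printing `fragment` shows a single `Iptc4xmpExt:DigitalSourceType` element inside an `rdf:Description`.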

The official URL for the full vocabulary is http://cv.iptc.org/newscodes/digitalsourcetype, so the complete URI for the recommended Trained Algorithmic Media term is http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia.

Other terms in the vocabulary include:

- Composite with synthetic elements – https://cv.iptc.org/newscodes/digitalsourcetype/compositeSynthetic – covering a composite image that contains some synthetic elements and some elements captured with a camera

- Digital Art – https://cv.iptc.org/newscodes/digitalsourcetype/digitalArt – covering art created by a human using digital tools such as a mouse or digital pencil, or computer-generated imagery (CGI) video

- Virtual recording – https://cv.iptc.org/newscodes/digitalsourcetype/virtualRecording – a recording of a virtual event which may or may not contain synthetic elements, such as a Fortnite game or a Zoom meeting

- and several other options – see the full list with examples in the IPTC Photo Metadata User Guide.

Of course, the original digital source type values covering photographs taken on a digital camera or phone (digitalCapture), scans from negatives (negativeFilm), and images digitised from print (print) are also valid and may continue to be used. We have, however, retired the term “softwareImage”, which is now deemed to be too generic. We recommend using one of the newer terms in its place.
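The relationship between the vocabulary URL and the individual term URIs can be sketched in a few lines of Python. This is a hedged example, not an IPTC-provided library: the helper names `term_uri`, `term_from_uri`, `is_trained_algorithmic_media` and `is_retired` are hypothetical, and the list of terms only covers the identifiers mentioned in this post. Because both `http` and `https` forms of the URIs appear above, the sketch accepts either scheme when reading a URI.

```python
from typing import Optional
from urllib.parse import urlsplit

VOCAB_HOST = "cv.iptc.org"
VOCAB_PATH = "/newscodes/digitalsourcetype/"

# Only the term identifiers named in this post; see the full vocabulary for the rest.
KNOWN_TERMS = {
    "trainedAlgorithmicMedia",
    "compositeSynthetic",
    "digitalArt",
    "virtualRecording",
    "digitalCapture",
    "negativeFilm",
    "print",
}
RETIRED_TERMS = {"softwareImage"}


def term_uri(term: str) -> str:
    """Build the complete URI for a term from the official vocabulary URL."""
    return f"http://{VOCAB_HOST}{VOCAB_PATH}{term}"


def term_from_uri(uri: str) -> Optional[str]:
    """Return the term identifier, or None if the URI is not in this vocabulary."""
    parts = urlsplit(uri)
    if parts.scheme not in ("http", "https") or parts.netloc != VOCAB_HOST:
        return None
    if not parts.path.startswith(VOCAB_PATH):
        return None
    return parts.path[len(VOCAB_PATH):] or None


def is_trained_algorithmic_media(uri: str) -> bool:
    """True if the URI is the term recommended for AI-generated media."""
    return term_from_uri(uri) == "trainedAlgorithmicMedia"


def is_retired(uri: str) -> bool:
    """True if the URI refers to a term this post describes as retired."""
    return term_from_uri(uri) in RETIRED_TERMS


recommended = term_uri("trainedAlgorithmicMedia")
```

A tool reading existing files could use `is_retired` to flag values that should be migrated to one of the newer terms.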

If you are considering implementing this guidance in AI image generation software, we would love to hear about it so we can offer advice and tell others. Please contact us using the IPTC contact form.

Here is a wrap-up of what IPTC has been up to in 2022, covering our latest work, including updates to most of our key standards.

Two successful member meetings and five member webinars

This year we again held our member meetings online, in May and October. We had over 70 registered attendees each time, from over 40 organisations, which is well over half of our member organisations so it shows that the virtual format works well.

This year we had guests from United Robots, Kairntech, EDRLab, Axate, HAND Identity, RealityDefender.ai, synthetic media consultant Henrik de Gyor and metaverse expert Toby Allen, as well as member presentations from The New York Times, Agence France-Presse, Refinitiv (an LSE Group company), DATAGROUP Consulting, TT Sweden, iMatrics and more. And that’s not even counting our regular Working Group presentations! So we had a very busy three days in May and October.

We also had some very interesting members-only webinars including a deep dive into ninjs 2.0, JournalList and the trust.txt protocol, a joint webinar with the EBU on how Wikidata and IPTC Media Topics can be used together, and a great behind the scenes question-and-answer session with a product manager from Wikidata itself.

Recordings of all presentations and webinars are available to IPTC members in the Members-Only Zone.

A fascinating Photo Metadata Conference

This year’s IPTC Photo Metadata Conference was held online in November and we had over 150 registrants and 19 speakers from Microsoft, CBC Radio Canada, BBC, Adobe, Content Authenticity Initiative, the Smithsonian and more. The general theme was bringing the IPTC Photo Metadata Standard to the real world. Sessions focussed on adoption of the recently-introduced accessibility properties; adoption and interoperability between different software tools, including a new comparison tool that we have introduced; and the use of C2PA and Content Authenticity in newsroom workflows, with demos from the BBC and CBC (with Microsoft Azure).

We also had an interesting session discussing the future of AI-generated images and how metadata could help to identify which images are synthetic, the directions and algorithms used to create them, and whether or not the models were trained on copyrighted images.

Recordings of all sessions are available online.

Presentations at other conferences, work with other organisations

IPTC was represented at the CEPIC Congress in Spain, the DigiTIPS conference run by imaging.org, the Sports Video Group’s content management group, and several Project Origin events.

Our work with C2PA is progressing well. As of version 1.2 of the C2PA Specification, assertions can now include any property from IPTC Photo Metadata Standard and/or IPTC Video Metadata Hub. C2PA support is growing in tools and is now available in Adobe Photoshop.

IPTC is also working with Project Origin on enabling C2PA in the news industry.

We had an IPTC member meet-up at the NAB Show in Las Vegas in May.

We also meet regularly with Google, schema.org, CIPA (the camera-makers behind the Exif standard), ISO, CEPIC and more.

Standard and Working Group updates

- Our IPTC NewsCodes vocabularies had regular updates each quarter, including 12 new terms and at least 20 retired terms. See the details in our news posts about the September Update, July Update, May Update, and the February Update (in time for the Winter Olympics). We also extended the Digital Source Type vocabulary specifically to address “synthetic media” or AI-generated content.

- The News in JSON Working Group released ninjs 1.4, a parallel release for those who can’t upgrade to ninjs 2.0, which was released in 2021. We published a case study showing how Alamy uses ninjs 2.0 for its content API.

- NewsML-G2 v2.31 includes support for financial instruments without the need to attach them to organisations.

- Photo Metadata Standard 2022.1 includes a Contributor structure aligned with Video Metadata Hub, which can handle people who worked on a photograph but did not press the shutter, such as make-up artists, stylists or set designers.

- The Sports Content Working Group is working on the IPTC Sport Schema. It is still pre-release, but we are showing it to various stakeholders for feedback before a wider release. If you are interested, please let me know!

- Video Metadata Hub 1.4 includes new properties for accessibility, content warnings and AI-generated content, and clarifies the meanings of many other properties.

New faces at IPTC

We waved farewell to Johan Lindgren of TT as a Board Member, after five years of service. Thankfully Johan is staying on as Lead of the News in JSON Working Group.

We welcomed long-time member Heather Edwards of The Associated Press as our newest board member.

We welcomed Activo, Data Language, Denise Kremer, MarkLogic, Truefy, Broadcast Solutions and Access Intelligence as new IPTC members, plus Swedish publisher Bonnier News who are joining at the start of 2023. We’re very happy to have you all as members!

If you are interested in joining, please fill out our membership enquiry form.

Web site updates

We launched a new, comprehensive navigation bar on this website, making it easier to find our most important content.

We have also just launched a new section highlighting the “themes” that IPTC is watching across all of our Working Groups.

We would love to hear what you think about the new sections, which hopefully bring the site to life.

Best wishes to all for a successful 2023!

Thanks to everyone who has supported IPTC this year, whether as members, speakers at our events, contributors to our standards development or software vendors implementing our standards. We look forward to working with you more in the coming year.

If you have any questions or comments, you can contact me directly at mdirector@iptc.org.

Best wishes,

Brendan Quinn

Managing Director, IPTC

The IPTC Video Metadata Working Group is proud to announce the release of version 1.4 of the group’s standard, Video Metadata Hub.

See the updated properties table, updated mappings table and updated guidelines document.

All changes can be used immediately in Video Metadata Hub use cases, particularly in C2PA assertions (described in our recent post).

Version 1.4 introduces several new properties, including accessibility properties, and changes several existing ones to align the standard more closely with the IPTC Photo Metadata Standard:

- Content Warning: signals to viewers such as drug references, nudity or violence, and health warnings such as flashing lights.

- Digital Source Type: whether the content was created by a digital camera, scanned from film, or created using or with the assistance of a computer.

- Review Rating: a third-party review such as a film’s rating on Rotten Tomatoes.

- Workflow Rating: equivalent to the XMP rating field, a numeric scale generally used by creators to tag shots/takes/videos that are of higher quality.

- Alt Text (Accessibility): short text describing the video to those who cannot view it.

- Extended Description (Accessibility): longer description more fully describing the purpose and meaning of the video, elaborating on the information given in the Alt Text (Accessibility) property.

- Timed Text Link: refers to an external file used for text with timing information using a standard such as WebVTT or W3C Timed Text, usually used for subtitles, closed captions or audio description.

- Date Created: modified description to align it with Photo Metadata.

- Rating: modified description to make it more specifically about audience content classifications such as MPA ratings.

- Data Displayed on Screen: changed description.

- Keywords: pluralised label to match the equivalent in Photo Metadata.

- Title: changed data type to allow multiple languages.

- Transcript: changed data type to allow multiple languages.

- Copyright Year: changed description to add “year of origin of the video”.

- Embedded Encoded Rights Expression: property label changed from “Rights and Licensing Terms (1)” to clarify and align with Photo Metadata.

- Linked Encoded Rights Expression: property label changed from “Rights and Licensing Terms (2)” to clarify and align with Photo Metadata.

- Copyright Owner: label changed from “Rights Owner” to align with Photo Metadata.

- Source (Supply Chain): changed label and XMP property to align it with Photo Metadata.

- Qualified Link with Language: used by Timed Text Link, specifies an external file along with its role (eg “audio description”) and human language (eg “Spanish”).

- “Embedded Encoded Rights Expression Structure”: label changed from “Embedded Rights Expression Structure” to align with Photo Metadata.

- “Linked Encoded Rights Expression Structure”: label changed from “Linked Rights Expression Structure” to align with Photo Metadata.

- Data type of “Role” in the “Entity with Role” structure was changed from URI to Text to align with Photo Metadata.
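To give a feel for how some of these properties fit together, here is a minimal Python sketch of a video record that carries a multi-language Title, the two accessibility properties, a Digital Source Type and a Timed Text Link built from a Qualified Link with Language. It is an illustration only: the class names, field names and example URLs are hypothetical and are not the official Video Metadata Hub identifiers or serialisation, and the use of short language tags such as "es" is also an assumption. Please check the properties table for the real names and data types.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class QualifiedLinkWithLanguage:
    """Hypothetical model of the Qualified Link with Language structure."""
    link: str       # URL of the external file, e.g. a WebVTT file
    role: str       # what the file is for, e.g. "audio description"
    language: str   # human language of the file (assumed here to be a tag like "es")


@dataclass
class VideoRecord:
    """Hypothetical record using a few Video Metadata Hub 1.4 properties."""
    title: dict                 # language tag -> title text (multiple languages)
    alt_text: str               # Alt Text (Accessibility)
    extended_description: str   # Extended Description (Accessibility)
    digital_source_type: str    # a term from the Digital Source Type vocabulary
    content_warnings: list = field(default_factory=list)
    timed_text_links: list = field(default_factory=list)


def build_example_record() -> VideoRecord:
    """Build an illustrative record; all values are made up."""
    return VideoRecord(
        title={"en": "Harbour at dawn", "es": "El puerto al amanecer"},
        alt_text="Fishing boats leaving a harbour at sunrise.",
        extended_description=(
            "A two-minute sequence showing fishing boats leaving the harbour, "
            "used to illustrate a report on the local fishing industry."
        ),
        digital_source_type=(
            "http://cv.iptc.org/newscodes/digitalsourcetype/digitalCapture"
        ),
        content_warnings=["flashing lights"],
        timed_text_links=[
            QualifiedLinkWithLanguage(
                link="https://example.com/harbour-audio-description.es.vtt",
                role="audio description",
                language="es",
            )
        ],
    )


def to_json(record: VideoRecord) -> str:
    """Serialise the record, including nested structures, to JSON text."""
    return json.dumps(asdict(record), indent=2, ensure_ascii=False)


if __name__ == "__main__":
    print(to_json(build_example_record()))
```

Keeping the Timed Text Link as a list of small structures, rather than a single URL, mirrors the idea that one video can point to several external files, each with its own role and language.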

The Video Metadata Hub mapping tables also include mappings to the DPP AS-11 and MovieLabs MDDF formats.

The Video Metadata Hub Generator can be used to explore the properties in the standard.

Please contact IPTC or the public Video Metadata discussion group with any questions or suggestions.