Made with Bing Image Creator. Powered by DALL-E.

Following the IPTC’s recent announcement that rights holders can exclude images from generative AI with IPTC Photo Metadata Standard 2023.1, the IPTC Video Metadata Working Group is very happy to announce that the same capability now exists for video, through IPTC Video Metadata Hub version 1.5.

The “Data Mining” property has been added to this new version of IPTC Video Metadata Hub, which was approved by the IPTC Standards Committee on October 4th, 2023. Because it uses the same XMP identifier as the Photo Metadata Standard property, the existing support in the latest versions of ExifTool will also work for video files.

Therefore, adding metadata to a video file that says it should be excluded from generative AI training is as simple as running this command in a terminal window:

`exiftool -XMP-plus:DataMining="Prohibited for Generative AI/ML training" example-video.mp4`

(Please note that this will only work in ExifTool version 12.67 and above, i.e. any version of ExifTool released after September 19, 2023)

The possible values of the Data Mining property are listed below:

| PLUS URI | Description (use exactly this text with ExifTool) |
|---|---|
| | Unspecified – no prohibition defined |
| | Allowed |
| | Prohibited for AI/ML training |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-GENAIMLTRAINING | Prohibited for Generative AI/ML training |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-EXCEPTSEARCHENGINEINDEXING | Prohibited except for search engine indexing |
| | Prohibited |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEECONSTRAINT | Prohibited, see plus:OtherConstraints |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEEEMBEDDEDRIGHTSEXPR | Prohibited, see iptcExt:EmbdEncRightsExpr |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEELINKEDRIGHTSEXPR | Prohibited, see iptcExt:LinkedEncRightsExpr |
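For scripted or batch tagging, the ExifTool command shown earlier can be wrapped in a small helper. This is a minimal Python sketch, assuming ExifTool 12.67 or later is on the PATH; the function name `data_mining_command` is hypothetical. It checks the value against the descriptions in the table above (copied verbatim, so if ExifTool rejects one, compare its punctuation with ExifTool's own tag documentation) and returns an argument list for `subprocess.run`, which sidesteps shell quoting of the spaces and slashes in the values.

```python
import shlex

# Description strings from the Data Mining table, copied verbatim; ExifTool
# converts each one to its PLUS URI when writing.
DATA_MINING_VALUES = frozenset({
    "Unspecified – no prohibition defined",
    "Allowed",
    "Prohibited for AI/ML training",
    "Prohibited for Generative AI/ML training",
    "Prohibited except for search engine indexing",
    "Prohibited",
    "Prohibited, see plus:OtherConstraints",
    "Prohibited, see iptcExt:EmbdEncRightsExpr",
    "Prohibited, see iptcExt:LinkedEncRightsExpr",
})


def data_mining_command(video_path: str, value: str) -> list[str]:
    """Return an ExifTool argument list that writes XMP-plus:DataMining."""
    if value not in DATA_MINING_VALUES:
        raise ValueError(f"not a Data Mining description: {value!r}")
    # Passed as a list to subprocess.run, no shell is involved, so the
    # spaces and slashes in the value need no extra quoting.
    return ["exiftool", f"-XMP-plus:DataMining={value}", video_path]


if __name__ == "__main__":
    argv = data_mining_command("example-video.mp4",
                               "Prohibited for Generative AI/ML training")
    print(shlex.join(argv))  # the equivalent terminal command
    # To actually write the tag: subprocess.run(argv, check=True)
```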

A corresponding new property “Other Constraints” has also been added to Video Metadata Hub v1.5. This property allows plain-text human-readable constraints to be placed on the video when using the “Prohibited, see plus:OtherConstraints” value of the Data Mining property.

The Video Metadata Hub User Guide and Video Metadata Hub Generator have also been updated to include the new Data Mining property added in version 1.5.

We look forward to seeing video tools (and particularly crawling engines for generative AI training systems) implement the new properties.
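As an illustration of the consuming side, here is a minimal Python sketch of how a crawler might read the property from `exiftool -json -XMP-plus:DataMining <file>` output (by default ExifTool reports the description text rather than the URI) and classify each file. The function names and the three outcome labels are hypothetical, and the policy is an assumption rather than anything IPTC or PLUS prescribes: the "Prohibited, see …" values and any unrecognised text are routed to review, because the actual terms live in another property.

```python
import json
from typing import Optional

# Values treated as "no prohibition" (a policy assumption for this sketch).
NO_PROHIBITION = {"Allowed", "Unspecified – no prohibition defined"}
# Values that rule out generative AI training outright.
BLOCKS_GENAI_TRAINING = {
    "Prohibited for AI/ML training",
    "Prohibited for Generative AI/ML training",
    "Prohibited except for search engine indexing",
    "Prohibited",
}


def classify(value: Optional[str]) -> str:
    """Decide how a crawler should treat one Data Mining value."""
    if value is None or value in NO_PROHIBITION:
        return "no prohibition found"
    if value in BLOCKS_GENAI_TRAINING:
        return "blocked"
    # "Prohibited, see ..." values point at terms held in another property,
    # and unrecognised text is handled conservatively: both need review.
    return "review"


def genai_training_decisions(exiftool_json: str) -> dict[str, str]:
    """Map each SourceFile in ExifTool's JSON output to a decision.

    ExifTool omits the DataMining key entirely when the tag is absent."""
    return {rec["SourceFile"]: classify(rec.get("DataMining"))
            for rec in json.loads(exiftool_json)}
```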

Please feel free to discuss the new version of Video Metadata Hub on the public iptc-videometadata discussion group, or contact IPTC via the Contact us form.

The IPTC is proud to announce that after intense work by most of its Working Groups, we have published version 1.0 of our guidelines document: Expressing Trust and Credibility Information in IPTC Standards.

The document is the culmination of a large amount of work over the past several years across many of IPTC’s Working Groups. It is a guide for news providers on how to embed signals of trust, known as “Trust Indicators”, in their content.

Trust Indicators are ways that news organisations can signal to their readers and viewers that they should be considered trustworthy publishers of news content. For example, one Trust Indicator is a news outlet’s corrections policy: an outlet that provides (and follows) a clear guideline on when and how it updates its news content gives its audience a reason to trust it.

The IPTC guideline does not define these trust indicators: they were taken from existing work by other groups, mainly the Journalism Trust Initiative (an initiative from Reporters Sans Frontières / Reporters Without Borders) and The Trust Project (a non-profit founded by Sally Lehrman of UC Santa Cruz).

The first part of the guideline document shows how trust indicators created by these standards can be embedded into IPTC-formatted news content, using IPTC’s NewsML-G2 and ninjs standards which are both widely used for storing and distributing news content.

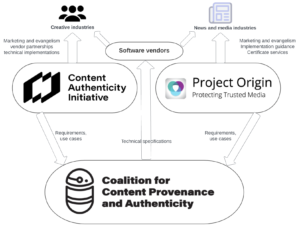

The second part of the IPTC guidelines document describes how cryptographically verifiable metadata can be added to media content. This metadata may express trust indicators but also more traditional metadata such as copyright, licensing, description and accessibility information. This can be achieved using the C2PA specification, which implements the requirements of the news industry via Project Origin and of the wider creative industry via the Content Authenticity Initiative. The IPTC guidelines show how both IPTC Photo Metadata and IPTC Video Metadata Hub metadata can be included in a cryptographically signed “assertion”.

We expect these guidelines to evolve as trust and credibility standards and specifications change, particularly in light of recent developments in signalling content created by generative AI engines. We welcome feedback and will be happy to make changes and clarifications based on recommendations.

The IPTC sends its thanks to all IPTC Working Groups that were involved in creating the guidelines, and to all organisations who created the trust indicators and the frameworks upon which this work is based.

Feedback can be shared using the IPTC Contact Us form.

The IPTC NewsCodes Working Group has approved an addition to the Digital Source Type NewsCodes vocabulary.

The new term, “Composite with Trained Algorithmic Media”, is intended to handle situations where the “synthetic composite” term is not specific enough, for example a composite that is specifically made using an AI engine’s “inpainting” or “outpainting” operations.

The full Digital Source Type vocabulary can be accessed from https://cv.iptc.org/newscodes/digitalsourcetype. It can be downloaded in NewsML-G2 (XML) or SKOS (RDF/XML, Turtle or JSON-LD) formats for integration into content management and digital asset management systems.

The new term can be used immediately with any tool or standard that supports IPTC’s Digital Source Type vocabulary, including the C2PA specification, the IPTC Photo Metadata Standard and IPTC Video Metadata Hub.
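For example, the new term’s URI is formed by appending its code, `compositeWithTrainedAlgorithmicMedia`, to the vocabulary’s base URI in the `http://cv.iptc.org/...` form used for term URIs. The following minimal Python sketch, assuming ExifTool is installed and targeting the Photo Metadata Standard property `XMP-iptcExt:DigitalSourceType`, builds a command that tags an image with the new term; the helper names are hypothetical. The `#` after the tag name tells ExifTool to write the URI as given, without print conversion.

```python
# Base URI for terms in the IPTC Digital Source Type vocabulary.
DIGITAL_SOURCE_TYPE_BASE = "http://cv.iptc.org/newscodes/digitalsourcetype/"


def digital_source_type_uri(code: str) -> str:
    """Return the full term URI for a vocabulary code."""
    return DIGITAL_SOURCE_TYPE_BASE + code


def composite_with_ai_command(image_path: str) -> list[str]:
    """ExifTool arguments that set Digital Source Type to the new term."""
    uri = digital_source_type_uri("compositeWithTrainedAlgorithmicMedia")
    # The '#' suffix disables ExifTool's print conversion for this tag,
    # so the URI is written exactly as given.
    return ["exiftool", f"-XMP-iptcExt:DigitalSourceType#={uri}", image_path]


if __name__ == "__main__":
    print(composite_with_ai_command("composite.jpg"))
```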

Information on the new term will soon be added to IPTC’s Guidance on using Digital Source Type in the IPTC Photo Metadata User Guide.

The IPTC Video Metadata Working Group is proud to announce the release of version 1.4 of the group’s standard, Video Metadata Hub.

See the updated properties table, updated mappings table and updated guidelines document.

All changes can be used immediately in Video Metadata Hub use cases, particularly in C2PA assertions (described in our recent post).

Version 1.4 introduces several new properties, including accessibility properties, and several changes to align it more closely with the IPTC Photo Metadata Standard:

- Content Warning: signals to viewers such as drug references, nudity or violence, and health warnings such as flashing lights.
- Digital Source Type: whether the content was created by a digital camera, scanned from film, or created using or with the assistance of a computer.
- Review Rating: a third-party review such as a film’s rating on Rotten Tomatoes.
- Workflow Rating: equivalent to the XMP rating field, a numeric scale generally used by creators to tag shots/takes/videos that are of higher quality.
- Alt Text (Accessibility): short text describing the video to those who cannot view it.
- Extended Description (Accessibility): a longer description more fully describing the purpose and meaning of the video, elaborating on the information given in the Alt Text (Accessibility) property.
- Timed Text Link: refers to an external file containing text with timing information in a standard such as WebVTT or W3C Timed Text, usually used for subtitles, closed captions or audio description.
- Date Created: modified description to align it with Photo Metadata.
- Rating: modified description to make it more specifically about audience content classifications such as MPA ratings.
- Data Displayed on Screen: changed description.
- Keywords: pluralised label to match the equivalent in Photo Metadata.
- Title: changed data type to allow multiple languages.
- Transcript: changed data type to allow multiple languages.
- Copyright Year: changed description to add “year of origin of the video”.
- Embedded Encoded Rights Expression: property label changed from “Rights and Licensing Terms (1)” to clarify and align with Photo Metadata.
- Linked Encoded Rights Expression: property label changed from “Rights and Licensing Terms (2)” to clarify and align with Photo Metadata.
- Copyright Owner: label changed from “Rights Owner” to align with Photo Metadata.
- Source (Supply Chain): changed label and XMP property to align with Photo Metadata.
- Qualified Link with Language: used by Timed Text Link; specifies an external file along with its role (e.g. “audio description”) and human language (e.g. “Spanish”).
- “Embedded Encoded Rights Expression Structure”: label changed from “Embedded Rights Expression Structure” to align with Photo Metadata.
- “Linked Encoded Rights Expression Structure”: label changed from “Linked Rights Expression Structure” to align with Photo Metadata.
- Data type of “Role” in the “Entity with Role” structure changed from URI to Text to align with Photo Metadata.
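Because Workflow Rating is equivalent to the XMP rating field, tools that read it can apply the XMP convention for `xmp:Rating`: -1 means rejected, 0 means unrated, other values fall between 0 and 5, and a missing value is treated as 0. A minimal Python sketch of that check follows; the function name is hypothetical, and it assumes the value arrives as text, as it does when read from XMP.

```python
from typing import Optional


def parse_workflow_rating(raw: Optional[str]) -> float:
    """Interpret an xmp:Rating-style Workflow Rating value."""
    # An absent or empty value is treated as 0 ("unrated").
    if raw is None or not raw.strip():
        return 0.0
    value = float(raw)
    # -1 means "rejected"; otherwise the value must lie between 0 and 5.
    # NaN fails both tests and is rejected too.
    if value != -1 and not 0 <= value <= 5:
        raise ValueError(f"xmp:Rating out of range: {raw!r}")
    return value
```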

The Video Metadata Hub mapping tables also include mappings to the DPP AS-11 and MovieLabs MDDF formats.

The Video Metadata Hub Generator can be used to explore the properties in the standard.

Please contact IPTC or the public Video Metadata discussion group with any questions or suggestions.

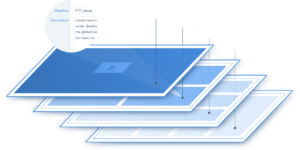

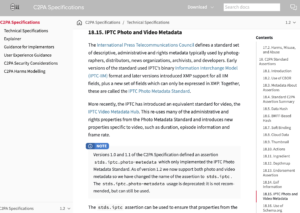

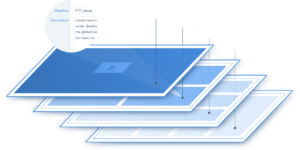

We are happy to announce that IPTC’s work with C2PA, the Coalition for Content Provenance and Authenticity, continues to bear fruit. The latest development is that C2PA assertions can now include properties from both the IPTC Photo Metadata Standard and our video metadata standard, IPTC Video Metadata Hub.

Version 1.2 of the C2PA Specification describes how metadata from either the photo or video standard can be added, using the XMP tag for each field in the JSON-LD markup for the assertion.

For IPTC Photo Metadata properties, the XMP tag name to be used is shown in the “XMP specs” row in the table describing each property in the Photo Metadata Standard specification. For Video Metadata Hub, the XMP tag can be found in the Video Metadata Hub properties table under the “XMP property” column.

We also show in the example assertion how the new accessibility properties can be added using the Alt Text (Accessibility) field which is available in Photo Metadata Standard and will soon be available in a new version of Video Metadata Hub.
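As a rough illustration of that pattern, here is a minimal Python sketch that builds the JSON-LD for an IPTC assertion keyed by XMP tag names, including `Iptc4xmpCore:AltTextAccessibility` for Alt Text (Accessibility). The assertion label `stds.iptc`, the field values and the helper name are assumptions for illustration; check the label, the `@context` and how language-alternative values should be represented against the C2PA specification version you target before signing anything.

```python
import json

# Assumed assertion label; confirm against the C2PA specification you target.
ASSERTION_LABEL = "stds.iptc"

# JSON-LD context mapping the XMP prefixes used below to their namespaces.
CONTEXT = {
    "dc": "http://purl.org/dc/elements/1.1/",
    "photoshop": "http://ns.adobe.com/photoshop/1.0/",
    "Iptc4xmpCore": "http://iptc.org/std/Iptc4xmpCore/1.0/xmlns/",
}


def iptc_assertion(fields: dict) -> dict:
    """Wrap IPTC fields, keyed by their XMP tag names, as an assertion."""
    return {"label": ASSERTION_LABEL, "data": {"@context": CONTEXT, **fields}}


assertion = iptc_assertion({
    "dc:creator": ["Jane Doe"],             # Creator (example value)
    "photoshop:DateCreated": "2022-04-28",  # Date Created (example value)
    # Alt Text (Accessibility), using its XMP tag name as the key
    "Iptc4xmpCore:AltTextAccessibility":
        "A firefighter sprays water on a burning warehouse roof.",
})
print(json.dumps(assertion, indent=2))
```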

The National Association of Broadcasters (NAB) Show wrapped up its first face-to-face event in three years last week in Las Vegas. In spite of the name, this is an internationally attended trade conference and exhibition showcasing equipment, software and services for film and video production, management and distribution. There were 52,000 attendees, down from a typical 90,000 to 100,000, and booths were somewhat less densely packed; overall, though, the show was reminiscent of pre-COVID days. A few members of IPTC met while there: Mark Milstein (vAIsual), Alison Sullivan (MGM Resorts), Phil Avner (Associated Press) and Pam Fisher (The Media Institute). Kudos to Phil, who was working, showcasing ENPS on the AP stand while the others walked the exhibition floor.

NAB is a long-running event and several large vendors have large ‘anchor’ booths. Some, such as Panasonic and Adobe, reduced their normal NAB booth size, while Blackmagic had its usual ‘city block’-sized presence, teeming with traffic. In some ways the reduced booth density was ideal for visitors: plenty of tables and chairs populated the open areas, making more meeting and refreshment space available. The NAB exhibition is substantially more widely attended than the conference, and this year several theatres were provided on the show floor for sessions that any ‘exhibits only’ attendee could watch. Some content is now available here: https://nabshow.com/2022/videos-on-demand/

For the most part this was a show of ‘consolidation’ rather than ‘innovation’: exhibitors were enjoying welcoming their partners and customers face-to-face rather than launching significant new products. Codecs standardised during the past several years were finally reaching mainstream support, with AV1, VP9 and HEVC well represented across vendors. SVT-AV1 (Scalable Video Technology for AV1) was particularly prevalent, having been well optimised and made available license-free by its contributors. VVC (Versatile Video Coding), a more recent and more advanced standard, is still too computationally intensive for commercial use, though a few exhibitors (e.g. Fraunhofer) mentioned it on their stands.

IP is now fairly ubiquitous within broadcast ecosystems. To consolidate further, an IP Showcase booth illustrating support across standards bodies and professional organisations championed more sophisticated adoption. A pyramid graphic showing a cascade of ‘widely available’ to ‘rarely available’ sub-systems encouraged deeper adoption.

Super Resolution – raising the game for video upscaling

One of the show floor sessions, “Improving Video Quality with AI”, presented advances by iSIZE and Intel. The Intel technology, which concerns “Super Resolution”, may be particularly interesting to IPTC members. I have followed the subject for over 20 years, so for me this was a personal highlight of the show.

Super Resolution is a technique for creating higher resolution content from smaller originals. For example, achieving a professional quality 1080p video from a 480p source, or scaling up a social media-sized image for feature use.
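To put the 480p-to-1080p example in numbers, assume a 16:9 source of 854 × 480 pixels upscaled to 1920 × 1080 (the 854-pixel width is an assumption; a 4:3 source would be 640 pixels wide):

$$
\frac{1080}{480} = 2.25,
\qquad
\frac{1920 \times 1080}{854 \times 480} = \frac{2\,073\,600}{409\,920} \approx 5.06
$$

Each dimension grows by a factor of 2.25, but the pixel count grows about fivefold, so roughly four out of every five output pixels have to be inferred rather than copied from the source.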

A few years ago a novel and highly effective Super Resolution method was developed (“RAISR”, see https://arxiv.org/abs/1606.01299); this represented a major discontinuity in the field, albeit with the usual mountain of investment and work needed to take it from ‘R’ (research) to ‘D’ (development).

This is exactly what Intel have done, and the resulting toolsets will be made available at no cost at the company’s Open Visual Cloud repository at the end of May.

Intel invested four years in improving the AI/ML algorithms (having created a massive ground truth library for learning), optimising to CPUs for performance and parallelisation, and then engineering the ‘applied’ tools developers need for integration (e.g. Docker containers, FFmpeg and GStreamer plug-ins). Performance will now be commercially robust.

The visual results are astonishing, and could have a major impact on the commercial potential of photographic and film/video collections needing to reach much higher resolutions or even to repair ‘blurriness’.

Next year’s event is the centennial of the first NAB Show and takes place from April 15th-19th in Las Vegas.

– Pam Fisher – Lead, IPTC Video Metadata Working Group

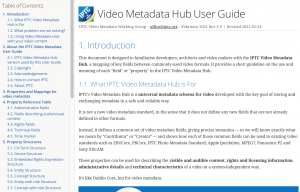

The IPTC Video Metadata Working Group is happy to announce the 1.0 version of the IPTC Video Metadata Hub User Guide.

The guide introduces IPTC’s Video Metadata Hub recommendation and explains how it can be used to solve metadata management problems in any organisation that processes video content, from news agencies to advertising agencies; libraries, galleries and museums; long-form video producers such as broadcasters and movie studios; and stock video services.

As well as explaining the details of each field in the IPTC Video Metadata Hub standard, it shows through a set of use cases how it can be used in a variety of common scenarios to store rights, descriptive and administrative metadata for video content.

Pam Fisher, group lead, and the IPTC’s Video Metadata Working Group welcome feedback on the document. If your organisation handles video content, please read it and let us know what you think and what could be explained better. Comments can be sent via this site’s Contact Us form or to the public Video Metadata Hub discussion list at https://groups.io/g/iptc-videometadata.

The guide can be seen at https://iptc.org/std/videometadatahub/userguide/.

On July 1st 2020, IPTC was invited to participate in an online workshop held by the Arab States Broadcasting Union (ASBU).

In a joint presentation, Brendan Quinn (IPTC Managing Director) and Robert Schmidt-Nia (Chair of IPTC and consultant with DATAGROUP Consulting Services) spoke on behalf of IPTC and Jürgen Grupp (data architect with the German public broadcaster SWR) spoke on behalf of the European Broadcasting Union.

The invitation was extended to IPTC and EBU because ASBU is looking at creating a common framework for sharing content between ASBU member broadcasters.

Jürgen Grupp started with an overview of why metadata is important in broadcasting and media organisations, and introduced the EBU’s high-level architecture for media, the EBU Class Conceptual Data Model (CCDM), and the EBUCore metadata set. Jürgen then gave examples of how CCDM and EBUCore are implemented by some European broadcasters.

Next, Brendan Quinn introduced IPTC and the IPTC News Architecture, the underlying logical model behind all of IPTC’s standards. We then took a deep dive into some video-related NewsML-G2 constructs like partMeta (used to describe metadata for parts of a video such as the rights and descriptive metadata for multiple time-based shots within a single video file) and contentSet (used to link to multiple renditions of the same video in different formats, resolutions or quality levels).
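To make the two constructs concrete, here is a minimal Python sketch that assembles a simplified NewsML-G2 fragment with `xml.etree.ElementTree`: a `partMeta` describing one shot by its time range, and a `contentSet` holding two renditions of the same video. The IDs, URLs, time code and rendition values are made up, and the fragment omits required parts of a real `newsItem` (such as `itemMeta` and `contentMeta`), so it is not schema-valid as it stands; the NewsML-G2 specification remains the authority on element and attribute names.

```python
import xml.etree.ElementTree as ET

# NewsML-G2 namespace, serialised as the default namespace.
NAR = "http://iptc.org/std/nar/2006-10-01/"
ET.register_namespace("", NAR)


def q(tag: str) -> str:
    """Qualify a tag name with the NewsML-G2 namespace."""
    return f"{{{NAR}}}{tag}"


def video_fragment() -> ET.Element:
    """Simplified newsItem: one shot-level partMeta and two renditions."""
    item = ET.Element(q("newsItem"))

    # partMeta: metadata for a time-based part (a shot) of the video,
    # linked to the rendition with id "video1" via contentrefs.
    part = ET.SubElement(item, q("partMeta"), partid="shot1", contentrefs="video1")
    ET.SubElement(part, q("timeDelim"), start="00:00:00:00",
                  end="00:00:12:10", timeunit="timeCode")
    ET.SubElement(part, q("description")).text = "Firefighters arrive at the scene."

    # contentSet: several renditions of the same video.
    content_set = ET.SubElement(item, q("contentSet"))
    ET.SubElement(content_set, q("remoteContent"), id="video1",
                  href="https://example.com/clip-1080p.mp4",
                  contenttype="video/mp4", rendition="rnd:highRes",
                  width="1920", height="1080")
    ET.SubElement(content_set, q("remoteContent"),
                  href="https://example.com/clip-360p.mp4",
                  contenttype="video/mp4", rendition="rnd:web",
                  width="640", height="360")
    return item


if __name__ == "__main__":
    print(ET.tostring(video_fragment(), encoding="unicode"))
```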

Then Robert Schmidt-Nia described some real-world examples of implementation of NewsML-G2 and the IPTC News Architecture at broadcasters and news agencies in Europe, in particular touching on the real-world issues of whether to “push” content or to create a “content API” that customers can use to select and download the content that they would like.

A common theme throughout our presentations was that the representation of the data in XML, RDF, JSON or some other format is relatively easy to change, but the important decision is what logical model to use and how to agree on the meaning (semantics) of terms and vocabularies.
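The point can be illustrated with a minimal Python sketch: one hypothetical logical model (`Item`) serialised to both JSON and XML. Switching serialisation takes only a few lines of code; what the fields mean, and which shared vocabulary `subject_uri` draws from (an IPTC Media Topic URI in the usage lines at the end), is the part that has to be agreed between partners.

```python
import json
import xml.etree.ElementTree as ET
from dataclasses import asdict, dataclass


@dataclass
class Item:
    """A hypothetical logical model; the agreed semantics live here."""
    guid: str
    headline: str
    subject_uri: str  # a term URI from a shared controlled vocabulary


def to_json(item: Item) -> str:
    """One representation of the model."""
    return json.dumps(asdict(item))


def to_xml(item: Item) -> str:
    """Another representation of the same model."""
    root = ET.Element("item", guid=item.guid)
    ET.SubElement(root, "headline").text = item.headline
    ET.SubElement(root, "subject", uri=item.subject_uri)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    story = Item("urn:example:story-1", "Budget approved",
                 "http://cv.iptc.org/newscodes/mediatopic/04000000")
    print(to_json(story))
    print(to_xml(story))
```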

A robust question and answer period touched on wide-ranging issues, from the choice between XML, RDF and JSON to extending standardised models and vocabularies, and which decisions need to be made before deciding how to proceed.

This was one of the first meetings of ASBU on this topic and we look forward to assisting them further on their journey to metadata-based content sharing between their members.

The IPTC Video Metadata Working Group is proud to release the latest version of its mapping standard, Video Metadata Hub. Version 1.3 was approved by the IPTC Standards Committee on 13 May 2020.

“We are pleased to release the new version of IPTC Video Metadata Hub,” said Pam Fisher, Lead of the Video Metadata Working Group. “The changes bring improved clarity, add compatibility with and mapping to EIDR (Entertainment ID Registry), and update the mapping to EBUcore for 2020. These changes are part of our commitment to friction-free adoption across all sectors of the media landscape, supporting video interchange.”

Video Metadata Hub includes two components: a core set of recommended metadata properties to be used across all video content, and mappings that show how to implement those core properties in a series of other video standards, including Apple QuickTime, MPEG-7, Sony XDCAM MXF and SMPTE P2 from Panasonic, Canon VideoClip XML, XMP, IPTC Photo Metadata and NewsML-G2, PBCore and schema.org. Video Metadata Hub 1.3 adds a mapping to EIDR Data Fields 2.0.

All feedback on Video Metadata Hub should be directed to the public discussion group at https://groups.io/g/iptc-videometadata.

This post is part of a series about the IPTC Spring Meeting 2019 in Lisbon, Portugal. See day 1 writeup and the day 3 writeup.

Tuesday was our biggest day in terms of content and also in terms of people! We had 40 people in the meeting room, which was a tight squeeze, so thanks to everyone for your understanding!

The topic focus for Day 2 was Photo and Video, so it was natural that the day was kicked off by Michael Steidl, lead of the IPTC Photo and Video Working Groups. As we had a lot of new members and new attendees in the audience, Michael gave an overview of how IPTC Photo Metadata has come to where it is today, used by almost all photography providers and even used in Google Image Search results (see our post from last year on that subject). The Photo Metadata Working Group is currently conducting a survey of Photo Metadata usage across publishers, photo suppliers (such as stock photo agencies and news wires), and software makers. Michael gave a quick preview of some of the results, but we won’t spoil anything here: you will have to wait for the full results to be revealed at the 2019 IPTC Photo Metadata Conference in Paris this June. Brendan Quinn also presented a status report on the IPTC Photo Metadata Crawler, which examines usage of IPTC Photo Metadata fields at news providers around the world. This will also be revealed at the Photo Metadata Conference.

Next, invited visitors Ilkka Järstä and Marina Ekroos from Frameright presented their solution to the problem of cropping images for different outlets, for example all of the different sizes required for various social media. They store the crop regions in embedded metadata, which is of great interest to the Photo Metadata Working Group, as we are looking at various options for allowing metadata to describe not only an image as a whole but also a region within an image, in a standardised way.

We had a workshop / discussion session on the recently ratified EU Copyright Directive which will impact all media companies in the next two years. Voted through by the European Parliament this month after intense lobbying from both sides, it could easily be bigger than GDPR, so it’s important for media outlets around the world. Discussion included how and whether IPTC standards could be used to help companies comply with the law. No doubt we will be hearing more about this in the future.

Michael then presented the Video Metadata Working Group‘s status report, including promotional activities at conferences and investigations to see what use cases we can gather from various users of video metadata amongst our members and in the wider media industry.

Then Abdul Hakim from DPP showed a practical use of video metadata in the DPP Metadata for News Exchange initiative, which is based on NewsML-G2. The end-to-end demonstration showed metadata being carried through from shot planning, through the production process, all the way to distribution via Reuters Connect. See our blog post about the Metadata for News Exchange project for more details.

Then Andy Read from BBC presented the BBC’s “Data flow for News” project, taking the principles of metadata being carried through the newsroom along with the content, looking at how to track the cost of production of each item of content and also its “audience value” across platforms to calculate a return on investment figure for all types of content. Iain Smith showed the other side of this project via a live demonstration of the BBC’s newsroom audience measurement system.

After lunch, Gan Lu and Kitty Lan from new IPTC member Yuanben presented their approach to rights protection using blockchain technology. Yuanben run a blockchain-based image registry plus a scanner that detects copyright infringements on the web. Using blockchain as proof of existence has been around for a while but it’s great to see it being used in such a practical context, very relevant for the media industry.
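The post does not describe how Yuanben’s registry works internally, but the general proof-of-existence idea can be sketched: register a cryptographic digest of the file (the blockchain transaction then provides a tamper-evident timestamp), and later recompute the digest to show that the same bytes existed at that time. The minimal Python sketch below covers only the hashing side, with hypothetical helper names. A byte-level hash only matches exact copies; finding resized or re-encoded copies on the web needs perceptual matching, which is not shown here.

```python
import hashlib
from pathlib import Path


def fingerprint(path: Path, chunk_size: int = 1 << 16) -> str:
    """SHA-256 of the file's bytes, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_registered(path: Path, registered_digest: str) -> bool:
    """True if the file is byte-for-byte the one whose digest was registered."""
    return fingerprint(path) == registered_digest
```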

Lastly, another new member Shutterstock was represented by Lúí Smyth who gave us an overview of Shutterstock’s current projects relating to large-scale image management: they have over 260 million images, with over 1 million images added each week! Shutterstock are using the opportunity of refreshing their systems to re-align with IPTC standards and to learn what their suppliers, partners and distributors expect, and we look forward to helping them tackle shared challenges together.