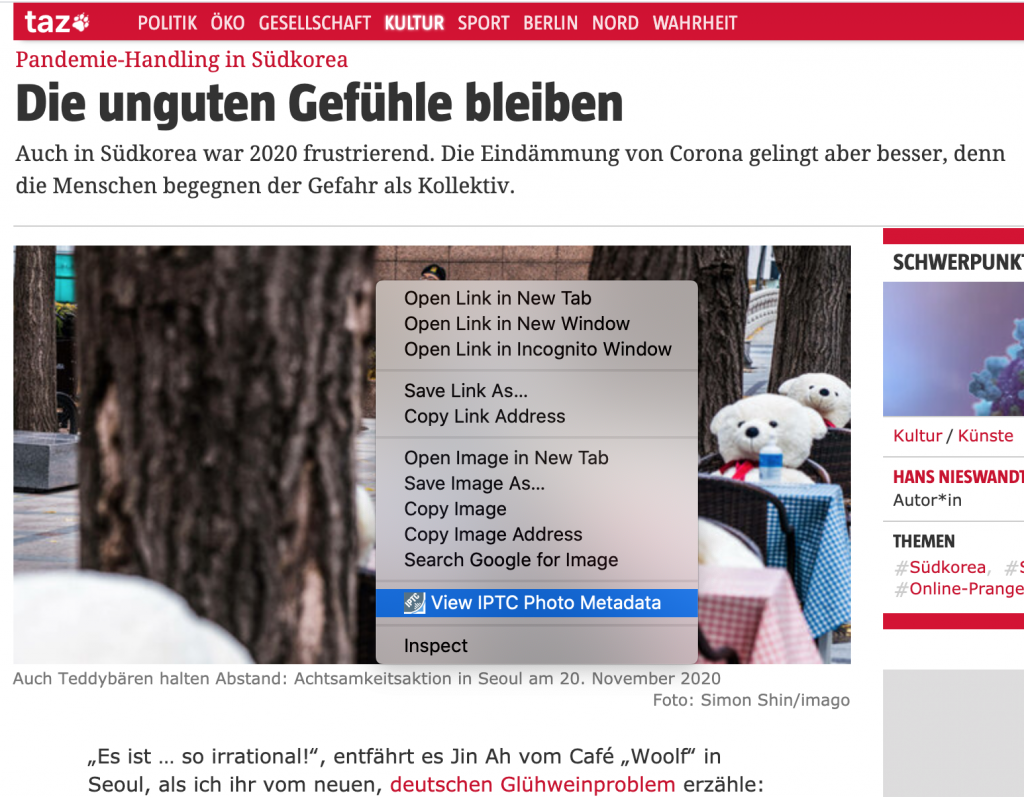

Today we announce the launch of two new browser extensions for viewing IPTC Photo Metadata on web pages.

The GetPMD tool is one of IPTC’s most popular online resources. With GetPMD, users can view the IPTC metadata embedded in any image on the web, whether it was embedded in the IPTC IIM or the ISO XMP format. Until now, however, users had to copy and paste an image’s URL into the tool or install a browser “bookmarklet”.

To make that a little bit easier, we have created the IPTC Photo Metadata Inspector, a simple browser extension that currently works with the Google Chrome and Mozilla Firefox browsers.

With the extension installed, right-clicking an image anywhere on the web opens a context menu with the option “View IPTC Photo Metadata”. Selecting that option takes you to getpmd.iptc.org, where you can see the embedded metadata for that image.

Please note that the Photo Metadata Inspector only works with simple images: it won’t work with embedded video thumbnails or tweets, for example.
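GetPMD shows metadata whether it was embedded as IPTC IIM or as XMP, and the two live in different places inside an image file. The sketch below is not GetPMD's code; it is a minimal Python illustration, assuming a JPEG file, that walks the JPEG marker segments and reports whether an XMP packet (an APP1 segment starting with the Adobe XMP namespace identifier) or an IIM block (an APP13 "Photoshop 3.0" segment containing image resource 0x0404) is present. It only detects the containers and does not decode the fields; the function and helper names are hypothetical.

```python
import struct

XMP_ID = b"http://ns.adobe.com/xap/1.0/\x00"   # APP1 identifier for an XMP packet
PHOTOSHOP_ID = b"Photoshop 3.0\x00"            # APP13 identifier for the IIM container


def find_photo_metadata(data: bytes) -> dict:
    """Report whether a JPEG carries XMP and/or IPTC IIM metadata.

    Walks the marker segments that precede the compressed image data.
    Detects the metadata containers only; the fields are not decoded.
    """
    if data[:2] != b"\xff\xd8":                 # every JPEG starts with SOI
        raise ValueError("not a JPEG file")
    found = {"xmp": False, "iim": False}
    pos = 2
    while pos + 4 <= len(data) and data[pos] == 0xFF:
        marker = data[pos + 1]
        if marker == 0xFF:                      # fill byte before a marker
            pos += 1
            continue
        if marker == 0xDA:                      # SOS: image data follows, stop here
            break
        # The 2-byte big-endian length counts itself but not the marker bytes.
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        payload = data[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(XMP_ID):
            found["xmp"] = True
        elif marker == 0xED and payload.startswith(PHOTOSHOP_ID):
            # IIM records sit in Photoshop image resource block 0x0404.
            found["iim"] = found["iim"] or b"8BIM\x04\x04" in payload
        pos += 2 + length
    return found


def segment(marker: int, payload: bytes) -> bytes:
    """Build one JPEG marker segment (helper for the example below)."""
    return bytes([0xFF, marker]) + struct.pack(">H", len(payload) + 2) + payload


sample = (b"\xff\xd8"
          + segment(0xE1, XMP_ID + b"<x:xmpmeta/>")
          + segment(0xED, PHOTOSHOP_ID + b"8BIM\x04\x04" + bytes(6))
          + b"\xff\xda")
print(find_photo_metadata(sample))  # {'xmp': True, 'iim': True}
```

A file whose metadata was stripped on upload would typically report `False` for both.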

The browser extensions are open source; the code is available from IPTC’s GitHub repository.

Ideas for fixes and new features are welcome.

If you have feedback, please raise an issue on our GitHub repository, post suggestions to the iptc-photometadata@groups.io public discussion list, or contact us via the form on this site.

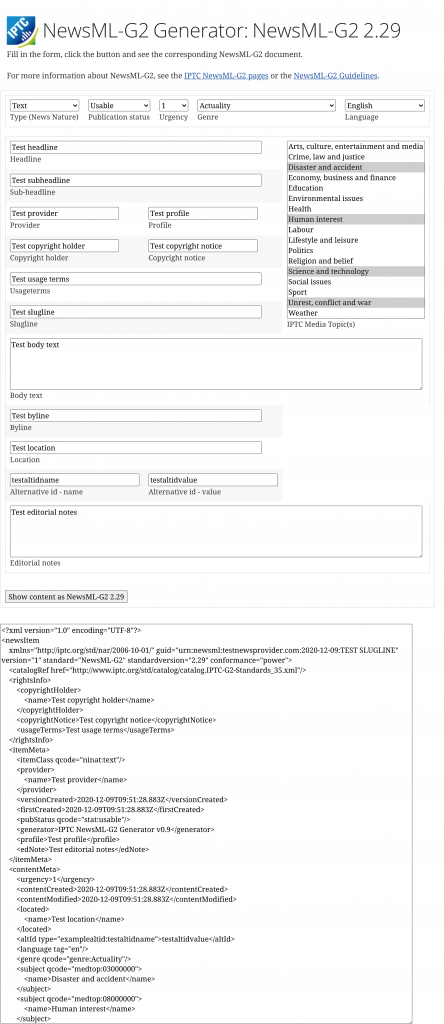

We are pleased to announce the release of the NewsML-G2 Generator, a simple tool to help understand the structure and layout of NewsML-G2 files.

To see how easy it can be to create a valid NewsML-G2 file, simply visit https://iptc.org/std/NewsML-G2/generator/, fill in the form and press the button labelled “Show content as NewsML-G2 2.29”.

Then the box below the form will be filled in with a valid NewsML-G2 document.

The tool demonstrates several key features of NewsML-G2:

- Adding copyright and rights information through the `<copyrightHolder/>`, `<copyrightNotice/>` and `<usageTerms/>` elements

- Adding news-item metadata via the `<itemMeta>` container, such as `<firstCreated/>`, `<versionCreated/>`, item type (text, audio, video, graphic or composite, selected via a drop-down) and publication status (usable, cancelled or withheld, selected via a drop-down)

- Adding subject metadata using IPTC Media Topics, via a selection with all of the top-level categories enabled. Subjects are added using the `<subject/>` construct within the `<contentMeta>` container.

- Referring to the IPTC catalog that declares standard metadata vocabularies, using the `<catalogRef/>` tag

- Adding the body content using embedded NITF. In the future, we will add a radio button so users can choose whether to embed the news content using NITF or XHTML, the other common format IPTC members use to mark up news content.
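To show the structure these features produce, here is a minimal Python sketch (standard library only) that assembles a comparable NewsML-G2 skeleton. It is not the generator's own code, which lives in the NewsML-G2 GitHub repository. The catalog file version in the `catalogRef` URL, the `provider` literal, the GUID and all text values are placeholder assumptions, and the output should be validated against the NewsML-G2 schema before relying on it.

```python
import xml.etree.ElementTree as ET

NAR = "http://iptc.org/std/nar/2006-10-01/"    # NewsML-G2 namespace
NITF = "http://iptc.org/std/NITF/2006-10-18/"  # NITF namespace for the body
ET.register_namespace("", NAR)
ET.register_namespace("nitf", NITF)


def sub(parent, tag, ns=NAR, text=None, **attrs):
    """Append a namespaced child element, optionally with text, and return it."""
    el = ET.SubElement(parent, f"{{{ns}}}{tag}", attrs)
    if text is not None:
        el.text = text
    return el


def build_news_item(guid, headline, body_text, holder, notice, terms, created,
                    item_class="ninat:text", pub_status="stat:usable",
                    subject="medtop:15000000"):
    """Build a minimal newsItem tree (hypothetical helper, not the generator's API)."""
    item = ET.Element(f"{{{NAR}}}newsItem", {
        "guid": guid, "version": "1", "standard": "NewsML-G2",
        "standardversion": "2.29", "conformance": "power"})
    # Assumed catalog URL: check current generator output for the right version.
    sub(item, "catalogRef",
        href="http://www.iptc.org/std/catalog/catalog.IPTC-G2-Standards_36.xml")
    rights = sub(item, "rightsInfo")
    sub(sub(rights, "copyrightHolder"), "name", text=holder)
    sub(rights, "copyrightNotice", text=notice)
    sub(rights, "usageTerms", text=terms)
    meta = sub(item, "itemMeta")
    sub(meta, "itemClass", qcode=item_class)
    sub(meta, "provider", literal="example-provider")  # hypothetical provider
    sub(meta, "versionCreated", text=created)
    sub(meta, "firstCreated", text=created)
    sub(meta, "pubStatus", qcode=pub_status)
    content_meta = sub(item, "contentMeta")
    sub(content_meta, "subject", type="cpnat:abstract", qcode=subject)
    sub(content_meta, "headline", text=headline)
    # Body content embedded as NITF inside <inlineXML>.
    inline = sub(sub(item, "contentSet"), "inlineXML",
                 contenttype="application/nitf+xml")
    body_content = sub(sub(sub(inline, "nitf", NITF), "body", NITF),
                       "body.content", NITF)
    sub(body_content, "p", NITF, text=body_text)
    return item


item = build_news_item(
    guid="urn:example:2020:1", headline="Example headline",
    body_text="Example body text.", holder="Example Agency",
    notice="Copyright 2020 Example Agency", terms="Editorial use only",
    created="2020-11-30T12:00:00Z")
ET.indent(item)  # pretty-printing; requires Python 3.9+
print(ET.tostring(item, encoding="unicode"))
```

The child order (`catalogRef`, `rightsInfo`, `itemMeta`, `contentMeta`, `contentSet`) follows the order the NewsML-G2 schema expects inside `newsItem`.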

Your test content is never saved and only exists within your browser.

The source code of the generator is available in the NewsML-G2 GitHub repository.

This is a simple 1.0 version, and only scratches the surface of the capabilities of NewsML-G2. It is based on the successful ninjs generator used to demonstrate our ninjs standard, which was launched along with ninjs 1.3 earlier this year.

In the future, we are thinking of adding features such as:

- Switch between NITF and XHTML for the content body

- Demonstrate referring to images and video files using `<remoteContent/>`

- Switch between using qcodes and URIs for metadata

- Demonstrate multiple language support in NewsML-G2

- Demonstrate usage of partMeta to show adding metadata to segments in files, such as audio and video

- Integrate the tool with the ninjs generator so users can switch between ninjs and NewsML-G2 with one click!
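In NewsML-G2, a qcode such as `medtop:15000000` is a compact form of a URI: the scheme alias before the colon is declared in a catalog, which maps it to a scheme URI, and appending the code yields the full identifier. A minimal sketch of switching between the two forms follows, assuming a hard-coded subset of alias mappings from the IPTC catalog; a real tool would load the catalog referenced by `<catalogRef/>`, and the function names are hypothetical.

```python
# Subset of scheme aliases from the IPTC G2 catalog (assumed, not exhaustive).
SCHEME_URIS = {
    "medtop": "http://cv.iptc.org/newscodes/mediatopic/",
    "ninat": "http://cv.iptc.org/newscodes/ninature/",
    "stat": "http://cv.iptc.org/newscodes/pubstatusg2/",
    "cpnat": "http://cv.iptc.org/newscodes/cpnature/",
}


def qcode_to_uri(qcode: str, schemes: dict = SCHEME_URIS) -> str:
    """Expand a qcode (alias:code) to a full URI via its scheme alias."""
    alias, sep, code = qcode.partition(":")
    if not sep or not code or alias not in schemes:
        raise ValueError(f"cannot resolve qcode {qcode!r}")
    return schemes[alias] + code


def uri_to_qcode(uri: str, schemes: dict = SCHEME_URIS) -> str:
    """Compact a URI back to a qcode if it falls under a known scheme."""
    for alias, base in schemes.items():
        if uri.startswith(base) and len(uri) > len(base):
            return f"{alias}:{uri[len(base):]}"
    raise ValueError(f"no known scheme for {uri!r}")


print(qcode_to_uri("medtop:15000000"))
# http://cv.iptc.org/newscodes/mediatopic/15000000
print(uri_to_qcode("http://cv.iptc.org/newscodes/pubstatusg2/usable"))
# stat:usable
```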

If you have any more ideas, please raise an issue on the GitHub repository, or contact us via the IPTC Contact Us form.

To learn more about NewsML-G2, the global standard used for distributing news content, see our introduction to NewsML-G2, or the NewsML-G2 Guidelines.

schema.org is the vocabulary used by website owners around the world to make metadata available to search engines and other third-party services. It is widely used to embed machine-readable data in websites for products, store opening times and much more.

It is also used as one of the sources of metadata for the Google search results. The schema.org “license”, “acquireLicensePage” and “creator” properties in a page’s HTML code are used in addition to IPTC Photo Metadata embedded in image files to populate the image panel.

schema.org version 11 was released this week. It contains two new properties on the CreativeWork type (and therefore its subtypes such as ImageObject) that were created to match their equivalent properties in IPTC Photo Metadata: copyrightNotice, which matches the IPTC Photo Metadata Copyright Notice property, and creditText, which matches the IPTC Photo Metadata Credit Line property.

The new properties are not yet supported by Google Images search, but hopefully will be soon.

After this update, the IPTC Photo Metadata properties that map to schema.org, and whether Google Images search results use them, are:

| IPTC Photo Metadata property | Matching schema.org property | Used in Google search results? |
|---|---|---|
| Creator | ImageObject -> creator | Yes |
| Copyright Notice | ImageObject -> copyrightNotice | Not yet |
| Credit Line | ImageObject -> creditText | Not yet |
| Web Statement of Rights | ImageObject -> license | Yes |
| Licensor / Licensor URL | ImageObject -> acquireLicensePage | Yes |
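As an illustration of how the property mapping in the table could be applied on a web page, here is a minimal Python sketch that turns IPTC Photo Metadata values into schema.org `ImageObject` JSON-LD. The function name, the example.com URLs and the sample values are hypothetical, and expressing `creator` as a `Person` object is one common choice rather than a requirement. As the table notes, Google does not yet use `copyrightNotice` or `creditText`.

```python
import json


def image_jsonld(content_url, creator=None, copyright_notice=None,
                 credit_line=None, web_statement=None, licensor_url=None):
    """Map IPTC Photo Metadata values onto schema.org ImageObject properties."""
    data = {"@context": "https://schema.org", "@type": "ImageObject",
            "contentUrl": content_url}
    if creator:                                    # IPTC Creator
        data["creator"] = {"@type": "Person", "name": creator}
    optional = {
        "copyrightNotice": copyright_notice,       # IPTC Copyright Notice
        "creditText": credit_line,                 # IPTC Credit Line
        "license": web_statement,                  # IPTC Web Statement of Rights
        "acquireLicensePage": licensor_url,        # IPTC Licensor URL
    }
    # Only emit properties that actually have a value.
    data.update({key: value for key, value in optional.items() if value})
    return data


doc = image_jsonld(
    "https://example.com/photos/harbour.jpg",
    creator="Jane Doe",
    copyright_notice="© 2020 Example Photo Agency",
    credit_line="Jane Doe / Example Photo Agency",
    web_statement="https://example.com/license-terms",
    licensor_url="https://example.com/buy/harbour")

# Embed the result in the page's HTML.
print('<script type="application/ld+json">')
print(json.dumps(doc, indent=2, ensure_ascii=False))
print("</script>")
```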

The IPTC Photo Metadata Working Group is working on a more comprehensive document showing all possible IPTC Photo Metadata fields with their schema.org and EXIF equivalents. The full mapping document will be released soon.

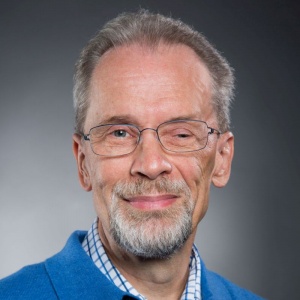

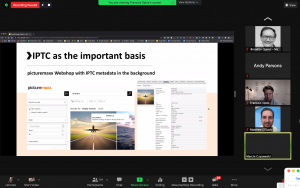

Yesterday Michael Steidl, Lead of the IPTC Photo Metadata Working Group, gave a webinar to Bundesverband professioneller Bildanbieter (BVPA), the Federal Association of Professional Image Providers in Germany.

The webinar focused on the recently introduced image license information for Google image searches and the possible opportunities and risks for the professional image business.

“This year, Google introduced the so-called Licensable Badge for its image search. This feature enables images to be linked to license information and to be displayed in the image search results with a corresponding link. Image seekers from advertising, editorial offices and corporate PR can follow the link to obtain further information on how to use the image. This turns Google image search into a potential marketplace. But how can image providers use the new tool for themselves? Is it worth the effort of storing the necessary metadata? Are there any economic risks involved? Will Google soon become a meta picture agency?”

In the first part of the webinar, Michael Steidl explained which image metadata must be stored in order to display photo credits and “licensable” badges on Google. He also warned participants that some software and web platforms delete image metadata after upload.

In the second part, Alexander Karst explained the possibilities for increasing visibility through the new features and gave an assessment of their effects on the image market.

Thanks to BVPA for hosting Michael for the webinar.

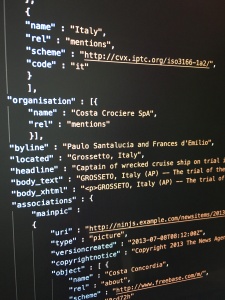

Based on discussions at the recent IPTC Autumn Meeting, the IPTC News in JSON Working Group is updating its view of the use of ninjs and other forms of JSON for handling news content.

If your organisation uses JSON in any way for handling news content, we would like to hear from you.

We are looking for input from IPTC members and non-members, from agencies, publishers, broadcasters and software vendors.

Please help us by filling in the short survey via this Google Form.

Bill Kasdorf, principal at Kasdorf & Associates and individual member of IPTC, has published his latest column at Publishers Weekly, “News You Can Use”, where he promotes IPTC standards including IPTC Photo Metadata and IPTC Media Topics.

As Bill says, “I recently attended the IPTC Autumn Meeting, and at virtually every session, I thought, ‘People in other sectors of publishing ought to know about what the IPTC has to offer them.’”

Bill goes on to discuss IPTC’s work with Google on exposing IPTC Photo Metadata in Google search results and the Licensable Images feature in Google Images search, explaining how those in the publishing industry can use those features to find out who owns the copyright on an image they might want to re-use, and how to obtain a license to use it.

He also talks about IPTC’s Media Topics subject taxonomy, and how publishers could use it for press releases, so they can “be sure the terms you use are the ones the news industry itself uses”.

You can view the article on the Publishers Weekly website.

Thanks, Bill, for sharing your thoughts and for promoting the IPTC cause!

IPTC Managing Director Brendan Quinn spoke at the FIBEP World Media Intelligence Congress 2020 on Wednesday 18th November.

FIBEP is the industry body for the “media intelligence” industry, including media monitoring, public relations and marketing organisations.

FIBEP was founded over 65 years ago (so it is even older than IPTC!), and the FIBEP World Media Intelligence Congress has become one of the largest events for communications, public relations, technology, social media monitoring and marketing professionals alike. It brings together communications professionals from around the world to share best practices, discuss industry developments and innovations, present the latest technology and network, through a variety of presentations and panel discussions from industry leaders. In many ways, FIBEP plays a role for the media intelligence industry similar to the one IPTC plays for the technical side of the news industry.

This year’s theme was “Exploring and Expanding the Media Intelligence World”, and the program included a wide range of best practices and topics relevant to media intelligence and communication professionals, including social media monitoring, privacy and data integrity, copyright, the evolution of data consumption, measurement, PR trends, technological developments and future outlooks for the communications and media intelligence industries.

Brendan was invited to speak about IPTC’s view of the news ecosystem, particularly with a view to online misinformation and disinformation and how the news industry can work together to combat those problems. Brendan discussed IPTC’s work on trust and credibility, including the content of the recent IPTC webinar on Trust and Credibility.

Questions from the media intelligence community included what individuals could do to avoid misinformation and avoid spreading false news on social media. Brendan’s advice to those who want to learn more about misinformation is summarised in the table below:

| Advice | Resources |
|---|---|
| Educate your teams to “think before you share” on social media | Reuters has put together a course on “manipulated media”, including “deep fake” videos (https://www.reuters.com/manipulatedmedia). The EU has created a “Think before you share” campaign (https://euvsdisinfo.eu/think-before-you-share/). |
| Stay in touch with fact-checking organisations | Fact-checking organisations such as FullFact, PolitiFact, FactCheck.org and Snopes often release information about topics that are frequent subjects of disinformation and misinformation, such as vaccines, elections and conspiracy theories. Many local organisations can be found via the International Fact-Checking Network. |

Thanks very much to FIBEP, especially Romina Gersuni, for inviting us to present. We realised during the preparations for the event that IPTC and FIBEP have a lot in common, so hopefully this will be the first of many collaborations between the two organisations!

It is with great sadness that we report that Andrew Read passed away suddenly on Sunday 8 November, 2020.

Andy was a passionate member of the IPTC for over 20 years, first through Reuters, then Thomson Reuters and most recently as the BBC’s main representative at the IPTC.

Andy contributed to NewsML-G2 and the IPTC News Architecture, RightsML and other rights-related work, and followed our other work including Photo Metadata and our sports standards. A frequent attendee and speaker at our face-to-face IPTC member meetings, Andy also helped to organise IPTC’s London meetings, including the special Rights Day in 2013 and Rights Management in News day in 2017.

A committed believer in the benefit of industry organisations, he also contributed to the EBU’s metadata activities and organised collaborations with the DPP. Just a few weeks ago at the IPTC Autumn 2020 Meeting, Andy presented his most recent project at the BBC, an adaptation of the Guardian’s open-source digital asset management system for use as the BBC’s main image asset library. He was always making connections between IPTC members and outside organisations, research projects and startups, and loved bringing people together to discuss what technology can bring to the media industry.

Andy will be fondly remembered by all of his IPTC colleagues for his friendly, supportive manner and willingness to help anyone with anything.

When IPTC members get together it often feels like a family reunion, and Andy has been a key part of the IPTC family for the past 20 years. He will be sorely missed.

UPDATE: If you would like to share your memories of Andy or make a donation to his preferred charity, please see the tribute site: https://andyread.muchloved.com/

We’re very happy that we can make public some of the video recordings from the recent IPTC Photo Metadata Conference 2020, held on Tuesday 13 October 2020.

Thanks to all who attended – we had over 200 registrations for the webinar.

The videos are embedded below or can be viewed directly on YouTube by following the link above the embedded video.

Introduction

Brendan Quinn, Managing Director of IPTC, opened the day with an introduction to IPTC and an overview of what was to come (10 minutes):

Michael Steidl, Photo Metadata WG lead on IPTC Photo Metadata

Michael Steidl presented on why we should care about photo metadata in his presentation “About IPTC Photo Metadata” (48 minutes including Q&A)

Google’s Licensable Images features

Francois Spies, a Product Manager for Google Images in Mountain View, presented on the Licensable Images features which they developed in consultation with IPTC this year.

After Francois’ presentation, Matthew O’Such, VP SEO for Getty Images, and Marcin Czyzewski, CTO of Picturemaxx, joined us to share their views on implementing the changes to IPTC Photo Metadata required to power the Google Licensable Images feature. Then we had a Q&A session including Michael, Francois, Matthew and Marcin.

Unfortunately, Google asked us not to make a recording of their presentation or the panel available. However, the resources that Francois shared are all available via our Quick Guide to IPTC Photo Metadata and Google Images.

Andy Parsons on the Content Authenticity Initiative

Next up, Andy Parsons (Adobe) introduced the Content Authenticity Initiative (47 minutes including Q&A and a wrap-up of the day from Brendan Quinn):

Thanks again to all our speakers and panellists for their contributions. We’re already looking forward to next year’s event!

Currently next year’s IPTC Photo Metadata Conference is scheduled to be in late May 2021 in Mallorca, Spain in conjunction with the CEPIC Congress 2021. If that proves impractical then we will host another online event.

The Digital Media Licensing Association (DMLA) is holding its annual conference this week. IPTC and IPTC members have a strong presence – particularly IPTC’s Video Metadata Hub.

Mark Milstein of IPTC member Microstocksolutions joined in hosting the opening “virtual cocktail party” on Sunday 25 October. Mark is leading efforts to promote IPTC’s Video Metadata Hub at DMLA, see his recent post on DMLA’s site.

Angela Weiss, a staff photographer with IPTC member Agence France-Presse, took part in a panel “Tales from the Trenches – True Stories from Working Photojournalists” on Monday. Then Mark Milstein was back on the “Hot Topics in Tech” panel along with Matthew O’Such of IPTC member Getty Images. Matthew also spoke on our panel at the IPTC Photo Metadata Conference two weeks ago.

On Tuesday, Andy Parsons of IPTC member Adobe is presenting a keynote on the Content Authenticity Initiative. Of course IPTC members already heard Andy speak at the Photo Metadata Conference, and at the Adobe MAX conference last week. Andy is very busy getting the word out!

On Wednesday, Mathieu Desoubeaux of new IPTC member IMATAG speaks on the “Image Protection – Creating a More Secure Ecosystem” panel.

On Thursday, Matthew O’Such of Getty Images is back, along with Francois Spies of Google, who gives a reprise of his IPTC Photo Metadata Conference talk on the Google search “Licensable Images” features. Also on the panel is Roxana Stingu of Alamy, part of IPTC member PA Media.

Thursday afternoon, IPTC metadata gets a front-row seat at DMLA with the “Taming Video Metadata” panel, moderated by Mark Milstein of Microstocksolutions and featuring a presentation by Pam Fisher, IPTC individual member and lead of the IPTC Video Metadata Working Group. On the panel, Zach Bernstein of Storyblocks will be speaking about his implementation of IPTC’s Video Metadata Hub.

The conference also features panels on synthetic content, the legal aspects of the photo licensing industry, artificial intelligence and more.

Thanks to DMLA for putting together such an interesting event!
