The latest update to IPTC NewsCodes, the 2024-Q1 release, was published on Thursday 28th March.

This release includes many updates to our Media Topic subject vocabulary, plus changes to Content Production Party Role, Horse Position, Tournament Phase, Soccer Position, Genre, User Action Type and Why Present.

UPDATE on 11 April: we released a small update to the Media Topics, including Norwegian (no-NB and no-NN) translations of the newly added terms, thanks to Norwegian news agency NTB.

We also made one label change in German: medtop:20000257 from “Alternative-Energie” to “Erneuerbare Energie”. This change was made at the request of German news agency dpa.

Changes to Media Topics vocabulary

As part of the regular review undertaken by the NewsCodes Working Group, many changes were made to the economy, business and finance branch of Media Topics. In addition, a number of changes were made to the conflict, war and peace branch in response to suggestions made by new IPTC member ABC Australia.

5 new concepts: sustainability, profit sharing, corporate bond, war victims, missing in action.

12 retired concepts: justice, restructuring and recapitalisation, bonds, budgets and budgeting, consumers, consumer issue, credit and debt, economic indicator, government aid, investments, prices, soft commodities market.

55 modified concepts: peacekeeping force, genocide, disarmament, prisoners of war, war crime, judge, economy, economic trends and indicators, business enterprise, central bank, consumer confidence, currency, deflation, economic growth, gross domestic product, industrial production, inventories, productivity, economic organisation, emerging market, employment statistics, exporting, government debt, importing, inflation, interest rates, international economic institution, international trade, trade agreements, balance of trade, trade dispute, trade policy, monetary policy, mortgages, mutual funds, recession, tariff, market and exchange, commodities market, energy market, debt market, foreign exchange market, loan market, loans and lending, study of law, disabilities, mountaineering, sport shooting, sport organisation, recreational hiking and climbing, start-up and entrepreneurial business, sharing economy, small and medium enterprise, sports officiating, bmx freestyle.

48 concepts with modified names/labels: judge, emergency incident, transport incident, air and space incident, maritime incident, railway incident, road incident, restructuring and recapitalisation, economic trends and indicators, exporting, importing, interest rates, balance of trade, mortgages, commodities market, soft commodities market, loans and lending, study of law, disabilities, mountain climbing, mountaineering, sport shooting, sport organisation, recreational hiking and climbing, start-up and entrepreneurial business, sports officiating, bmx freestyle, tsunami, healthcare industry, developmental disorder, depression, anxiety and stress, public health, pregnancy and childbirth, fraternal and community group, cyber warfare, public transport, taxi and ride-hailing, shared transport, business reporting and performance, business restructuring, commercial real estate, residential real estate, podcast, financial service, business service, news industry, diversity, equity and inclusion.

57 modified definitions: war crime, economy, economic trends and indicators, business enterprise, central bank, consumer confidence, currency, deflation, economic growth, economic organisation, emerging market, employment statistics, exporting, government debt, importing, inflation, interest rates, international economic institution, trade agreements, trade dispute, trade policy, mortgages, recession, tariff, market and exchange, commodities market, energy market, soft commodities market, debt market, foreign exchange market, loan market, loans and lending, disabilities, mountaineering, sport organisation, start-up and entrepreneurial business, sharing economy, small and medium enterprise, tsunami, healthcare industry, developmental disorder, depression, anxiety and stress, public health, pregnancy and childbirth, cyber warfare, public transport, taxi and ride-hailing, shared transport, business reporting and performance, business restructuring, commercial real estate, residential real estate, podcast, financial service, business service, news industry.

22 modified broader terms (hierarchy moves): peacekeeping force, genocide, disarmament, prisoners of war, business enterprise, central bank, consumer confidence, currency, gross domestic product, industrial production, inventories, productivity, economic organisation, emerging market, interest rates, international economic institution, international trade, monetary policy, mutual funds, tariff, loans and lending, bmx freestyle.

These changes are already available in the en-GB, en-US and Swedish (sv) language variants. Thanks go to TT and Bonnier News for their work on the Swedish translation.

If you would like to contribute or update a translation to your language, please contact us.

Sports-related NewsCodes updates

We also made some changes to our sports NewsCodes vocabularies, which are mostly used by SportsML and IPTC Sport Schema.

New vocabulary: Horse Position

New entries in Tournament Phase vocabulary: Heat, Round of 16

New entry in Soccer Position: manager.

News-related NewsCodes updates

Content Production Party Role: new term Generative AI Prompt Writer which can also be used in Photo Metadata Contributor to declare who wrote the prompt that was used to generate an image.

Genre: new term User-Generated Content.

Why Present: new term associated.

The User Action Type vocabulary, mostly used by NewsML-G2, has had some major changes.

Previously this vocabulary defined terms related to specific social media services or interactions. We have retired/deprecated all site-specific terms (Facebook Likes, Google’s +1, Twitter re-tweets, Twitter tweets).

Instead, we have defined some generic terms: Like, Share, Comment. The pageviews term has been broadened into simply views (although the ID remains as “pageviews” for backwards-compatibility).

Thanks to the NewsCodes Working Group for their work on this release, and to all members and non-members who have suggested changes.

Google has added Digital Source Type support to Google Merchant Center, enabling images created by generative AI engines to be flagged as such in Google’s products such as Google search, maps, YouTube and Google Shopping.

In a new support post, Google reminds merchants who wish their products to be listed in Google search results and other products that they should not strip embedded metadata, particularly the Digital Source Type field which can be used to signal that content was created by generative AI.

We at the IPTC fully endorse this position. We have been saying for years that website publishers should not strip metadata from images. This should also include tools for maintaining online product inventories, such as Magento and WooCommerce. We welcome contact from developers who wish to learn more about how they can preserve metadata in their images.

Here’s the full text of Google’s recommendation:

The IPTC News Architecture Working Group is happy to announce that the NewsML-G2 Guidelines and NewsML-G2 Specification documents have been updated to align with version 2.33 of NewsML-G2, which was approved in October 2023.

The changes include:

Specification changes:

- Adding authoritystatus and digitalsourcetype, which were introduced in NewsML-G2 versions 2.32 and 2.33

- Clarification on how @uri, @qcode and @literal attributes should be treated throughout

- Clarification on how roles should be added to infosource element when an entity plays more than one role

- Clarifying and improving cross-references and links throughout the document

Guidelines changes:

- Documentation of the Authority Status attribute and its related vocabulary, added in version 2.32

- Documentation of the Digital Source Type element and its related vocabulary, added in version 2.33

- Clarification on how @uri, @qcode and @literal attributes should be treated throughout

- Clarifying and improving cross-references and links throughout the document

- Improved the additional resources section including links to related IPTC standards and added links to the SportsML-G2 Guidelines

- See the What’s New in NewsML-G2 2.32 and 2.33 section for full details.
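To illustrate the @qcode attribute mentioned in the lists above: a QCode is a short `alias:code` form that expands to a full concept URI by looking up the alias in a catalog. The following is a minimal sketch of that expansion, not text from the specification. The `CATALOG` dictionary and both function names are hypothetical, and the order in which `concept_identifier` checks the three attributes is an illustration choice rather than the rule the specification lays down.

```python
# Hypothetical catalog: maps a scheme alias (the part before the colon)
# to its scheme URI. Real catalogs are published as NewsML-G2 catalog files.
CATALOG = {
    "medtop": "http://cv.iptc.org/newscodes/mediatopic/",
}


def resolve_qcode(qcode: str, catalog: dict) -> str:
    """Expand an 'alias:code' QCode into a full concept URI."""
    alias, sep, code = qcode.partition(":")
    if not sep or not alias or not code:
        raise ValueError(f"not a QCode: {qcode!r}")
    if alias not in catalog:
        raise KeyError(f"unknown scheme alias: {alias!r}")
    return catalog[alias] + code


def concept_identifier(attrs: dict, catalog: dict) -> tuple:
    """Return ('uri', full URI) for @uri or @qcode, else ('literal', value).

    The precedence used here (uri, then qcode, then literal) is an
    assumption made for this sketch only.
    """
    if "uri" in attrs:
        return ("uri", attrs["uri"])
    if "qcode" in attrs:
        return ("uri", resolve_qcode(attrs["qcode"], catalog))
    if "literal" in attrs:
        return ("literal", attrs["literal"])
    raise ValueError("no @uri, @qcode or @literal attribute present")
```

For example, `resolve_qcode("medtop:20000257", CATALOG)` yields the full Media Topic URI for that concept, while a value carried in @literal is returned unchanged because it has no controlled vocabulary behind it.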

We always welcome feedback on our specification and guideline documents: please use the Contact Us form to ask for clarifications or suggest changes.

Yesterday Nick Clegg, Meta’s President of Global Affairs, announced that Meta would be using IPTC embedded photo metadata to label AI-Generated Images on Facebook, Instagram and Threads.

Meta already uses the IPTC Photo Metadata Standard’s Digital Source Type property to label images generated by its platform. The image to the right was generated using Imagine with Meta AI, Meta’s image generation tool. Viewing the image’s metadata with the IPTC’s Photo Metadata Viewer tool shows that the Digital Source Type field is set to “trainedAlgorithmicMedia” as recommended in IPTC’s Guidance on metadata for AI-generated images.
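As a rough illustration of the check described above, the sketch below scans a file's raw bytes for a Digital Source Type URI and reports the term it finds. It is a crude byte search under stated assumptions, not a real XMP parser: it assumes the XMP packet is stored uncompressed as plain text (as is typical in JPEG files), and it will not see values held only inside a C2PA manifest. The function names are hypothetical.

```python
import re

# Prefix shared by the IPTC Digital Source Type NewsCodes URIs.
DST_PREFIX = "http://cv.iptc.org/newscodes/digitalsourcetype/"

# Matches the URI whether it appears as an XML attribute value or element text,
# and captures the term that follows the prefix.
_DST_PATTERN = re.compile(re.escape(DST_PREFIX.encode("ascii")) + rb"([A-Za-z]+)")


def find_digital_source_type(data: bytes):
    """Return the first Digital Source Type term found in raw bytes, or None."""
    match = _DST_PATTERN.search(data)
    return match.group(1).decode("ascii") if match else None


def is_ai_generated(data: bytes) -> bool:
    """True when the embedded term is 'trainedAlgorithmicMedia'."""
    return find_digital_source_type(data) == "trainedAlgorithmicMedia"
```

In practice one might call `is_ai_generated(open(path, "rb").read())`. A dedicated tool such as the IPTC Photo Metadata Viewer mentioned above, or a proper XMP library, is the more reliable route.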

Clegg said that “we do several things to make sure people know AI is involved, including putting visible markers that you can see on the images, and both invisible watermarks and metadata embedded within image files. Using both invisible watermarking and metadata in this way improves both the robustness of these invisible markers and helps other platforms identify them.”

This approach of both direct and indirect disclosure is in line with the Partnership on AI’s Best Practices on signalling the use of generative AI.

Also, Meta are building recognition of this metadata into their tools: “We’re building industry-leading tools that can identify invisible markers at scale – specifically, the “AI generated” information in the C2PA and IPTC technical standards – so we can label images from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock as they implement their plans for adding metadata to images created by their tools.”

We have previously shared the news that Google, Microsoft, Adobe, Midjourney and Shutterstock will use IPTC metadata in their generated images, either directly in the IPTC Photo Metadata block or using the IPTC Digital Source Type vocabulary as part of a C2PA assertion. OpenAI has just announced that they have started using IPTC via C2PA metadata to signal the fact that images from DALL-E are generated by AI.

A call for platforms to stop stripping image metadata

We at the IPTC agree that this is a great step towards end-to-end support of indirect disclosure of AI-generated content.

As the Meta and OpenAI posts point out, it is possible to strip out both IPTC and C2PA metadata either intentionally or accidentally, so this is not a solution to all problems of content credibility.

Currently, one of the main ways metadata is stripped from images is when they are uploaded to Facebook or other social media platforms. So with this step, we hope that Meta’s platforms will stop stripping metadata from images when they are shared – not just the fields about generative AI, but also the fields regarding accessibility (alt text), copyright, creator’s rights and other information embedded in images by their creators.

Video next?

Meta’s post indicates that this type of metadata isn’t commonly used for video or audio files. We agree, but to be ahead of the curve, we have added Digital Source Type support to IPTC Video Metadata Hub so videos can be labelled in the same way.

We will be very happy to work with Meta and other platforms on making sure IPTC’s standards are implemented correctly in images, videos and other areas.

As we wrap up 2023, we thought it would be useful to give you an update on the IPTC’s work in 2023, including updates to most of our standards.

Two successful member meetings, one in person!

This year we finally held our first IPTC Member meeting in person since 2019, in Tallinn, Estonia. Around 30 people attended in person and 50 attended online, from over 40 organisations. Presentations and discussions ranged from the e-Estonia digital citizen experience to building re-usable news content widgets with Web Components, and of course included generative AI, credibility and fact checking, and more. Here’s our report on the IPTC 2023 Spring Meeting.

For our Autumn Meeting we went back to an online format, with over 50 attendees, and more watching the recordings afterwards (which are available to all members). Along with discussions of generative AI and content licensing at this year’s meetings, it was great to hear the real-world implementation experience of the ASBU Cloud project from the Arab States Broadcasting Union. The system was created by IPTC members Broadcast Solutions, based on NewsML-G2. The DPP Live Production Exchange, led by new members Arqiva, will be another real-world implementation coming soon. We heard about the project’s first steps at the Autumn Meeting.

Also at this year’s Autumn Meeting, we heard from Will Kreth of the HAND Identity platform and saw a demo of IPTC Sport Schema from IPTC member Progress Software (previously MarkLogic). More on IPTC Sport Schema below! All news from the Autumn Meeting is summed up in our post AI, Video in the cloud, new standards and more: IPTC Autumn Meeting 2023.

We’re very happy to say that the IPTC Spring Meeting 2024 will be held in New York from April 15 – 17. All IPTC member delegates are welcome to attend the meeting at no cost. If you are not a member but would like to present your work at the meeting, please get in touch using our Contact Us form.

IPTC Photo Metadata Conference, 7 May 2024: save the date!

Due to several issues, we were not able to run a Photo Metadata Conference in 2023, but we will be back with an online Photo Metadata Conference on 7th May 2024. Please mark the date in your calendar!

As usual, the event will be free and open for anyone to attend.

If you would like to present to the people most interested in photo metadata from around the world, please let us know!

Presentations at other conferences and work with other organisations

IPTC was represented at the CEPIC Congress in France, the EBU DataTech Seminar in Geneva, Sports Video Group Content Management Forum in New York and the DMLA’s International Digital Media Licensing Conference in San Francisco.

We also worked with CIPA, the organisation behind the Exif photo metadata standard, on aligning Exif with IPTC Photo Metadata, and supported them in their work towards Exif 3.0 which was announced in June.

The IPTC will be advising the TEMS project which is an EU-funded initiative to build a “media data space” for Europe, and possibly beyond: IPTC working with alliance to build a European Media Data Space.

IPTC’s work on Generative AI and media

Of course the big topic for media in 2023 has been Generative AI. We have been looking at this topic for several years, since it was known as “synthetic media” and back in 2022 we created a taxonomy of “digital source types” that can be used to describe various forms of machine-generated and machine-assisted content creation. This was a joint effort across our NewsCodes, Video Metadata and Photo Metadata Working Groups.

It turns out that this was very useful, and the IPTC Digital Source Type taxonomy has been adopted by Google, Midjourney, C2PA and others as a way to describe content. Here are some of our news posts from 2023 on this topic:

- IPTC publishes metadata guidance for AI-generated “synthetic media”

- Google announces use of IPTC metadata for generative AI images

- Midjourney and Shutterstock AI sign up to use of IPTC Digital Source Type to signal generated AI content

- Microsoft announces signalling of generative AI content using IPTC and C2PA metadata

- Royal Society/BBC workshop on Generative AI and content provenance

- New “digital source type” term added to support inpainting and outpainting in Generative AI

- IPTC releases technical guidance for creating and editing metadata, including DigitalSourceType

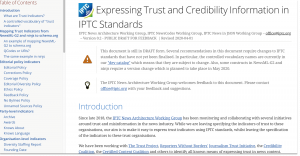

IPTC’s work on Trust and Credibility

After a lot of drafting work over several years, we released the Guidelines for Expressing Trust and Credibility signals in IPTC standards, which show how to embed trust information in the form of “trust indicators”, such as those from The Trust Project, into content marked up using IPTC standards such as NewsML-G2 and ninjs. The guidelines also discuss how media can be signed using the C2PA specification.

We continue to work with C2PA on the underlying specification allowing signed metadata to be added to media content so that it becomes “tamper-evident”. However, the C2PA specification in its current form does not prescribe where the certificates used for signing should come from. To that end, we have been working with Microsoft, BBC, CBC / Radio Canada and The New York Times on the Steering Committee of Project Origin to create a trust ecosystem for the media industry. Stay tuned for more developments from Project Origin during 2024.

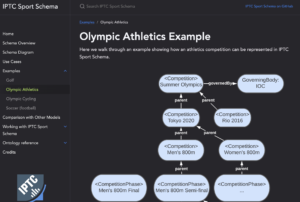

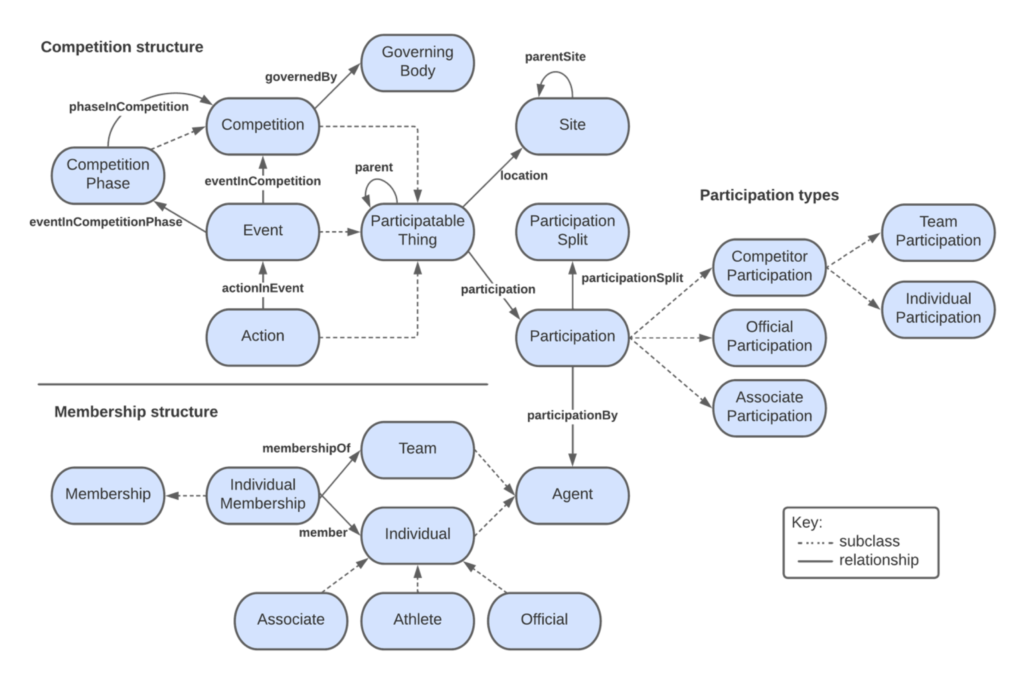

IPTC’s newest standard: IPTC Sport Schema

After years of work, the IPTC Sports Content Working Group released version 1.0 of IPTC Sport Schema. IPTC Sport Schema takes the experience of IPTC’s 10+ years of maintaining the XML-based SportsML standard and applies it to the world of the semantic web, knowledge graphs and linked data.

Paul Kelly, Lead of the IPTC Sports Content Working Group, presented IPTC Sport Schema to the world’s top sports media technologists: IPTC Sport Schema launched at Sports Video Group Content Management Forum.

Take a look at our dedicated site https://sportschema.org/ to see how it works, look at some demonstration data and try out a query engine to explore the data.

If you’re interested in using IPTC Sport Schema as the basis for sports data at your organisation, please let us know. We would be very happy to help you to get started.

Standard and Working Group updates

- Our IPTC NewsCodes vocabularies had two big updates, the NewsCodes 2023-Q1 update and the NewsCodes Q3 2023 update. For our main subject taxonomy Media Topics, over the year we added 12 new concepts, retired 73 under-used terms, and modified 158 terms to make their labels and/or descriptions easier to understand. We also added or updated vocabularies such as Digital Source Type and Authority Status.

- The News in JSON Working Group released ninjs 2.1 and ninjs 1.5 in parallel, so that people who cannot move from the 1.x schema can still get the benefits of new additions. The group is currently working on adding events and planning items to ninjs based on requirements from the DPP Live Production Exchange project: expect to see something released in 2024.

- NewsML-G2 2.32 and NewsML-G2 2.33 were released this year, including support for Generative AI via the Digital Source Type vocabulary.

- The IPTC Photo Metadata Standard 2023.1 allows rightsholders to express whether or not they are willing to allow their content to be indexed by search engines and data mining crawlers, and whether the content can be used as training data for Generative AI. This work was done in partnership with the PLUS Coalition. We also updated the IPTC Photo Metadata Mapping Guidelines to accommodate Exif 3.0.

- Through discussions and workshops at our Member Meetings in 2022 and 2023, we have been working on making RightsML easier to use and easier to understand. Stay tuned for more news on RightsML in 2024.

- Video Metadata Hub 1.5 adds the same properties to allow content to be excluded from generative AI training data sets. We have also updated the Video Metadata Hub Generator tool to generate C2PA-compliant metadata “assertions”.

New faces at IPTC

Ian Young of Alamy / PA Media Group stepped up to become the lead of the News in JSON Working Group, taking over from Johan Lindgren of TT who is winding down his duties but still contributes to the group.

We welcomed Bonnier News, Newsbridge, Arqiva, the Australian Broadcasting Corporation and Neuwo.ai as new IPTC members, plus a very well known name who will be joining at the start of 2024. We’re very happy to have you all as members!

We are always happy to work with more organisations in the media and related industries. If you would like to talk to us about joining IPTC, please complete our membership enquiry form.

Here’s to a great 2024!

Thanks to everyone who gave IPTC your support, and we look forward to working with you in the coming year.

If you have any questions or comments (and especially if you would like to speak at one of our events in 2024!), you can contact us via our contact form.

Best wishes,

Brendan Quinn

Managing Director, IPTC

and the IPTC Board of Directors: Dave Compton (LSE Group), Heather Edwards (The Associated Press), Paul Harman (Bloomberg LP), Gerald Innerwinkler (APA), Philippe Mougin (Agence France-Presse), Jennifer Parrucci (The New York Times), Robert Schmidt-Nia of DATAGROUP (Chair of the Board), Guowei Wu (Xinhua)

The IPTC is happy to announce that it will be working as an affiliate organisation of the TEMS project to build a Trusted European Media Data Space.

The product of a two-year-long tender and award process with the European Commission, TEMS is a joint undertaking of 43 organisations representing hundreds of stakeholders from 14 countries in the cultural and creative sectors, which aims to conceive and implement a common media data space across Europe.

The initiative is supported by the European Commission’s Digital Europe Programme (DIGITAL) and is a core element in the implementation of the European Data Strategy. With an investment of EUR 16.5 million, the consortium represents a milestone in the way the media sector will be able to share and extract value from data. By doing so, TEMS aims to support the economic development and future growth of local and regional media ecosystems across Europe.

Concretely, TEMS will lead the way for the large-scale deployment of cutting-edge services, infrastructures, and platforms. It will also address fighting misinformation, audience analysis, improving data flows in production chains, and supporting the adoption of AI and Virtual Reality technologies.

TEMS will evolve existing media platforms and embryonic data space infrastructures, and will provide open access to a common data space for any interested media stakeholder from any media sub-sector. This will help digital transformation and improve the competitiveness of the European media industry.

IPTC’s role is an advisory one. We will be working with TEMS partners on structuring data models and metadata formats, re-using and extending existing IPTC standards to create the framework that will power data sharing for the media industry and beyond.

The TEMS partner organisations range from global news organisations to data platform providers, broadcasters, archives and media innovation units, as can be seen below.

To follow news from the TEMS project, see http://www.tems-dataspace.eu/ or follow @TEMS_EU on X/Twitter.

The IPTC is happy to announce the latest version of our guidance for mapping between photo metadata standards.

Following our publication of IPTC’s rules for mapping photo metadata between IPTC, Exif and schema.org standards in 2022, the IPTC Photo Metadata Working Group has been monitoring updates in the photo metadata world.

In particular, the IPTC gave support and advice to CIPA while it was working on Exif 3.0 and we have updated our mapping rules to work with the latest changes to Exif expressed in Exif 3.0.

As well as guidelines for individual properties between IPTC Photo Metadata Standard (in both the older IIM form and the newer XMP embedding format), Exif and schema.org, we have included some notes on particular considerations for mapping contributor, copyright notice, dates and IDs.

The IPTC encourages all developers who previously consulted the out-of-date Metadata Working Group guidelines (which haven’t been updated since 2008 and are no longer published) to use this guide instead.

On Tuesday 12 November 2023, a group of news, journalism and media organisations released what they call the “Paris Charter on AI and Journalism.” Created by 17 organisations brought together by Reporters sans frontières and chaired by journalist and Nobel Peace Prize laureate Maria Ressa, the Charter aims to give journalism organisations some guidelines that they can use to navigate the intersection of Artificial Intelligence systems and journalism.

The IPTC particularly welcomes the Charter because it aligns well with several of our ongoing initiatives and recent projects. IPTC technologies and standards give news organisations a way to implement the Charter simply and easily in their existing newsroom workflows.

In particular, we have some comments to offer on some principles:

Principle 3: AI SYSTEMS USED IN JOURNALISM UNDERGO PRIOR, INDEPENDENT EVALUATION

“The AI systems used by the media and journalists should undergo an independent, comprehensive, and thorough evaluation involving journalism support groups. This evaluation must robustly demonstrate adherence to the core values of journalistic ethics. These systems must respect privacy, intellectual property and data protection laws.”

We particularly agree that AI systems must respect intellectual property laws. To support this, we have recently released the Data Mining property in the IPTC Photo Metadata Standard which allows content owners to express any permissions or restrictions that they apply regarding the use of their content in Generative AI training or other data mining purposes. The Data Mining property is also supported in IPTC Video Metadata Hub.

Principle 5: MEDIA OUTLETS MAINTAIN TRANSPARENCY IN THEIR USE OF AI SYSTEMS.

“Any use of AI that has a significant impact on the production or distribution of journalistic content should be clearly disclosed and communicated to everyone receiving information alongside the relevant content. Media outlets should maintain a public record of the AI systems they use and have used, detailing their purposes, scopes, and conditions of use.”

To enable clear declaration of generated content, we have created extra terms in the Digital Source Type vocabulary to express content that was created or edited by AI. These values can be used in both IPTC Photo Metadata and IPTC Video Metadata Hub.

Principle 6: MEDIA OUTLETS ENSURE CONTENT ORIGIN AND TRACEABILITY.

“Media outlets should, whenever possible, use state-of-the-art tools that guarantee the authenticity and provenance of published content, providing reliable details about its origin and any subsequent changes it may have undergone. Any content not meeting these authenticity standards should be regarded as potentially misleading and should undergo thorough verification.”

Through IPTC’s work with Project Origin, C2PA and the Content Authenticity Initiative, we are pushing forward in making provenance and authenticity technology available and accessible to journalists and newsrooms around the world.

In conclusion, the Charter says: “In affirming these principles, we uphold the right to information, champion independent journalism, and commit to trustworthy news and media outlets in the era of AI.”

The IPTC Sports Content Working Group is happy to announce the release of IPTC Sport Schema version 1.0.

The first new IPTC standard to be released in more than 10 years, IPTC Sport Schema is a comprehensive model for the storage, transmission and querying of sports data. It has been tested on real-world use cases that are common in any newsroom or sports organisation.

IPTC Sport Schema has evolved from its predecessor SportsML. In contrast to the document-oriented nature of SportsML, IPTC Sport Schema takes a data-centric approach which is better suited to systems dealing with large volumes of data and also helps with integration across data sets.

“We reached out to many companies dealing with sports content and built up a clear picture of their needs,” says IPTC Sports Content Working Group lead Paul Kelly. “They wanted up-to-date formats, easy querying, the ability to handle e-sports and the ability to cross-reference between different media and data silos. IPTC Sport Schema addresses those requirements with a new basic model at the abstract end, and adhering to common use cases to keep things grounded.”

Content in IPTC Sport Schema is represented in the W3C’s universal Resource Description Framework (RDF), which renders any kind of data as a triple in the form of subject->predicate->object. Each component of a Sport Schema triple has a reference to an ontology, which defines the model at the heart of the standard. Querying is done using the W3C’s SPARQL standard, a kind of SQL for RDF.
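To make the subject->predicate->object idea concrete, here is a small sketch in plain Python that stores triples as tuples and answers a roster-style question by matching patterns, which is roughly what a SPARQL basic graph pattern does. It is an illustration only: it does not use an RDF library, and every `ex:` name and the sample data are invented for this example rather than taken from the IPTC Sport Schema ontology.

```python
# Hypothetical sample data: the "ex:" names are made up for illustration
# and are not terms from the IPTC Sport Schema ontology.
TRIPLES = [
    ("ex:team1", "ex:name", "Example United"),
    ("ex:player1", "ex:name", "A. Player"),
    ("ex:player1", "ex:memberOf", "ex:team1"),
    ("ex:player2", "ex:name", "B. Player"),
    ("ex:player2", "ex:memberOf", "ex:team1"),
]


def match(triples, s=None, p=None, o=None):
    """Yield triples matching the pattern; None acts as a wildcard."""
    for triple in triples:
        if all(want is None or want == got for want, got in zip((s, p, o), triple)):
            yield triple


def roster(triples, team):
    """Sorted names of every subject that is ex:memberOf the given team."""
    names = []
    for member, _, _ in match(triples, p="ex:memberOf", o=team):
        # Join step: follow each member to its ex:name value.
        names.extend(name for _, _, name in match(triples, s=member, p="ex:name"))
    return sorted(names)
```

Calling `roster(TRIPLES, "ex:team1")` walks two patterns, membership and then name, in the same way a SPARQL query would join two triple patterns on a shared variable.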

“The IPTC has been working on RDF and semantic web standards for more than 10 years, going back to rNews and RightsML,” said IPTC Managing Director Brendan Quinn. “So we are very happy to release another semantic standard that can help organisations to publish and share sports data in a vendor-neutral, interoperable way.”

Being RDF-based, IPTC Sport Schema can be rendered in XML, JSON and the simple Turtle format, and can be converted easily between all three formats using free tools such as Apache Jena.

“Those familiar with SportsML or SportsJS should recognise the basic components of Sport Schema,” says Kelly, “both in the ontology and in the sports vocabularies introduced with SportsML 3.0, which were designed specifically with semantic technologies in mind.”

To support take-up and share information about the new standard, the IPTC has created a dedicated website, sportschema.org. The site contains:

- a list of use cases which were used to help design the schema and data structures

- example instance diagrams for various sports to help understand how the model can be applied to team, individual and other types of sports

- a data dictionary comparing IPTC Sport Schema to other prominent sport schemas (SportsML, ODF, BBC Ontology, etc.)

- A detailed and comprehensive IPTC Sport Schema ontology reference showing all classes, relationships and properties.

- A tool to validate Sport Schema data using the SHACL format to ensure RDF triples adhere to the specification (equivalent to XML Schema or JSON Schema)

- A tool to convert SportsML documents to IPTC Sport Schema data

- A set of unit tests and sample data files that were used to develop and maintain Sport Schema, including a bespoke unit test framework that ensures our example SPARQL queries continue to satisfy our use cases as the model evolves.

Those wishing to try out some SPARQL queries against some sports data should visit Sport Schema’s query endpoint. It includes example queries showing how to build a team roster, league standings and more from our sample data sets.

For more information on IPTC Sport Schema, see the IPTC’s landing pages on the IPTC Sport Schema standard, the standalone site sportschema.org, or the project’s GitHub repository.

If you are interested in joining those who are working on implementing IPTC Sport Schema in your project or your organisation, we would love to hear from you. Please contact us via IPTC’s contact form.

Made with Bing Image Creator. Powered by DALL-E.

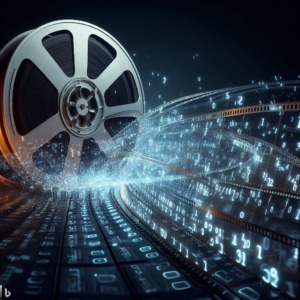

Following the IPTC’s recent announcement that Rights holders can exclude images from generative AI with IPTC Photo Metadata Standard 2023.1, the IPTC Video Metadata Working Group is very happy to announce that the same capability now exists for video, through IPTC Video Metadata Hub version 1.5.

The “Data Mining” property has been added to this new version of IPTC Video Metadata Hub, which was approved by the IPTC Standards Committee on October 4th, 2023. Because it uses the same XMP identifier as the Photo Metadata Standard property, the existing support in the latest versions of ExifTool will also work for video files.

Therefore, adding metadata to a video file that says it should be excluded from Generative AI indexing is as simple as running this command in a terminal window:

exiftool -XMP-plus:DataMining="Prohibited for Generative AI/ML training" example-video.mp4

(Please note that this will only work in ExifTool version 12.67 and above, i.e. any version of ExifTool released after September 19, 2023.)
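For batch workflows, the same call can be wrapped in a script. The following is a minimal Python sketch, not an official IPTC tool: the function names `parse_version`, `build_data_mining_command` and `tag_video` are made up for illustration, and it assumes `exiftool` is installed and on the PATH. It checks the reported version against the 12.67 minimum mentioned above before writing the tag.

```python
import shutil
import subprocess

# Minimum ExifTool version stated in the post above.
MIN_EXIFTOOL_VERSION = (12, 67)


def parse_version(text):
    """Turn a version string such as '12.67' into a comparable tuple (12, 67)."""
    major, _, minor = text.strip().partition(".")
    return (int(major), int(minor or 0))


def build_data_mining_command(video_path,
                              value="Prohibited for Generative AI/ML training"):
    """Return the argument list equivalent to the command line shown above."""
    return ["exiftool", f"-XMP-plus:DataMining={value}", video_path]


def tag_video(video_path, value="Prohibited for Generative AI/ML training"):
    """Write the Data Mining property, refusing to run on an older ExifTool."""
    if shutil.which("exiftool") is None:
        raise RuntimeError("exiftool was not found on the PATH")
    # 'exiftool -ver' prints the installed version number.
    reported = subprocess.run(["exiftool", "-ver"], capture_output=True,
                              text=True, check=True).stdout
    if parse_version(reported) < MIN_EXIFTOOL_VERSION:
        raise RuntimeError(f"ExifTool {reported.strip()} is older than 12.67")
    subprocess.run(build_data_mining_command(video_path, value), check=True)


if __name__ == "__main__":
    # Show the command without touching any file.
    print(build_data_mining_command("example-video.mp4"))
```

Passing the arguments as a list avoids shell quoting problems with the spaces and slash in the property value.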

The possible values of the Data Mining property are listed below:

| PLUS URI | Description (use exactly this text with ExifTool) |
| --- | --- |
| | Unspecified – no prohibition defined |
| | Allowed |
| | Prohibited for AI/ML training |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-GENAIMLTRAINING | Prohibited for Generative AI/ML training |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-EXCEPTSEARCHENGINEINDEXING | Prohibited except for search engine indexing |
| | Prohibited |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEECONSTRAINT | Prohibited, see plus:OtherConstraints |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEEEMBEDDEDRIGHTSEXPR | Prohibited, see iptcExt:EmbdEncRightsExpr |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEELINKEDRIGHTSEXPR | Prohibited, see iptcExt:LinkedEncRightsExpr |

A corresponding new property “Other Constraints” has also been added to Video Metadata Hub v1.5. This property allows plain-text human-readable constraints to be placed on the video when using the “Prohibited, see plus:OtherConstraints” value of the Data Mining property.
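To illustrate how a crawler might act on these values, here is a rough Python sketch. The grouping into three outcomes and the function name `genai_training_decision` are assumptions made for this example, not something defined by IPTC or PLUS; in particular, treating a missing property like "Unspecified" and sending unknown values to manual review are just one cautious reading.

```python
# Description strings copied from the list of Data Mining values above.
NO_PROHIBITION = {
    "Unspecified – no prohibition defined",
    "Allowed",
}
PROHIBITED_FOR_GENAI = {
    "Prohibited for AI/ML training",
    "Prohibited for Generative AI/ML training",
    "Prohibited except for search engine indexing",
    "Prohibited",
}
# These values point to another property that has to be read first.
SEE_OTHER_PROPERTY = {
    "Prohibited, see plus:OtherConstraints",
    "Prohibited, see iptcExt:EmbdEncRightsExpr",
    "Prohibited, see iptcExt:LinkedEncRightsExpr",
}


def genai_training_decision(data_mining_value):
    """Classify a Data Mining value for a generative AI training crawler.

    Returns 'no-prohibition', 'prohibited' or 'check-constraints'.
    Assumption: a missing property (None) is handled like 'Unspecified'.
    """
    if data_mining_value is None or data_mining_value in NO_PROHIBITION:
        return "no-prohibition"
    if data_mining_value in PROHIBITED_FOR_GENAI:
        return "prohibited"
    # Covers the 'see ...' values and, conservatively, anything unrecognised.
    return "check-constraints"


if __name__ == "__main__":
    for value in sorted(NO_PROHIBITION | PROHIBITED_FOR_GENAI | SEE_OTHER_PROPERTY):
        print(f"{value!r}: {genai_training_decision(value)}")
```

A real system would also need to read the referenced Other Constraints text or rights expression before deciding on the "check-constraints" cases.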

The Video Metadata Hub User Guide and Video Metadata Hub Generator have also been updated to include the new Data Mining property added in version 1.5.

We look forward to seeing video tools (and particularly crawling engines for generative AI training systems) implement the new properties.

Please feel free to discuss the new version of Video Metadata Hub on the public iptc-videometadata discussion group, or contact IPTC via the Contact us form.


![Preserving metadata tags for AI-generated images in Merchant Center

February 2024

If you’re using AI-generated images in Merchant Center, Google requires that you preserve any metadata tags which indicate that the image was created using generative AI in the original image file.

Don't remove embedded metadata tags such as trainedAlgorithmicMedia from such images. All AI-generated images must contain the IPTC DigitalSourceType trainedAlgorithmicMedia tag. Learn more about IPTC photo metadata.

These requirements apply to the following image attributes in Merchant Center Classic and Merchant Center Next:

Image link [image_link]

Additional image link [additional_image_link]

Lifestyle image link [lifestyle_image_link]

Learn more about product data specifications.](https://iptc.org/wp-content/uploads/2024/02/Screenshot-2024-02-20-at-09.56.52-1024x724.png)