The IPTC has signed a liaison agreement with the Japanese camera-makers organisation and creators of the Exif metadata standard, CIPA.

CIPA's members include all of the major camera manufacturers, such as Nikon, Canon, Sony, Panasonic and FUJIFILM. Several software vendors who work with imaging are also members, among them Adobe, Apple and Microsoft.

CIPA publishes guidelines and standards for camera manufacturers and imaging software developers. The most important of these from an IPTC point of view is the Exif standard for photographic metadata.

The IPTC and CIPA have had an informal relationship for many years, staying in touch regularly regarding developments in the world of image metadata. Given that the two organisations manage two of the most important standards for embedding metadata into image and video files, it’s important that we keep each other up to date.

Now the relationship has been formalised, meaning that the organisations can request to observe each other’s meetings, exchange members-only information when needed, and share information about forthcoming developments and industry requirements for new work in the field of media metadata and in related areas.

The news has also been announced by CIPA. According to the news post on CIPA’s website, “CIPA has signed a liaison agreement regarding the development of technical standards for metadata attached to captured image with International Press Telecommunications Council (IPTC), the international organization consists of the world’s leading news agencies, publishers and industry vendors.”

The Internet Architecture Board (IAB), a Committee of the Internet Engineering Task Force (IETF) which decides on standards and protocols that are used to govern the workings of Internet infrastructure, is having a workshop in September on “AI Control”. Discussions will include whether one or more new IETF standards should be defined to govern how AI systems work with Internet content.

As part of the lead-up to this workshop, the IAB and IETF have put out a call for position papers on AI opt-out techniques.

Accordingly, the IPTC Photo Metadata Working Group, in association with partner organisation the PLUS Coalition, submitted a position paper discussing in particular the Data Mining property which was added to the IPTC Photo Metadata Standard last year.

In the paper, the IPTC and PLUS set out their position that data mining opt-out information embedded in the metadata of media files is an essential part of any opt-out solution.

Here is a relevant section of the IPTC submission:

We respectfully suggest that Robots.txt alone is not a viable solution. Robots.txt may allow for communication of rights information applicable to all image assets on a website, or within a web directory, or on specific web pages. However, it is not an efficient method for communicating rights information for individual image files published to a web platform or website; as rights information typically varies from image to image, and as the publication of images to websites is increasingly dynamic.

In addition, the use of robots.txt requires that each user agent must be blocked separately, repeating all exclusions for each AI engine crawler robot. As a result, agents can only be blocked retrospectively — after they have already indexed a site once. This requires that publishers must constantly check their server logs, to search for new user agents crawling their data, and to identify and block bad actors.

In contrast, embedding rights declaration metadata directly into image and video files provides media-specific rights information, protecting images and video resources whether the site/page structure is preserved by crawlers — or the image files are scraped and separated from the original page/site. The owner, distributor, or publisher of an image can embed a coded signal into each image file, allowing downstream systems to read the embedded XMP metadata and to use that information to sort/categorise images and to comply with applicable permissions, prohibitions and constraints.

IPTC, PLUS and XMP metadata standards have been widely adopted and are broadly supported by software developers, as well as in use by major news media, search engines, and publishers for exchanging images in a workflow as part of an “operational best practice.” For example, Google Images currently uses a number of the existing IPTC and PLUS properties to signal ownership, licensor contact info and copyright. For details see https://iptc.org/standards/photo-metadata/quick-guide-to-iptc-photo-metadata-and-google-images/
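As a rough illustration of the per-agent limitation described in the submission, the following Python sketch uses the standard library's `urllib.robotparser`. The robots.txt content, the crawler names `ExampleAIBot` and `UnlistedBot`, and the example.com URL are all hypothetical and are not taken from the paper.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks one named AI crawler.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /
"""


def allowed(agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt lets the given user agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


IMAGE_URL = "https://example.com/images/photo.jpg"

# The named crawler is blocked, but a crawler the publisher has not yet
# listed is still allowed until its own rule is added.
print(allowed("ExampleAIBot", IMAGE_URL))
print(allowed("UnlistedBot", IMAGE_URL))
```

The sketch only models site-level rules. It says nothing about the rights attached to an individual image, which is the gap that embedded metadata is intended to fill.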

The paper in PDF format can be downloaded from the IPTC site.

Thanks to David Riecks, Margaret Warren and Michael Steidl from the IPTC Photo Metadata Working Group and to Jeff Sedlik from PLUS for their work on the paper.

A few months in, things are going very well for the IPTC’s new Media Provenance Committee.

The IPTC Committee was initiated to continue the work started by Project Origin to bring the benefits of C2PA provenance technology to the news media industry. The Committee is chaired by Bruce MacCormack of CBC / Radio Canada.

The Committee has initiated three Working Groups, each of which will look at specific issues:

- The Provenance Governance Working Group, led by Charlie Halford of the BBC.

- The Provenance Best Practices and Implementation Working Group, led by Helge O. Svela of Media City Bergen.

- The Provenance Advocacy and Education Working Group, led by Judy Parnall of the BBC.

We have also started the process of onboarding participants for the next phase of the Origin Verified News Publishers List, and have had several organisations already apply.

The first publishers on the list, BBC and CBC/Radio Canada, have already published some C2PA-signed content:

- The BBC has published some images and video with C2PA-embedded metadata showing the fact-checking process that has been undertaken by the BBC Verify fact-checking team.

- CBC / Radio Canada has published images signed with the CBC certificate on the cbc.ca website.

We are planning several events in the future to promote our work and encourage more in the media industry to get involved. Look out for news about IPTC Media Provenance work at the IBC Conference in Amsterdam in September and at other events.

If your organisation would like to be added to the list in the next phase or in the future, please get in touch!

The International Press Telecommunications Council, in conjunction with Project Origin, has established a working group to create and manage a C2PA compatible list of verified news publishers.

The open C2PA 2.0 Content Credentials standard for media provenance is widely supported as a strong defence against misinformation. Recent announcements by OpenAI, Meta, Google and others have confirmed the value of an interoperable, tamper-evident way of confirming the source and technical integrity of digital media content.

Project Origin, as a co-founder of the C2PA, has brought the needs of the news publishing community to the forefront of the creation of this standard. This now includes the creation of a C2PA 2.0 compatible Origin Verified Publisher Certificate to be used by publishers to securely create a cryptographic seal on their content. The signing certificates will be available through the IPTC, who will work with C2PA validators to gain widespread acceptance. These signing certificates will be issued by the IPTC to broadcast, print and digital native media publishers.

Origin Verified Publisher Certificates will ensure that the identity of established news organisations is protected from imposters. The certificates confirm organisational identity and do not make any judgement on editorial position. Liaison agreements with other groups in the media ecosystem will be used to accelerate the distribution of certificates.

The initial implementation uses TruePic as a certificate authority, with the BBC and CBC/Radio-Canada as trial participants.

“As a founding partner of Project Origin, CBC/Radio-Canada is proud to be one of the first media organisations to trial Origin Verified Publisher Certificates,” said Claude Galipeau, Executive Vice-President, Corporate Development, CBC/Radio-Canada. “This initiative will provide our audiences with a new and easy way of confirming that the content they’re consuming is legitimately from Canada’s national public broadcaster. It’s an important step in our adoption of the Content Credentials standard and in our fight against misinformation and disinformation.”

Jatin Aythora, Director of BBC R&D, and vice chair for Partnership on AI, said “Media provenance increases trust and transparency in news, and so is an essential tool in the fight against disinformation. That fight has never been more important, and so we hope many more media organisations will join us in securing their own Origin Verified Publisher Certificate.”

Publishers interested in working cooperatively to advance the implementation of the C2PA standard in the news ecosystem are invited to join the Media Provenance Committee of the IPTC.

For further information please contact:

- Judy Parnall – judy.parnall@bbc.co.uk – representing the BBC

- Bruce MacCormack – bruce@neuraltransform.com – representing CBC/Radio-Canada

- Brendan Quinn – mdirector@iptc.org – representing the IPTC

The IPTC Photo Metadata Working Group has updated the IPTC Photo Metadata User Guide, including guidance for accessibility and for tagging AI-generated images with metadata.

The updates to the User Guide are across several areas:

- A guide to using the accessibility fields added in IPTC Photo Metadata Standard version 2021.1 (Alt Text (Accessibility) and Extended Description (Accessibility)) has been added

- A new section with guidance for applying metadata to AI-generated images has been added

- Guides for new fields added: Event Identifier, Product/Identifier, Contributor, Data Mining

- The Metadata Usage Examples section has been updated to reflect some of the recently-added fields

- The guidance on fields and topics has generally been reviewed and updated

Please let us know if you spot any other areas of the user guide that should be updated or if you have suggestions for more guidance that we could give.

After many years of working together in various areas related to media metadata, IPTC, the global technical standards body of the news media, today announces that Google LLC has joined IPTC as a Voting Member.

As a Voting Member, Google will take part in all decisions regarding IPTC standards and delegates will contribute to shaping the standards as they evolve. This important work will happen alongside IPTC’s 26 other Voting Member companies.

“Google has worked with IPTC standards for many years, so it is great to see them join IPTC so that they can take part in shaping those standards in the future,” said Robert Schmidt-Nia of DATAGROUP, Chair of the Board of IPTC. “We look forward to working together with Google on our shared goals of making information usable and accessible.”

“Google has a long history of working with the IPTC, and we are very happy to now have joined the organization,” Anna Dickson, Product Manager at Google, said. “Joining aligns with our efforts to help provide more information and context to people online. We think this is critical to increasing trust in the digital ecosystem as AI becomes more ubiquitous.”

Google’s work together with IPTC started back in 2010 when schema.org, a joint project managed by Google on behalf of search engines, adopted IPTC’s rNews schema as the basis for schema.org’s news properties such as NewsArticle and CreativeWork. In 2016, the IPTC was a recipient of a Google News Initiative grant to develop the EXTRA rules-based metadata classification engine.

Google staff spoke at the Photo Metadata Conference (co-hosted with CEPIC) in 2018, which led to Google and the IPTC working together (along with CEPIC) on adding support for copyright, credit and licensing information in Google image search results. This work has since been extended to include support for the Digital Source Type property, which will now be used to signal content created by Generative AI engines.

The latest update to IPTC NewsCodes, the 2024-Q1 release, was published on Thursday 28th March.

This release includes many updates to our Media Topic subject vocabulary, plus changes to Content Production Party Role, Horse Position, Tournament Phase, Soccer Position, Genre, User Action Type and Why Present.

UPDATE on 11 April: we released a small update to the Media Topics, including Norwegian (no-NB and no-NN) translations of the newly added terms, thanks to Norwegian news agency NTB.

We also made one label change in German: medtop:20000257 from “Alternative-Energie” to “Erneuerbare Energie”. This change was made at the request of German news agency dpa.
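For readers unfamiliar with identifiers such as medtop:20000257, the sketch below shows one way a QCode (a scheme alias, a colon, then a code) could be expanded into a full concept URI. It assumes that the `medtop` alias maps to the scheme URI `http://cv.iptc.org/newscodes/mediatopic/`; the `CATALOG` dictionary and the `qcode_to_uri` helper are hypothetical names used only for illustration.

```python
# Assumed catalog: scheme alias -> scheme URI. Only the "medtop" entry is
# filled in here; a real catalog would list many more schemes.
CATALOG = {"medtop": "http://cv.iptc.org/newscodes/mediatopic/"}


def qcode_to_uri(qcode: str, catalog: dict = CATALOG) -> str:
    """Expand a QCode of the form 'alias:code' into a full concept URI."""
    alias, sep, code = qcode.partition(":")
    if not sep or not alias or not code:
        raise ValueError(f"not a QCode: {qcode!r}")
    if alias not in catalog:
        raise KeyError(f"unknown scheme alias: {alias!r}")
    # The concept URI is the scheme URI with the code appended.
    return catalog[alias] + code


print(qcode_to_uri("medtop:20000257"))
```

Because only the label changed, the identifier stays the same, so content already tagged with it should not need to be re-tagged.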

Changes to Media Topics vocabulary

As part of the regular review undertaken by the NewsCodes Working Group, many changes were made to the economy, business and finance branch of Media Topics. In addition, a number of changes were made to the conflict, war and peace branch in response to suggestions made by new IPTC member ABC Australia.

5 new concepts: sustainability, profit sharing, corporate bond, war victims, missing in action.

12 retired concepts: justice, restructuring and recapitalisation, bonds, budgets and budgeting, consumers, consumer issue, credit and debt, economic indicator, government aid, investments, prices, soft commodities market.

55 modified concepts: peacekeeping force, genocide, disarmament, prisoners of war, war crime, judge, economy, economic trends and indicators, business enterprise, central bank, consumer confidence, currency, deflation, economic growth, gross domestic product, industrial production, inventories, productivity, economic organisation, emerging market, employment statistics, exporting, government debt, importing, inflation, interest rates, international economic institution, international trade, trade agreements, balance of trade, trade dispute, trade policy, monetary policy, mortgages, mutual funds, recession, tariff, market and exchange, commodities market, energy market, debt market, foreign exchange market, loan market, loans and lending, study of law, disabilities, mountaineering, sport shooting, sport organisation, recreational hiking and climbing, start-up and entrepreneurial business, sharing economy, small and medium enterprise, sports officiating, bmx freestyle.

48 concepts with modified names/labels: judge, emergency incident, transport incident, air and space incident, maritime incident, railway incident, road incident, restructuring and recapitalisation, economic trends and indicators, exporting, importing, interest rates, balance of trade, mortgages, commodities market, soft commodities market, loans and lending, study of law, disabilities, mountain climbing, mountaineering, sport shooting, sport organisation, recreational hiking and climbing, start-up and entrepreneurial business, sports officiating, bmx freestyle, tsunami, healthcare industry, developmental disorder, depression, anxiety and stress, public health, pregnancy and childbirth, fraternal and community group, cyber warfare, public transport, taxi and ride-hailing, shared transport, business reporting and performance, business restructuring, commercial real estate, residential real estate, podcast, financial service, business service, news industry, diversity, equity and inclusion.

57 modified definitions: war crime, economy, economic trends and indicators, business enterprise, central bank, consumer confidence, currency, deflation, economic growth, economic organisation, emerging market, employment statistics, exporting, government debt, importing, inflation, interest rates, international economic institution, trade agreements, trade dispute, trade policy, mortgages, recession, tariff, market and exchange, commodities market, energy market, soft commodities market, debt market, foreign exchange market, loan market, loans and lending, disabilities, mountaineering, sport organisation, start-up and entrepreneurial business, sharing economy, small and medium enterprise, tsunami, healthcare industry, developmental disorder, depression, anxiety and stress, public health, pregnancy and childbirth, cyber warfare, public transport, taxi and ride-hailing, shared transport, business reporting and performance, business restructuring, commercial real estate, residential real estate, podcast, financial service, business service, news industry.

22 modified broader terms (hierarchy moves): peacekeeping force, genocide, disarmament, prisoners of war, business enterprise, central bank, consumer confidence, currency, gross domestic product, industrial production, inventories, productivity, economic organisation, emerging market, interest rates, international economic institution, international trade, monetary policy, mutual funds, tariff, loans and lending, bmx freestyle.

These changes are already available in the en-GB, en-US and Swedish (se) language variants. Thanks go to TT and Bonnier News for their work on the Swedish translation.

If you would like to contribute or update a translation to your language, please contact us.

Sports-related NewsCodes updates

We also made some changes to our sports NewsCodes vocabularies, which are mostly used by SportsML and IPTC Sport Schema.

New vocabulary: Horse Position

New entries in Tournament Phase vocabulary: Heat, Round of 16

New entry in Soccer Position: manager

News-related NewsCodes updates

Content Production Party Role: new term Generative AI Prompt Writer which can also be used in Photo Metadata Contributor to declare who wrote the prompt that was used to generate an image.

Genre: new term User-Generated Content.

Why Present: new term associated.

The User Action Type vocabulary, mostly used by NewsML-G2, has had some major changes.

Previously this vocabulary defined terms related to specific social media services or interactions. We have retired/deprecated all site-specific terms (Facebook Likes, Google’s +1, Twitter re-tweets, Twitter tweets).

Instead, we have defined some generic terms: Like, Share, Comment. The pageviews term has been broadened into simply views (although the ID remains as “pageviews” for backwards-compatibility).

Thanks to the NewsCodes Working Group for their work on this release, and to all members and non-members who have suggested changes.

The IPTC News Architecture Working Group is happy to announce that the NewsML-G2 Guidelines and NewsML-G2 Specification documents have been updated to align with version 2.33 of NewsML-G2, which was approved in October 2023.

The changes include:

Specification changes:

- Adding authoritystatus and digitalsourcetype, which were introduced in NewsML-G2 versions 2.32 and 2.33 respectively

- Clarification on how @uri, @qcode and @literal attributes should be treated throughout

- Clarification on how roles should be added to infosource element when an entity plays more than one role

- Clarifying and improving cross-references and links throughout the document

Guidelines changes:

- Documentation of the Authority Status attribute and its related vocabulary, added in version 2.32

- Documentation of the Digital Source Type element and its related vocabulary, added in version 2.33

- Clarification on how @uri, @qcode and @literal attributes should be treated throughout

- Clarifying and improving cross-references and links throughout the document

- Improved the additional resources section including links to related IPTC standards and added links to the SportsML-G2 Guidelines

- See the What’s New in NewsML-G2 2.32 and 2.33 section for full details.

We always welcome feedback on our specification and guideline documents: please use the Contact Us form to ask for clarifications or suggest changes.

The IPTC is happy to announce the latest version of our guidance for mapping between photo metadata standards.

Following our publication of IPTC’s rules for mapping photo metadata between IPTC, Exif and schema.org standards in 2022, the IPTC Photo Metadata Working Group has been monitoring updates in the photo metadata world.

In particular, the IPTC gave support and advice to CIPA while it was working on Exif 3.0 and we have updated our mapping rules to work with the latest changes to Exif expressed in Exif 3.0.

As well as guidelines for mapping individual properties between the IPTC Photo Metadata Standard (in both the older IIM form and the newer XMP embedding format), Exif and schema.org, we have included some notes on particular considerations for mapping contributor, copyright notice, dates and IDs.

The IPTC encourages all developers who previously consulted the out-of-date Metadata Working Group guidelines (which haven’t been updated since 2008 and are no longer published) to use this guide instead.

We at IPTC receive many requests for help and advice regarding editing embedded photo and video metadata, and this has only increased with the recent news about the IPTC Digital Source Type property being used to identify content created by a generative AI engine.

In response, we have created some guidance: Developers’ and power users’ guide to reading and writing IPTC Photo Metadata

This takes the form of a wiki, so that it can be easily maintained and extended with more information and examples.

In its initial form, the documentation focuses on:

- Using the ExifTool command-line tool to read and write IPTC Photo Metadata. ExifTool is commonly used by developers and power users to explore embedded metadata;

- Reading and writing image metadata in Python using the pyexiftool module;

- Reading and writing metadata in JavaScript (or TypeScript) using the node-exiftool module.

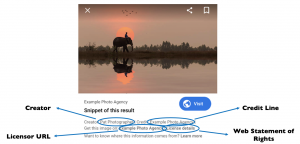

In each guide, we advise on how to read and create DigitalSourceType metadata for generative AI images, and also how to read and write the Creator, Credit Line, Web Statement of Rights and Licensor information that is currently used by Google image search to expose copyright information alongside search results.
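To give a flavour of what such a workflow might look like, here is a minimal Python sketch that only builds an ExifTool command line for the fields mentioned above; it does not run ExifTool itself. The helper `build_exiftool_args`, the file name and all field values are hypothetical, and the tag names follow ExifTool's XMP naming as we understand it, so they should be checked against the guide before use.

```python
# IPTC Digital Source Type value for media created by a generative AI model.
GENERATIVE_AI = (
    "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
)


def build_exiftool_args(image_path, creator, credit_line,
                        web_statement, licensor_name, licensor_url):
    """Build an ExifTool argument list that writes the given XMP fields."""
    # Assumed ExifTool tag names; verify against the IPTC guide.
    tags = {
        "XMP-iptcExt:DigitalSourceType": GENERATIVE_AI,
        "XMP-dc:Creator": creator,
        "XMP-photoshop:Credit": credit_line,
        "XMP-xmpRights:WebStatement": web_statement,
        "XMP-plus:LicensorName": licensor_name,
        "XMP-plus:LicensorURL": licensor_url,
    }
    args = ["exiftool"]
    args += [f"-{tag}={value}" for tag, value in tags.items()]
    args.append(image_path)
    return args


example_args = build_exiftool_args(
    "generated.jpg",                      # hypothetical file
    creator="Example Studio",
    credit_line="Example Studio / Example Agency",
    web_statement="https://example.com/rights",
    licensor_name="Example Agency",
    licensor_url="https://example.com/licensing",
)
print(" ".join(example_args))
```

If ExifTool is installed, the resulting list could be passed to `subprocess.run(example_args, check=True)`; the pyexiftool module offers a more direct interface for the same job.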

We hope that these guides will help to demystify image metadata and encourage more developers to include more metadata in their image editing and publishing workflows.

We will add more guidance over the coming months in more programming languages, libraries and frameworks. Of particular interest are guides to reading and writing IPTC Photo Metadata in PHP, C and Rust.

Contributions and feedback are welcome. Please contact us if you are interested in contributing.