… the image business in a changing environment

By Sarah Saunders

The web is a Wild West environment for images, with unauthorised uses on a massive scale, and a perception by many users that copyright is no longer relevant. So what is a Smart Photo in this environment? The IPTC Photo Metadata Conference 2018 addressed the challenges for the photo industry and looked at some of the solutions.

Isabel Doran, Chair of UK image library association BAPLA, kicked off the conference with some hard facts. The use of images – our images – has created multibillion dollar industries for social media platforms and search engines, while revenues for the creative industry are diminishing in an alarming way. It has long been said that creators are the last to benefit from use of their work; the reality now is that creators and their agents are in danger of being squeezed out altogether.

Take this real example of image use: An image library licenses an image of a home interior to a company for use on their website. The image is right-click downloaded from the company’s site, and uploaded to a social media platform. From there it is picked up by a commercial blog which licenses the image to a US real estate newsfeed – without permission. Businesses make money from online advertising, but the image library and photographer receive nothing. The image is not credited and there is no link to the site that licensed the image legitimately, or to the supplier agency, or to the photographer.

Social media platforms encourage sharing and deep linking (where an image is shown through a link back to the social media platform where the image is posted, so is not strictly copied). Many users believe they can use images found on the web for free in any way they choose. The link to the creator is lost, and infringements, where found, are hard to pursue with social media platforms.

Tracking and enforcement – a challenge

The standard procedure for tracking and enforcement involves upload of images to the site of a service provider, which maintains a ‘registry’ of identified images (often using invisible watermarks) and runs automated matches to images on the web to identify unauthorised uses. After licensed images have been identified, the image provider has to decide how to enforce their rights for unauthorised uses in what can only be called a hostile environment. How can the tracking and copyright enforcement processes be made affordable for challenged image businesses, and who is responsible for the cost?

The Copyright Hub was created by the UK Government and now creates enabling technologies to protect copyright and encourage easier content licensing in the digital environment. Caroline Boyd from Copyright Hub demonstrated the use of the Hub copyright icon for online images. Using the icon promotes copyright awareness, and the user can click on the icon for more information on image use and links back to the creator. Creating the icon involves adding a Hub Key to the image metadata. Abbie Enock, CEO of software company Capture and a board member of the Copyright Hub, showed how image management software can incorporate this process seamlessly into the workflow. The cost to the user should be minimal, depending on the software they are using.

Publishers can display the icon on images licensed for their web site, allowing users to find the creator without the involvement of – and risk to – the publisher.

Meanwhile, suppliers are working hard to create tracking and enforcement systems. We heard from Imatag, Copytrack, PIXRAY and Stockfood who produce solutions that include tracking and watermarking, legal enforcement and follow up.

Design follows devices

Images are increasingly viewed on phones and tablets as well as computers. Karl Csoknyay from Keystone-SDA spoke about responsive design and the challenges of designing interfaces for all environments. He argued that it is better to work from simple to complex, starting with design for the smartphone interface, and offering the same (simple) feature set for all environments.

Smart search engines and smart photos

Use of images in search engines was one of the big topics of the day, with Google running its own workshop as well as appearing in the IPTC afternoon workshop along with the French search engine QWANT.

Image search engines ‘scrape’ images from web sites for use in their image searches and display them in preview sizes. Sharing is encouraged, and original links are soon lost as images pass from one web site to the next.

CEPIC has been in discussion with Google for some time, and some improvements have been made, with general copyright notices more prominently placed, but there is still a way to go. The IPTC conference and Google workshop were useful, with comments from the floor stressing the damage done to photo businesses by use of images in search engines.

Attendees asked if IPTC metadata could be picked up and displayed by search engines. We at IPTC know the technology is possible; so the issue is one of will. Google appears to be taking the issue seriously. By their own admission, it is now in their interest to do so.

Google uses imagery to direct users to other non-image results, searching through images rather than for images. Users searching for ‘best Indian restaurant’ for example are more likely to be attracted to click through by sumptuous images than by dry text. Google wants to ‘drive high quality traffic to the web ecosystem’ and visual search plays an important part in that. Their aim is to operate in a ‘healthy image ecosystem’ which recognises the rights of creators. More dialogue is planned.

Search engines could drive the use of rights metadata

The fact that so few images on the web have embedded metadata (3% have copyright metadata according to a survey by Imatag) is sad but understandable. If search engines were to display the data, there is no doubt that creators and agents would press their software providers and customers to retain the data rather than stripping it, which again would encourage greater uptake. Professional photographers generally supply images with IPTC metadata; to strip or ignore copyright data of this kind is the greatest folly. Google, despite initial scepticism, has agreed to look at the possibilities offered by IPTC data, together with CEPIC and IPTC. That could represent a huge step forward for the industry.
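As a rough illustration of what "embedded copyright metadata" means in practice, the sketch below checks whether a file's bytes still contain an XMP packet with a non-empty `dc:rights` property, which is where XMP stores the copyright notice. It is a crude byte scan under stated assumptions, not a full metadata parser: it assumes the conventional `x:` and `dc:` namespace prefixes, it ignores the older IPTC IIM record, and the function name `has_embedded_copyright` is made up for this example.

```python
import re

# An XMP packet is plain XML embedded in the image file, so a byte-level
# search can locate it without decoding the image itself.
XMP_PACKET = re.compile(rb"<x:xmpmeta\b.*?</x:xmpmeta>", re.DOTALL)
DC_RIGHTS = re.compile(rb"<dc:rights\b.*?</dc:rights>", re.DOTALL)
ANY_TAG = re.compile(rb"<[^>]+>")


def has_embedded_copyright(data: bytes) -> bool:
    """Return True if the bytes contain an XMP packet with non-empty dc:rights."""
    packet = XMP_PACKET.search(data)
    if packet is None:
        return False  # no XMP at all, e.g. stripped on upload
    rights = DC_RIGHTS.search(packet.group(0))
    if rights is None:
        return False  # XMP present, but no copyright notice
    # Drop the XML tags and see whether any text is left.
    text = ANY_TAG.sub(b"", rights.group(0)).strip()
    return bool(text)
```

Running a check like this before and after an image passes through a platform is one simple way to see whether that hop stripped the rights data.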

As Isabel Doran pointed out, there is no one single solution which can stand on its own. For creators to benefit from their work, a network of affordable solutions needs to be built up; awareness of copyright needs support from governments and legal systems; social media platforms and search engines need to play their part in upholding rights.

Blueprints for the Smart Photo are out there; the Smart Photo will be easy to use and license, and will discourage freeloaders. Now’s the time to push for change.

By Jennifer Parrucci

Senior Taxonomist at The New York Times

Lead of IPTC’s NewsCodes Working Group

The New York Times has a proud history of metadata. Every article published since The Times’s inception in 1851 contains descriptive metadata. The Times continues this tradition by incorporating metadata assignment into our publishing process today so that we can tag content in real-time and deliver key services to our readers and internal business clients.

I shared an overview of The Times’s tagging process at a recent conference held by the International Press Telecommunications Council in Barcelona. One of the purposes of IPTC’s face-to-face meetings is for members and prospective members to gain insight on how other member organizations categorize content, as well as handle new challenges as they relate to metadata in the news industry.

Why does The New York Times tag content today?

The Times doesn’t tag content just for tradition’s sake. Tags play an important role in today’s newsroom. Tags are used to create collections of content and send out alerts on specific topics. In addition, tags help boost relevance on our site search and send a signal to external search engines, as well as inform content recommendations for readers. Tags are also used for tracking newsroom coverage, archive discovery, advertising and syndication.

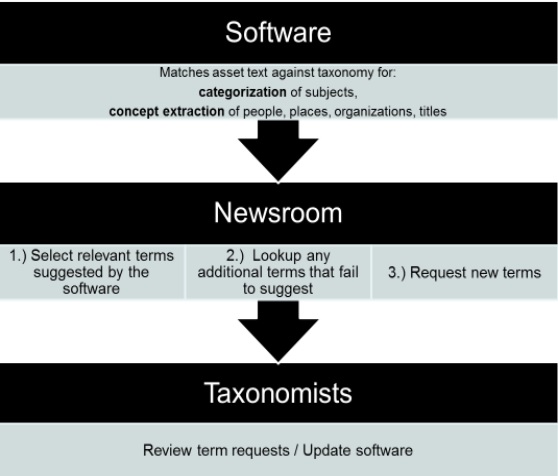

How does The New York Times tag content?

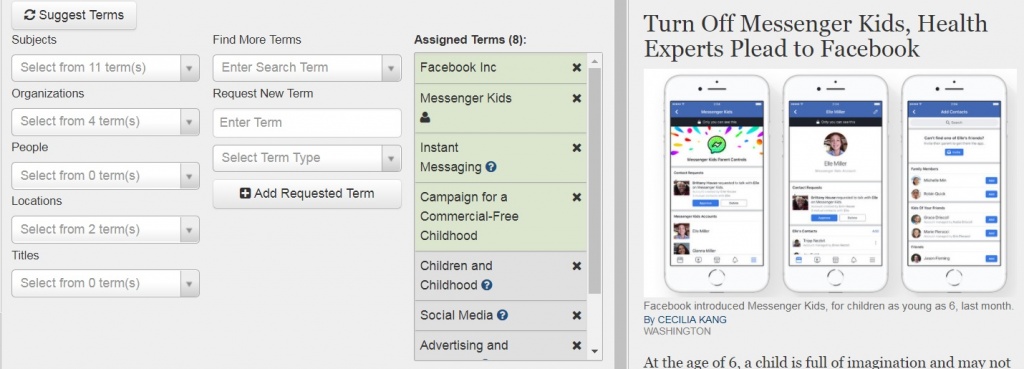

The Times employs rules-based categorization, rather than purely statistical tagging or hand tagging, to assign metadata to all published content, including articles, videos, slideshows and interactive features.

Rules-based classification involves the use of software that parses customized rules that look at text and suggest tags based on how well they match the conditions of those rules. These rules might take into account things like the frequency of words or phrases in an asset; the position of words or phrases, for example whether a phrase appears in the headline or lead paragraph; a combination of words appearing in the same sentence; or a minimum number of names or phrases associated with a subject appearing in an asset.
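To make the idea concrete, here is a minimal sketch of a rule that scores an asset by how often its phrases occur and where they occur. It is not The Times's software: the `Rule` class, the weights and the threshold are all hypothetical values chosen for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Rule:
    """A hypothetical rule: a tag plus the phrases that count as evidence for it."""
    tag: str
    phrases: list
    headline_weight: float = 3.0  # a match in the headline counts most
    lead_weight: float = 2.0      # a match in the lead paragraph counts more than body text
    body_weight: float = 1.0
    threshold: float = 3.0        # minimum score before the tag is suggested


def count_phrase(phrase: str, text: str) -> int:
    """Count case-insensitive whole-phrase occurrences of `phrase` in `text`."""
    pattern = r"\b" + re.escape(phrase) + r"\b"
    return len(re.findall(pattern, text, flags=re.IGNORECASE))


def score(rule: Rule, headline: str, lead: str, body: str) -> float:
    """Combine frequency and position into a single relevancy score."""
    total = 0.0
    for phrase in rule.phrases:
        total += rule.headline_weight * count_phrase(phrase, headline)
        total += rule.lead_weight * count_phrase(phrase, lead)
        total += rule.body_weight * count_phrase(phrase, body)
    return total


def suggest_tags(rules, headline: str, lead: str, body: str):
    """Return (tag, score) pairs that clear their rule's threshold, best first."""
    suggestions = []
    for rule in rules:
        s = score(rule, headline, lead, body)
        if s >= rule.threshold:
            suggestions.append((rule.tag, s))
    return sorted(suggestions, key=lambda pair: pair[1], reverse=True)
```

Because every score is a sum of visible weights and counts, it is easy to trace why a given tag was or was not suggested.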

Unlike many other publications that use rules-based classification, The Times adds a layer of human supervision to tagging. While the software suggests the relevant subject terms and entities, the metadata is not assigned to the article until someone in the newsroom selects and assigns tags from that list of suggestions to an asset.

Why does The Times use rules-based and human supervised tagging?

This method of tagging allows for more transparency in rule writing to see why a rule has or has not matched. Additionally it gives the ability to customize rules based on patterns specific to our publication. For example, The Times has a specific style for obituaries, whereby the first sentence usually states someone died, followed by a short sentence stating his or her age. This language pattern can be included in the rule to increase the likelihood of obituaries matching with the term “Deaths (Obituaries).” Rules-based classification also allows for the creation of tags without needing to train a system. This option allows taxonomists to create rules for low-frequency topics and breaking news, for which sufficient content to train the system is lacking.

These rules can then be updated and modified as a topic or story changes and develops. Additionally, giving the newsroom rule suggestions and a controlled vocabulary to choose from ensures a greater consistency in tagging, while the human supervision of the tagging ensures quality.
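The obituary example can be sketched as a pattern match on the lead paragraph. The regular expression below is an assumption about how such a pattern might be encoded, not the rule The Times actually uses: it looks for a first sentence containing "died", followed directly by a short sentence giving an age.

```python
import re

# Hypothetical pattern: the first sentence mentions "died", and the very next
# sentence is a short statement of age such as "She was 87."
OBITUARY_PATTERN = re.compile(
    r"^[^.]*\bdied\b[^.]*\.\s+(?:He|She|They)\s+(?:was|were)\s+\d{1,3}\."
)


def looks_like_obituary(lead_paragraph: str) -> bool:
    """Return True if the lead paragraph follows the assumed obituary style."""
    return OBITUARY_PATTERN.search(lead_paragraph.strip()) is not None
```

A real rule would need to handle abbreviations containing full stops (for example "Dr.") and other phrasings; this sketch treats the first full stop as the end of the first sentence.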

What does the tagging process at The New York Times look like?

Once an asset (an article, slideshow, video or interactive feature) is created in the content management system, the categorization software is called. This software runs the text against the rules for subjects and then through the rules for entities (proper nouns). Once this process is complete, editors are presented with suggestions for each term type within our schema: subjects, organizations, people, locations and titles of creative works. The subject suggestions also contain a relevancy score. The editor can then choose tags from these suggestions to be assigned to an article. If they do not see a tag that they know is in the vocabulary suggested to them, the editors have the option to search for that term within the vocabulary. If there are new entities in the news, the editors can request that they be added as new terms. Once the article is published/republished the tags chosen from the vocabulary are assigned to the article and the requested terms are sent to the Taxonomy Team.

The Taxonomy Team receives all of the tag requests from the newsroom in a daily report. Taxonomists review the suggestions and decide whether they should be added to the vocabulary, taking into account factors such as: news value, frequency of occurrence, and uniqueness of the term. If the verdict is yes, then the taxonomist creates a new entry for the tag in our internal taxonomy management tool and disambiguates the entry using Boolean rules. For example, there cannot be two entries both named “Adams, John” for the composer and the former United States president of the same name. To solve this, disambiguation rules are added so that the software knows which one to suggest based on context.

John Adams,_IF:{(OR,”composer”,”Nixon in China”,”opera”…)}::Adams, John (1947- )

John Adams,_IF:{(OR,”federalist”,”Hamilton”,”David McCullough”…)}:Adams, John (1735-1826)
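The logic of these two rules can be sketched as follows. This is an illustration of the idea only: it does not parse the rule syntax shown above, the `DisambiguationRule` class is hypothetical, and it uses just the context terms visible in the examples (the elided ones are left out).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DisambiguationRule:
    surface_form: str      # the name as it appears in the text
    context_terms: tuple   # OR-ed together: any one of them is enough
    vocabulary_entry: str  # the entry to suggest when the rule matches


RULES = [
    DisambiguationRule(
        "John Adams", ("composer", "Nixon in China", "opera"), "Adams, John (1947- )"
    ),
    DisambiguationRule(
        "John Adams", ("federalist", "Hamilton", "David McCullough"), "Adams, John (1735-1826)"
    ),
]


def suggest_entries(text: str, rules=RULES) -> list:
    """Suggest vocabulary entries whose name and at least one context term appear."""
    lowered = text.lower()
    suggestions = []
    for rule in rules:
        if rule.surface_form.lower() not in lowered:
            continue
        # Plain substring matching is a simplification: "opera" would also
        # match "operation". A production rule would match whole words.
        if any(term.lower() in lowered for term in rule.context_terms):
            suggestions.append(rule.vocabulary_entry)
    return suggestions
```

If the name appears without any of the context terms, nothing is suggested, which leaves the choice to the editor.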

Once all of these new terms are added into the system, the Taxonomy Team retags all assets with the new terms.

In addition to these term updates, taxonomists also review a selection of assets from the day for tagging quality. Taxonomists read the articles to identify whether the asset has all the necessary tags or has been over-tagged. The general rule is to tag the focus of the article and not everything mentioned. This method ensures that the tagging really gets to the heart of what the piece is about. When doing this review, taxonomists will notice subject terms that are either not suggesting or suggesting improperly. The taxonomist uses this opportunity to tweak the rules for that subject so that the software suggests the tag properly next time.

After this review of the tagging process at The New York Times, the Taxonomy Team compiles a daily report back to the newsroom that includes shoutouts for good tagging examples, tips for future tagging and a list of all the new term updates for that day. This email keeps the newsroom and the Taxonomy Team in contact and acts as a continuous training tool for the newsroom.

All of these procedures come together to ensure that The Times has a high quality of metadata upon which to deliver highly relevant, targeted content to readers.

Read more about taxonomy and IPTC standard Media Topics.

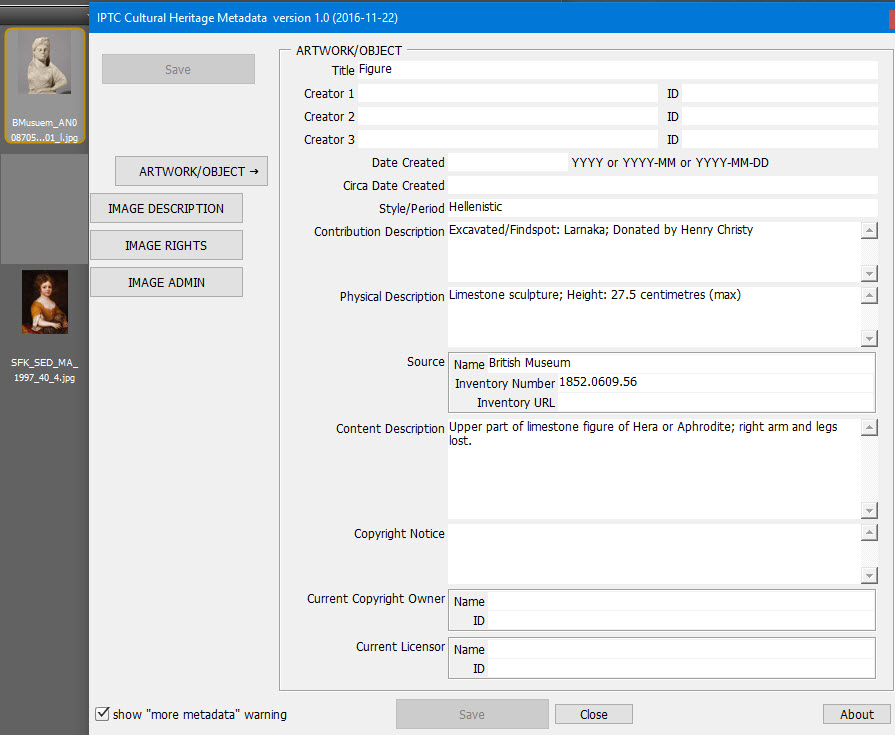

IPTC’s Photo Metadata Working Group has released the Cultural Heritage Panel plugin for Adobe Bridge, which focuses on fields relevant for images of artwork and other physical objects, such as artifacts, historical monuments, and books and manuscripts.

Sarah Saunders and Greg Reser, experts from the cultural heritage sector, conceived the IPTC Cultural Heritage Panel to address needs of the photo business and growing community of museums, art foundations, libraries, and archive organisations. Furthermore the panel fills a gap: Many imaging software products, including Bridge, do not support all metadata fields of the IPTC Photo Metadata Standard 2016 for artwork or objects.

The artwork or object fields – a special set of metadata fields developed by IPTC a few years ago – describe artworks and objects portrayed in the image (for example, a painting by Leonardo da Vinci). This means that descriptive and rights information about artworks or objects is recorded separately from information about the digital image in which they are shown. Multiple layers of rights and attribution can be expressed – copyright in the photo may be owned by a photographer or museum, while the copyright in the painting is owned by an artist or estate.
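The separation described above can be pictured as a nested data structure: one record for the digital image, containing zero or more records for the artworks or objects it shows. The sketch below uses simplified, hypothetical field names rather than the property names defined in the IPTC standard, and the example values are invented.

```python
from dataclasses import dataclass, field


@dataclass
class ArtworkOrObject:
    """Describes the thing portrayed in the image, not the image itself."""
    title: str
    creator: str
    copyright_notice: str


@dataclass
class ImageMetadata:
    """Describes the digital image; artworks shown in it are kept separately."""
    creator: str
    copyright_notice: str
    artworks: list = field(default_factory=list)


def rights_layers(image: ImageMetadata) -> list:
    """List every (layer, creator, copyright notice) expressed in the metadata."""
    layers = [("image", image.creator, image.copyright_notice)]
    for artwork in image.artworks:
        layers.append(("artwork", artwork.creator, artwork.copyright_notice))
    return layers


photo = ImageMetadata(
    creator="Example Photographer",
    copyright_notice="(c) Example Museum",
    artworks=[
        ArtworkOrObject(
            title="Example Painting",
            creator="Example Artist",
            copyright_notice="(c) Example Artist Estate",
        )
    ],
)
```

Calling `rights_layers(photo)` returns one entry for the photograph and one for the painting, so neither set of rights overwrites the other.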

The new plugin for Bridge (CC versions up to 2016 and CS6 were tested) allows people to view the image data, and write into these fields using a simple panel, which has been tailor-made for use in the heritage sector. The panel includes fields for artwork/object attributes and also relevant digital image rights.

“The Cultural Heritage Panel will be very useful for people working in the heritage sector in museums and archives,” said Saunders, a consultant specialising in digital imaging and archiving. “It allows them to manage and monitor data about objects and artworks that is embedded in the IPTC XMP fields in the image.”

The panel is especially helpful for small organisations without digital asset management systems, and large organisations with many individual contributors – all of whom may enter metadata into the standard fields using Adobe Bridge, said Reser, a metadata analyst for the University of California, San Diego, who wrote the JavaScript code for the project.

“The metadata can then be transferred into an organisation’s digital asset management system; the panel helps ease the ingest process,” Reser said.

Reser also noted that the panel helps incorporate more people into workflows, such as freelance photographers, who otherwise may not have access to an organisation’s digital asset management system. The Cultural Heritage Panel allows them to be an efficient part of the process of viewing the metadata included with an image, and adding to it when appropriate.

“IPTC is the most popular schema in embedded metadata,” Reser said. “Over time I bet we’ll see a lot of the cultural heritage fields creep into off-the-shelf programs and software.”

The panel is free, includes an easy-to-use interface, and includes key image administration fields. Image caption and keywords can be automatically generated from existing Artwork or Object data.

Download the IPTC Cultural Heritage Panel and User Guide for Adobe Bridge.

Questions? Contact us.

Twitter: @IPTC

LinkedIn: IPTC

It’s an Olympic year for IPTC’s SportsML 3.0 standard, the recently released update to the most comprehensive tech-industry XML format for sports data.

Norsk Telegrambyrå (NTB), in Norway, is one of the first news organizations to implement SportsML 3.0, in time for the 2016 Games in Rio.

“We figured, why not use the latest technology available?” said Trond Husø, system developer for NTB, who worked on the standard’s update, released in July. “SportsML 3.0’s use of controlled vocabularies for sport competitions and other subjects now provides many benefits, including more flexibility. Storing results is also more convenient.”

SportsML 3.0 is the ideal structure and back-end solution used by many major news organizations because it is the only open global standard for scores, schedules, standings and statistics. “It saves the time and cost of developing an in-house structure,” said Husø, also a member of IPTC’s Sports Content Working Party.

The Rio Games, which will host about 10,500 athletes from 206 countries, for 17 days and 306 events, are revolutionary for big data and new approaches for managing it. For the first time, the International Olympic Committee (IOC) used cloud-based solutions for work processes including volunteer recruitment and accreditation.

And consider the experimental technologies and apps launched by key broadcasters and Olympic Broadcasting Services, the Olympic committee responsible for coordinating TV coverage of the Games: virtual reality footage, online streaming, automated reporting, drone cameras, and Super-High Vision, which is supposedly 16 times clearer than HD.

Billions of Olympic spectators worldwide have naturally come to expect real-time results and accurate scores to be delivered to them, with a side of historical perspective. All with little thought as to how the information reaches the public, be it via tickers on websites, graphic stats on TV screens, or factoids offered by commentators.

Schedules, competitors’ names, bio information, times, rankings, medalists – how does all of this data get served up so quickly and uniformly among networks and news services? And how does it get integrated into existing news systems, namely SportsML 3.0?

It starts with the IOC – the non-profit, non-governmental body that organizes the Olympic Games and Youth Olympic Games. They act as a catalyst for collaboration for all parties involved, from athletes, organising committees, and IT, to broadcast partners and United Nations agencies. The IOC generates revenue for the Olympic Movement through several major marketing efforts, including the sale of broadcast rights.

The IOC produces the Olympic Data Feed (ODF), the repository of live data about past and current games. The IOC is responsible for communicating the official results; they use the specific ODF format for their ODF data.

Paying media partners sign a licensing agreement to use ODF, to report on results through their own channels, and build new apps, services and analysis tools.

The goal of ODF is to define a unified set of messages valid for all sports and several different news systems – so that all partners are receiving the same data, at the same time. It was introduced for the Vancouver Games in 2010 and is an ongoing development effort.

According to the IOC’s website, ODF plays the part of messenger. From a technical standpoint, the data is machine-readable. ODF sends sports information from the moment it is generated to its final destination via Extensible Markup Language (XML). XML, a markup language for encoding structured data, is a flexible means to share that data electronically via the Internet, as well as via corporate networks.

IPTC’s SportsML 3.0 easily imports data from ODF. Using SportsML to structure the ODF’s data is a broad and comprehensive solution to approaching all sports and competitions worldwide. ODF has identifiers for sports and awards (gold, silver, and bronze medals) executed at the Olympic Games; sports outside of ODF are identified by vocabulary terms of SportsML.

“SportsML 3.0 provides one structure for the data for developers to work in,” said Husø. “The structure will be the same, even if there are changes to ODF in future Olympic Games; the import and export process of the data will not change.”
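The point about a stable structure can be illustrated with a small import step that converts an incoming XML message into one internal shape. The sample message, its element and attribute names, and the output dictionary are all invented for this sketch; they are not the real ODF or SportsML formats.

```python
import xml.etree.ElementTree as ET

# A made-up feed message; real ODF messages look different.
SAMPLE_FEED = """
<results event="100m-final">
  <competitor name="Runner A" rank="1" time="9.81"/>
  <competitor name="Runner B" rank="2" time="9.89"/>
  <competitor name="Runner C" rank="3" time="9.91"/>
  <competitor name="Runner D" rank="4" time="9.93"/>
</results>
"""

MEDALS = {1: "gold", 2: "silver", 3: "bronze"}


def parse_feed(xml_text: str) -> list:
    """Convert one hypothetical feed message into a list of uniform result records."""
    root = ET.fromstring(xml_text)
    records = []
    for competitor in root.findall("competitor"):
        rank = int(competitor.get("rank"))
        records.append(
            {
                "event": root.get("event"),
                "athlete": competitor.get("name"),
                "rank": rank,
                "result": competitor.get("time"),
                "medal": MEDALS.get(rank),  # None outside the top three
            }
        )
    return records
```

If the incoming format changes, only `parse_feed` needs to change; everything downstream keeps working with the same record shape.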

Among content providers that use SportsML (various versions) are NTB, AP mobile (USA), BBC (UK), ESPN (USA), PA – Press Association (UK), Univision (USA, Mexico), Yahoo! Sports (USA), Austria Presse Agentur (APA) (Austria), and XML Team Solutions (Canada).

SportsML 3.0 is based on its parent standard, NewsML-G2, the backbone of many news systems, and a single format for exchanging text, images, video, audio news and event or sports data – and packages thereof. SportsML 3.0 is fully compatible with IPTC G2 structures.

For more information on SportsML 3.0:

SportsML 3.0 Standard, including Zip package

SportsML 3.0 Specification Documents, IPTC’s Developer site

NewsML-G2 Standard

Contact: Trond Husø @trondhuso, Trond.Huso@ntb.no

Mittmedia and Journalism++ Stockholm, two news organizations in Sweden, are successfully developing and incorporating APIs, automation tools and robots into workflows to enhance the capabilities of newsrooms, as reported at the International Press Telecommunications Council’s (IPTC) Spring Meeting 2016.

News organizations continue to experiment with bots as part of a frontier in automation journalism, as publishers draw on the benefits of the massive amounts of data available to newsrooms, including information about their own audiences. Despite some apprehension, the benefits of automating parts of the publishing process are many: aiding journalists in storytelling with the ability to sift through big data, refining workflows and reducing workloads, and more precise and faster content delivery to customers.

Mittmedia began their automation efforts in 2015 with a weather forecast text bot, which pulls data from the Swedish Meteorological and Hydrological Institute.

Set up initially as a testing tool based on a simple minimum viable product (MVP), it now delivers daily forecasts for 42 municipalities, soon to be 63.

Mittmedia’s next project was Rosalinda, a sports robot that transforms data into text for immediate publishing. Data is pulled from the Swedish website Everysport API, giving developers access to information on 90,000 teams and 1,500,000 matches. Rosalinda now reports all football, ice hockey and floor ball matches played in Sweden, which filled a need in the market. United Media, owned by Mittmedia and two other companies, developed the tool.
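Turning match data into publishable text can be as simple as filling sentence templates. The sketch below shows the general technique only; it is not Rosalinda's code, and the dictionary keys, team names and competition are hypothetical rather than fields of the Everysport API.

```python
def match_report(match: dict) -> str:
    """Turn one match record into a one-sentence report."""
    home, away = match["home"], match["away"]
    home_goals, away_goals = match["home_goals"], match["away_goals"]

    # Pick a sentence template based on the outcome.
    if home_goals > away_goals:
        sentence = f"{home} beat {away} {home_goals}-{away_goals}"
    elif home_goals < away_goals:
        sentence = f"{away} won {away_goals}-{home_goals} away at {home}"
    else:
        sentence = f"{home} and {away} drew {home_goals}-{away_goals}"

    return f"{sentence} in {match['competition']} on {match['date']}."


example = {
    "home": "Team A",
    "away": "Team B",
    "home_goals": 3,
    "away_goals": 1,
    "competition": "Division 1",
    "date": "2016-05-14",
}
print(match_report(example))
# prints: Team A beat Team B 3-1 in Division 1 on 2016-05-14.
```

Production systems typically add many more templates and vary the wording so that reports do not all read alike.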

Mittmedia has adopted a data-driven mindset and work process to gain a competitive edge over other local news sources. “We aim to deliver more content – faster, and provide it to the right person, at the right time and at the right place,” said Mikael Tjernström, Mittmedia API Editor.

Faster publication and more personal and relevant content were also among the reasons for Journalism++’s development of the automated news service Marple, which focuses on story finding and investigation, rather than text generation, according to Jens Finnäs, the organization’s founder.

One of four Swedish projects to receive funding from Google’s Digital News Initiative (DNI) this year, Marple is used for finding targeted local stories in public data. For example, Marple has analyzed monthly crime statistics and found a wave of bike thefts in Gothenburg and a record number of reported narcotics offences in Sollefteå.
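One simple form of story finding is to flag any data series whose latest value is a new record. The sketch below shows that idea under stated assumptions: it is not Marple's method, the function name is made up, and the series names and numbers are invented.

```python
def find_records(monthly_counts: dict) -> list:
    """Return a story lead for each series whose latest month is a new high."""
    leads = []
    for name, counts in monthly_counts.items():
        if len(counts) < 2:
            continue  # nothing to compare against
        *history, latest = counts
        previous_high = max(history)
        if latest > previous_high:
            leads.append(
                f"{name}: record high of {latest} (previous high {previous_high})"
            )
    return leads


# Invented monthly figures, oldest first.
sample = {
    "Bike thefts, City A": [40, 38, 45, 71],
    "Narcotics offences, City B": [12, 15, 11, 9],
}
print(find_records(sample))
# prints: ['Bike thefts, City A: record high of 71 (previous high 45)']
```

A journalist would still need to check whether a flagged record is newsworthy or just a reporting artefact; the automation only narrows down where to look.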

“Open data has been a highly underutilized resource in journalism. We are hoping to change that,” Finnäs said. “We don’t think the robots will replace journalists, but we are positive that automation can make journalism smarter and more efficient, and that there are thousands of untold stories to be found.”

The grant from Google’s DNI gives Journalism++ a unique opportunity to test Marple and possibly turn it into a commercially viable product, Finnäs said.

More information:

Jens Finnäs: jens.finnas@gmail.com Twitter @jensfinnas

Mikael Tjernström: Twitter @micketjernstrom

Photo by CC/FLICKR/Peyri_Herrera.

When IPTC member Sourcefabric presents their flagship product Superdesk – an extensible end-to-end news production, curation and distribution platform – they always recognize the importance of IPTC standard NewsML-G2 as its backbone.

As Sourcefabric CTO Holman Romero explained at the IPTC Summer Meeting in Stockholm (13 – 15 June 2016), Superdesk was built on the principles of the News Architecture part of the NewsML-G2 specification by the IPTC. This is because Superdesk is not a traditional Web CMS, but rather a platform developed from the ground up for journalists to manage the numerous processes of a newsroom.

“Superdesk is more than a news management tool built for journalists by journalists, for creation, archiving, distribution, workflow structure, and editorial communications,” Romero said. “We at Sourcefabric also see it as the cornerstone of the new common open-source code base for quality, professional journalism.”

NewsML-G2 is a blueprint that provides all the concepts and business logic for a news architecture framework. It also standardises the handling of metadata that ultimately enables all types of content to be linked, searched, and understood by end users. NewsML-G2 metadata properties are designed to comply with RDF, the data model of the Semantic Web, enabling the development of new applications and opportunities for news organisations in evolving digital markets.

There were several important factors that led Sourcefabric, Europe’s largest developer of open source tools for news media, to the decision to use NewsML-G2:

- IPTC has established credibility as a consortium of the world’s leading news agencies and publishers. Additionally, NewsML-G2 has been adopted by some of the world’s major news agencies as the de facto standard for news distribution. “Why reinvent the wheel?” Romero said. “IPTC’s standards are based on years of experience of top news industry professionals.”

- NewsML-G2 met the requirements of Sourcefabric’s content model: granularity, structured data, flexibility, and reusability.

- NewsML-G2 met the requirements for Sourcefabric’s design principles:

  - Every piece of content is a News Item.

  - Content types are text, image, video, audio.

  - Content profiles support the creation of story profiles and templates.

  - It can format items for content packages and highlights.

  - Content can be created once and used in many places. Sourcefabric refers to this as the COPE model: Create Once, Publish Everywhere. This structure enables and frees the content to be used seamlessly and automatically across multiple channels and devices, and in a variety of previously impossible contexts.
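The COPE idea in the list above can be sketched as one structured item rendered for several channels. This is an illustration only, not Superdesk's API or the NewsML-G2 schema: the `NewsItem` fields, the channel names and the render functions are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from html import escape


@dataclass
class NewsItem:
    """One piece of content, created once and stored in structured form."""
    guid: str
    item_type: str  # "text", "image", "video" or "audio"
    headline: str
    body: str


def to_web_html(item: NewsItem) -> str:
    return f"<article><h1>{escape(item.headline)}</h1><p>{escape(item.body)}</p></article>"


def to_json_feed(item: NewsItem) -> str:
    return json.dumps(asdict(item), sort_keys=True)


def to_push_alert(item: NewsItem, limit: int = 60) -> str:
    """Shorten the headline so it fits a notification."""
    if len(item.headline) <= limit:
        return item.headline
    return item.headline[: limit - 1].rstrip() + "…"


# Each channel is just a different rendering of the same item.
CHANNELS = {"web": to_web_html, "feed": to_json_feed, "alert": to_push_alert}


def publish_everywhere(item: NewsItem) -> dict:
    """Render the single source item once per channel."""
    return {name: render(item) for name, render in CHANNELS.items()}
```

Adding a new channel means adding one render function; the stored item itself never has to be rewritten.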

Sourcefabric also stresses the importance of metadata, the building blocks of “structured journalism.” As explained by Romero in a recent blog post: “The foundation of structured journalism is built on the ability to access and locate enormous amounts of data from all over the web and from within the system itself (i.e. content from previous articles). Without providing valuable metadata for each of your stories and subsequent pieces of visual collateral, finding key information located inside of them becomes infinitely more difficult.”

News organizations that use Superdesk include the Australian news agency AAP and the Norwegian news agency NTB. Other IPTC standards supported by the platform are NewsML 1, ninjs, NITF, Subject Codes and IPTC 7901.

Source code repositories are publicly available on GitHub: https://github.com/superdesk

About Sourcefabric: Sourcefabric’s mission is to make professional-grade technology available to all who believe that quality independent journalism has a fundamental role to play in any healthy society. They generate revenue through IT services – managed hosting, SaaS, custom development, integration into existing workflows – as well as project-by-project funding, grants and donations.

This will be the title of IPTC’s presentation at the JPEG Privacy & Security Workshop held in Brussels (Belgium) on Tuesday, 13 October 2015.

The main goal of applying metadata to a photo is to permanently associate it with descriptions of its visual content and with the data needed to manage it properly. For more than two decades this has been done by embedding the metadata in JPEG files. In today’s internet-driven business world, however, a JPEG file may take many hops through a supply chain, where it is exposed to people and software that strip out metadata values.

As the body behind the most widely used business metadata schema for photos, IPTC is constantly asked by people in the photo business how their metadata can be protected against deletion. This presentation will set out the requirements in detail and consider different levels of protection that meet the needs of both the originators of photos and the parties downstream.

This topic will be presented by Michael Steidl, IPTC’s Managing Director and lead of the Photo Metadata work.