Family Tree magazine has published a guide on using embedded metadata for photographs in genealogy – the study of family history.

Rick Crume, a genealogy consultant and the article’s author, says IPTC metadata “can be extremely useful for savvy archivists […] IPTC standards can help future-proof your metadata. That data becomes part of the digital photo, contained inside the file and preserved for future software programs.”

Crume quotes Ken Watson from All About Digital Photos saying “[IPTC] is an internationally recognized standard, so your IPTC/XMP data will be viewable by someone 50 or 100 years from now. The same cannot be said for programs that use some proprietary labelling schemes.”

Crume then adds: “To put it another way: If you use photo software that abides by the IPTC/XMP standard, your labels and descriptive tags (keywords) should be readable by other programs that also follow the standard. For a list of photo software that supports IPTC Photo Metadata, visit the IPTC’s website.”
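As a rough illustration of why embedded metadata travels with the file, the sketch below looks for an XMP packet inside raw image bytes and reads the keywords stored in `dc:subject`. It is a minimal sketch using only the Python standard library; the function names and the sample bytes are made up for illustration, and real files can hold XMP in ways this simple search does not handle.

```python
import re
import xml.etree.ElementTree as ET
from typing import List, Optional

# Standard RDF and Dublin Core namespace URIs used inside XMP packets.
NS = {
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "dc": "http://purl.org/dc/elements/1.1/",
}


def extract_xmp_packet(data: bytes) -> Optional[str]:
    """Return the first XMP packet found in the raw file bytes, or None."""
    match = re.search(rb"<x:xmpmeta.*?</x:xmpmeta>", data, re.DOTALL)
    if match is None:
        return None
    return match.group(0).decode("utf-8", errors="replace")


def read_keywords(data: bytes) -> List[str]:
    """Read the keywords (dc:subject) from an embedded XMP packet."""
    packet = extract_xmp_packet(data)
    if packet is None:
        return []
    root = ET.fromstring(packet)
    items = root.findall(".//dc:subject/rdf:Bag/rdf:li", NS)
    return [item.text or "" for item in items]


# Made-up stand-in for an image file: marker bytes around a tiny XMP packet.
SAMPLE = (
    b"\xff\xd8"
    b'<x:xmpmeta xmlns:x="adobe:ns:meta/">'
    b'<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#">'
    b'<rdf:Description xmlns:dc="http://purl.org/dc/elements/1.1/">'
    b"<dc:subject><rdf:Bag>"
    b"<rdf:li>family reunion</rdf:li><rdf:li>1952</rdf:li>"
    b"</rdf:Bag></dc:subject>"
    b"</rdf:Description></rdf:RDF></x:xmpmeta>"
    b"\xff\xd9"
)

print(read_keywords(SAMPLE))
```

Because the keywords live inside the file itself, any program that understands the same packet layout can read them without access to the software that wrote them.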

The article goes on to recommend particular software choices based on IPTC’s list of photo software that supports IPTC Photo Metadata. In particular, Crume recommends that users don’t switch from Picasa to Google Photos, because Google Photos does not support IPTC Photo Metadata in the same way. Instead, he recommends that users stick with Picasa for as long as possible, and then choose another photo management tool from the supported software list.

Similarly, Crume recommends that users should not move from Windows Photo Gallery to the Windows 10 Photos app, because the Photos app does not support IPTC embedded metadata.

Crume then goes on to investigate popular genealogy sites to examine their support for embedded metadata, something that we do not cover in our photo metadata support surveys.

The full article can be found on FamilyTree.com.

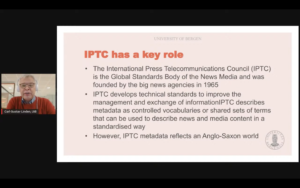

The IPTC took part in a panel on Diversity and Inclusion at the CEPIC Congress 2022, the picture industry’s annual get-together, held this year in Mallorca, Spain.

Google’s Anna Dickson hosted the panel, which also included Debbie Grossman of Adobe Stock, Christina Vaughan of ImageSource and Cultura, and photographer Ayo Banton.

Unfortunately Abhi Chaudhuri of Google couldn’t attend due to Covid, but Anna presented his material on Google’s new work surfacing skin tone in Google image search results.

Brendan Quinn, IPTC Managing Director, participated on behalf of the IPTC Photo Metadata Working Group, which put together the Photo Metadata Standard, including the new properties covering accessibility for visually impaired people: Alt Text (Accessibility) and Extended Description (Accessibility).

Brendan also discussed IPTC’s other Photo Metadata properties concerning diversity, including the Additional Model Information which can include material on “ethnicity and other facets of the model(s) in a model-released image”, and the characteristics sub-property of the Person Shown in the Image with Details property which can be used to enter “a property or trait of the person by selecting a term from a Controlled Vocabulary.”

Some interesting conversations ensued around the difficulty of keeping diversity information up to date in an ever-changing world of diversity language, the pros and cons of using controlled vocabularies (pre-selected word lists) to cover diversity information, and the differences in covering identity and diversity information on a self-reported basis versus reporting by the photographer, photo agency or customer.

It’s a fascinating area and we hope to be able to support the photographic industry’s push forward with concrete work that can be implemented at all types of photographic organisations to make the benefits of photography accessible for as many people as possible, regardless of their cultural, racial, sexual or disability identity.

The National Association of Broadcasters (NAB) Show wrapped up its first face-to-face event in three years last week in Las Vegas. In spite of the name, this is an internationally attended trade conference and exhibition showcasing equipment, software and services for film and video production, management and distribution. There were 52,000 attendees, down from a typical 90-100k, with some reduction in booth density; overall the show was reminiscent of pre-COVID days. A few members of IPTC met while there: Mark Milstein (vAIsual), Alison Sullivan (MGM Resorts), Phil Avner (Associated Press) and Pam Fisher (The Media Institute). Kudos to Phil for working, showcasing ENPS on the AP stand, while others walked the exhibition stands.

NAB is a long-running event and several large vendors have large ‘anchor’ booths. Some such as Panasonic and Adobe reduced their normal NAB booth size, while Blackmagic had their normal ‘city block’-sized presence, teeming with traffic. In some ways the reduced booth density was ideal for visitors: plenty of tables and chairs populated the open areas making more meeting and refreshment space available. The NAB exhibition is substantially more widely attended than the conference, and this year several theatres were provided on the show floor for sessions any ‘exhibits only’ attendee could watch. Some content is now available here: https://nabshow.com/2022/videos-on-demand/

For the most part this was a show of ‘consolidation’ rather than ‘innovation’. For example, exhibitors were enjoying welcoming their partners and customers face-to-face rather than launching significant new products. Codecs standardised during the past several years were finally reaching mainstream support, with AV1, VP9 and HEVC well-represented across vendors. SVT-AV1 (Scalable Video Technology for AV1) was particularly prevalent, having been well optimised and made available to use license-free by the standard’s contributors. VVC (Versatile Video Coding), a more recent and more advanced standard, is still too computationally intensive for commercial use, though a small set of exhibitors (e.g. Fraunhofer) mentioned it on their stands.

IP is now fairly ubiquitous within broadcast ecosystems. To consolidate further, an IP Showcase booth illustrating support across standards bodies and professional organisations championed more sophisticated adoption. A pyramid graphic showing a cascade of ‘widely available’ to ‘rarely available’ sub-systems encouraged deeper adoption.

Super Resolution – raising the game for video upscaling

One of the show floor sessions – “Improving Video Quality with AI” – presented advances by iSIZE and Intel. The Intel technology may be particularly interesting to IPTC members, and concerns “Super Resolution.” Having followed the subject for over 20 years, for me this was a personal highlight of the show.

Super Resolution is a technique for creating higher resolution content from smaller originals. For example, achieving a professional quality 1080p video from a 480p source, or scaling up a social media-sized image for feature use.
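For contrast, the simplest possible upscaler just repeats existing pixels, which enlarges an image without adding any detail. The sketch below shows that naive baseline (nearest-neighbour upscaling) on a tiny grayscale grid; it is not the RAISR method or Intel’s tooling, and the function name is made up for illustration.

```python
from typing import List

Image = List[List[int]]  # rows of grayscale pixel values


def upscale_nearest(image: Image, factor: int) -> Image:
    """Upscale by an integer factor by repeating each pixel.

    No new detail is created: every output pixel is a copy of an input pixel.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    out: Image = []
    for row in image:
        # Repeat each pixel horizontally, then repeat the widened row vertically.
        wide = [pixel for pixel in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


small = [[0, 255],
         [255, 0]]
large = upscale_nearest(small, 2)
for row in large:
    print(row)
```

Super Resolution methods aim to do better than this by estimating plausible detail for the new pixels rather than copying their neighbours.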

A few years ago a novel and highly effective Super Resolution method was introduced (“RAISR”, see https://arxiv.org/abs/1606.01299). It represented a major discontinuity in the field, albeit with the usual mountain of investment and work needed to take it from ‘R’ (research) to ‘D’ (development).

This is exactly what Intel have done, and the resulting toolsets will be made available at no cost at the company’s Open Visual Cloud repository at the end of May.

Intel invested four years in improving the AI/ML algorithms (having created a massive ground truth library for learning), optimising to CPUs for performance and parallelisation, and then engineering the ‘applied’ tools developers need for integration (e.g. Docker containers, FFmpeg and GStreamer plug-ins). Performance will now be commercially robust.

The visual results are astonishing, and could have a major impact on the commercial potential of photographic and film/video collections needing to reach much higher resolutions or even to repair ‘blurriness’.

Next year’s event is the centennial of the first NAB Show and takes place from April 15th-19th in Las Vegas.

– Pam Fisher – Lead, IPTC Video Metadata Working Group

IPTC members will be appearing at imaging.org’s Imaging Science and Technology DigiTIPS 2022 meeting series tomorrow, April 26.

The session description is as follows:

Unmuting Your ‘Silent Images’ with Photo Metadata

Caroline Desrosiers, founder and CEO, Scribely

David Riecks and Michael Steidl, IPTC Photo Metadata Working Group

Abstract: Learn how embedded photo metadata can aid in a data-driven workflow from capture to publish. Discover what details exist in your images; and learn how you can affix additional information so that you and others can manage your collection of images. See how you can embed info to automatically fill in “Alt Text” to images shown on your website. Explore how you can test your metadata workflow to maximize interoperability.

Registration is still open. You can register at https://www.imaging.org/Site/IST/Conferences/DigiTIPS/DigiTIPS_Home.aspx?Entry_CCO=3#Entry_CCO

A hot topic in media circles these days is “synthetic media”. That is, media that was created either partly or fully by a computer. Usually the term is used to describe content created either partly or wholly by AI algorithms.

IPTC’s Video Metadata Working Group has been looking at the topic recently and we concluded that it would be useful to have a way to describe exactly what type of content a particular media item is. Is it a raw, unmodified photograph, video or audio recording? Is it a collage of existing photos, or a mix of synthetic and captured content? Was it created using software trained on a set of sample images or videos, or is it purely created by an algorithm?

We have an existing vocabulary that suits some of this need: Digital Source Type. This vocabulary was originally created to be able to describe the way in which an image was scanned into a computer, but it also represented software-created images at a high level. So we set about expanding and modifying that vocabulary to cover more detail and more specific use cases.

It is important to note that we are only describing the way a media object has been created: we are not making any statements about the intent of the user (or the machine) in creating the content. So we deliberately don’t have a term “deepfake”, but we do have “trainedAlgorithmicMedia” which would be the term used to describe a piece of content that was created by an AI algorithm such as a Generative Adversarial Network (GAN).

Here are the terms we propose to include in the new version of the Digital Source Type vocabulary. (New terms and definition changes are marked in bold text. Existing terms are included in the list for clarity.)

| Term ID | digitalCapture |
| --- | --- |
| Term name | Original digital capture sampled from real life |
| Term description | The digital media is captured from a real-life source using a digital camera or digital recording device |
| Examples | Digital photo or video taken using a digital SLR or smartphone camera |

| Term ID | negativeFilm |
| --- | --- |
| Term name | Digitised from a negative on film |
| Term description | The digital media was digitised from a negative on film or any other transparent medium |
| Examples | Film scanned from a moving image negative |

| Term ID | positiveFilm |
| --- | --- |
| Term name | Digitised from a positive on film |
| Term description | The digital media was digitised from a positive on a transparency or any other transparent medium |
| Examples | Digital photo scanned from a photographic positive |

| Term ID | |
| --- | --- |
| Term name | Digitised from a print on non-transparent medium |
| Term description | The digital image was digitised from an image printed on a non-transparent medium |
| Examples | Digital photo scanned from a photographic print |

| Term ID | humanEdited |
| --- | --- |
| Term name | Original media with minor human edits |
| Term description | Minor augmentation or correction by a human, such as a digitally-retouched photo used in a magazine |
| Examples | Video camera recording, manipulated digitally by a human editor |

| Term ID | compositeCapture |
| --- | --- |
| Term name | Composite of captured elements |
| Term description | Mix or composite of several elements that are all captures of real life |
| Examples | * A composite image created by a digital artist in Photoshop based on several source images * Edited sequence or composite of video shots |

| Term ID | algorithmicallyEnhanced |
| --- | --- |
| Term name | Algorithmically-enhanced media |
| Term description | Minor augmentation or correction by algorithm |
| Examples | A photo that has been digitally enhanced using a mechanism such as Google Photos’ “de-noise” feature |

| Term ID | dataDrivenMedia |
| --- | --- |
| Term name | Data-driven media |
| Term description | Digital media representation of data via human programming or creativity |
| Examples | * A representation of a distant galaxy created by analysing the outputs of a deep-space telescope (as opposed to a regular camera) * An infographic created using a computer drawing tool such as Adobe Illustrator or AutoCAD |

| Term ID | digitalArt |
| --- | --- |
| Term name | Digital art |
| Term description | Media created by a human using digital tools |
| Examples | * A cartoon drawn by an artist into a digital tool using a digital pencil, a tablet and a drawing package such as Procreate or Affinity Designer * A scene from a film/movie created using Computer Graphic Imagery (CGI) * Electronic music composition using purely synthesised sounds |

| Term ID | virtualRecording |
| --- | --- |
| Term name | Virtual recording |
| Term description | Live recording of virtual event based on synthetic and optionally captured elements |
| Examples | * A recording of a computer-generated sequence, e.g. from a video game * A recording of a Zoom meeting |

| Term ID | compositeSynthetic |
| --- | --- |
| Term name | Composite including synthetic elements |
| Term description | Mix or composite of several elements, at least one of which is synthetic |
| Examples | * Movie production using a combination of live-action and CGI content, e.g. using Unreal engine to generate backgrounds * A capture of an augmented reality interaction with computer imagery superimposed on a camera video, e.g. someone playing Pokemon Go |

| Term ID | trainedAlgorithmicMedia |
| --- | --- |
| Term name | Trained algorithmic media |
| Term description | Digital media created algorithmically using a model derived from sampled content |
| Examples | * Image based on deep learning from a series of reference examples * A “speech-to-speech” generated audio or “deepfake” video using a combination of a real actor and an AI model * “Text-to-image” using a text input to feed an algorithm that creates a synthetic image |

| Term ID | algorithmicMedia |
| --- | --- |
| Term name | Algorithmic media |
| Term description | Media created purely by an algorithm not based on any sampled training data, e.g. an image created by software using a mathematical formula |
| Examples | * A purely computer-generated image such as a pattern of pixels generated mathematically e.g. a Mandelbrot set or fractal diagram * A purely computer-generated moving image such as a pattern of pixels generated mathematically |
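One way a tool might carry these proposed terms is as a small lookup table that rejects values outside the vocabulary. The sketch below is only an illustration: the term IDs and names are copied from the listing above (the entry whose Term ID is blank there is left out), while the base URI and the function names are assumptions rather than part of this proposal.

```python
# Term IDs and names copied from the proposed listing. The entry whose
# Term ID is blank in the listing is deliberately left out.
DIGITAL_SOURCE_TYPES = {
    "digitalCapture": "Original digital capture sampled from real life",
    "negativeFilm": "Digitised from a negative on film",
    "positiveFilm": "Digitised from a positive on film",
    "humanEdited": "Original media with minor human edits",
    "compositeCapture": "Composite of captured elements",
    "algorithmicallyEnhanced": "Algorithmically-enhanced media",
    "dataDrivenMedia": "Data-driven media",
    "digitalArt": "Digital art",
    "virtualRecording": "Virtual recording",
    "compositeSynthetic": "Composite including synthetic elements",
    "trainedAlgorithmicMedia": "Trained algorithmic media",
    "algorithmicMedia": "Algorithmic media",
}

# Assumption: a base URI following the usual IPTC NewsCodes pattern.
BASE_URI = "http://cv.iptc.org/newscodes/digitalsourcetype/"


def term_uri(term_id: str) -> str:
    """Return the full URI for a known term ID, or raise ValueError."""
    if term_id not in DIGITAL_SOURCE_TYPES:
        raise ValueError(f"not a Digital Source Type term: {term_id!r}")
    return BASE_URI + term_id


def term_name(term_id: str) -> str:
    """Return the human-readable name for a known term ID."""
    if term_id not in DIGITAL_SOURCE_TYPES:
        raise ValueError(f"not a Digital Source Type term: {term_id!r}")
    return DIGITAL_SOURCE_TYPES[term_id]


print(term_uri("trainedAlgorithmicMedia"))
print(term_name("virtualRecording"))
```

Validating against a fixed list means that a value such as "deepfake", which describes intent rather than the method of creation, is rejected rather than silently stored.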

We propose that the following term, which exists in the current DigitalSourceType CV, be retired:

| Term ID | RETIRE: softwareImage |
| --- | --- |
| Term name | Created by software |
| Term description | The digital image was created by computer software |
| Note | We propose that trainedAlgorithmicMedia or algorithmicMedia be used instead of this term. |
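If this retirement goes ahead, existing records tagged softwareImage would need to be mapped to one of the two replacement terms. The sketch below shows one possible way to do that, based on the term descriptions in this proposal; the function name and the `trained_on_samples` flag are hypothetical, and deciding that flag for old content would still need human judgement.

```python
RETIRED_TERM = "softwareImage"


def migrate_term(term_id: str, trained_on_samples: bool) -> str:
    """Map the retired term to a proposed replacement; pass other terms through.

    trained_on_samples is a hypothetical flag: True when the media was created
    using a model derived from sampled content, False when it was created
    purely by an algorithm with no sampled training data.
    """
    if term_id != RETIRED_TERM:
        return term_id
    return "trainedAlgorithmicMedia" if trained_on_samples else "algorithmicMedia"


print(migrate_term("softwareImage", trained_on_samples=True))
print(migrate_term("softwareImage", trained_on_samples=False))
print(migrate_term("digitalCapture", trained_on_samples=False))
```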

We welcome all feedback from across the industry to these proposed terms.

Please contact Brendan Quinn, IPTC Managing Director, at mdirector@iptc.org or use the IPTC Contact Us form to send your feedback.

The IPTC has an ongoing project to help the news and media industry deal with content credibility and provenance. As part of this, we have started working with Project Origin, a consortium of news and technology organisations who have come together to fight misinformation through the use of content provenance technologies.

On Tuesday 22nd February, Managing Director of IPTC Brendan Quinn spoke on a panel at an invite-only Executive Briefing event attended by leaders from news organisations around the world.

Other speakers at the event included Marc Lavallee, Head of R&D for The New York Times, Pascale Doucet of France Télévision, Eric Horvitz of Microsoft Research, Andy Parsons of Adobe, and Laura Ellis, Jamie Angus and Jatin Aythora of the BBC.

The event marks the beginning of the next phase of the industry’s work on content credibility. C2PA has now delivered the 1.0 version of its spec, so the next phase of the work is for the news industry to get together to create best practices around implementing it in news workflows.

IPTC and Project Origin will be working together with stakeholders from all parts of the news industry to establish guidelines for making provenance work in a practical way across the entire news ecosystem.

Bill Kasdorf, IPTC Individual Member, has written about IPTC Photo Metadata in his latest column for Publishers Weekly.

In the article, a double-page spread in the printed version of the 11/22/2021 issue of Publishers Weekly and an extended article online, Bill references Caroline Desrosiers of IPTC Startup member Scribely saying “if publications are born accessible, then their images should be born accessible, as well.”

The article describes how the new Alt Text (Accessibility) and Extended Description (Accessibility) properties in IPTC Photo Metadata can be used to make EPUBs more accessible.

Bill goes on to provide an example, supplied by Caroline Desrosiers, of how an image’s caption, alt text and extended description fulfil very different purposes, and mentions that it’s perfectly fine to leave alt text blank in some cases! For more details, read the article here.
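To make the idea concrete, here is a minimal sketch of how a publishing tool might turn an embedded alt text value into an HTML `img` tag, falling back to an empty `alt` attribute when no alt text is present. The metadata is assumed to arrive as a plain dictionary, and the key name and function name are assumptions made for illustration, not a defined API.

```python
from html import escape
from typing import Mapping

# Assumption: the metadata has already been read from the image into a plain
# mapping, and the alt text is stored under this hypothetical key.
ALT_TEXT_KEY = "AltTextAccessibility"


def img_tag(src: str, metadata: Mapping[str, str]) -> str:
    """Build an <img> tag whose alt attribute comes from embedded metadata.

    An empty alt attribute is emitted when no alt text is present, which is
    how HTML marks an image as decorative.
    """
    alt = metadata.get(ALT_TEXT_KEY, "")
    return f'<img src="{escape(src)}" alt="{escape(alt)}">'


# Made-up sample values for illustration.
print(img_tag("cover.jpg", {ALT_TEXT_KEY: 'A "red" door'}))
print(img_tag("divider.png", {}))
```

Escaping the value matters because alt text is free text and may contain quotation marks or angle brackets.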

“Metadata is the wheel in the digital business model,” according to Carl-Gustav Linden of University of Bergen in Norway. “We can use it to combine the right content with the right readers, listeners and viewers. That’s why metadata is so essential.”

Professor Linden was speaking at the JournalismAI Festival taking place this week, hosted by the Polis think-tank at the London School of Economics and Political Science. The JournalismAI project is a collaboration between Polis and newsrooms and institutes around the world, funded by the Google News Initiative.

We are very happy to see several mentions of IPTC standards and IPTC members, particularly the New York Times and iMatrics. The New York Times is seen as a forerunner in content classification, with Jennifer Parrucci (lead of the IPTC NewsCodes Working Group) giving presentations recently about their work. iMatrics supplies an automated content classification system based on IPTC Media Topics which can be used as part of editorial workflows.

One thing we would like to note is that Professor Linden mentions that the IPTC vocabularies are influenced by our background in US-based news organisations, citing an example of the schools terms being focussed on the US system. We are happy to say that in a recent update to IPTC Media Topics we clarified our terms around school systems, making the label names and descriptions much more generic and based on the international schools classifications.

This change was the result of many IPTC member organisations working together from different parts of the world, including Scandinavia, to come to a result that hopefully works for everyone (and of course, each user of Media Topics is welcome to extend the vocabulary for their own purposes if necessary). This is an example of the great work that takes place when our members work together.

The JournalismAI festival continues until Friday this week. All sessions from the festival are available on YouTube.

Thanks again to Polis and the JournalismAI team for giving us a mention!

As previously announced, the IPTC are participating in the Coalition for Content Provenance and Authenticity (C2PA) project to create a specification to tackle online disinformation and misinformation.

After months of work by the C2PA Technical Working Group, the first public draft of the specification has been released. In particular, the spec defines how properties from the IPTC Photo Metadata Standard can be included in a C2PA manifest, creating a provenance trail that allows future viewers to validate the authenticity of a claim associated with a media asset (such as the location where the photo was taken, the creator’s name or the identity of a person shown in the photo).
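The general idea of binding claims to an asset can be sketched in a few lines. The example below is a deliberately simplified illustration, not the C2PA manifest format: it ties a set of metadata claims to a hash of the asset bytes and protects both with a keyed digest, so that a change to either the asset or the claims is detectable. The field names, the sample values and the shared demo key are all made up; real provenance systems use certificate-based digital signatures.

```python
import hashlib
import hmac
import json

# Hypothetical shared key, for demonstration only.
SECRET = b"demo-signing-key"


def _payload(claim: dict) -> bytes:
    """Serialise a claim deterministically so it can be signed and re-checked."""
    return json.dumps(claim, sort_keys=True).encode("utf-8")


def make_manifest(asset: bytes, assertions: dict) -> dict:
    """Bind metadata assertions to the asset via its SHA-256 hash."""
    claim = {
        "asset_sha256": hashlib.sha256(asset).hexdigest(),
        "assertions": assertions,
    }
    signature = hmac.new(SECRET, _payload(claim), hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify(asset: bytes, manifest: dict) -> bool:
    """Check that neither the claim nor the asset changed since signing."""
    claim = manifest["claim"]
    expected = hmac.new(SECRET, _payload(claim), hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    hash_ok = claim["asset_sha256"] == hashlib.sha256(asset).hexdigest()
    return signature_ok and hash_ok


# Made-up asset bytes and assertion values.
manifest = make_manifest(b"pixels", {"creator": "Example Creator", "location": "Example City"})
print(verify(b"pixels", manifest))
print(verify(b"edited pixels", manifest))
```

In this toy version the first check succeeds and the second fails, because the edited bytes no longer match the hash recorded in the claim.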

The full press release from C2PA follows:

SAN FRANCISCO, Calif. — September 1, 2021 — Today, the Coalition for Content Provenance and Authenticity (C2PA), a Joint Development Foundation project established to scale trust in online content, released its content provenance specifications – in draft form – for community review and feedback. Driven by a commitment to tackle online disinformation, the C2PA’s technical specifications are designed to be an open standard that will allow publishers, creators and consumers to trace the origin and evolution of a piece of media, including images, videos, audio and documents.

“C2PA was established to accelerate progress toward the broad adoption of content provenance standards that will enable a future of verifiable integrity in media,” said Andrew Jenks, C2PA Chair. “The release of this draft is an exciting and important milestone, representing a diverse and collaborative effort across industries to protect people from fabricated and manipulated media and drive transparency in the origin and history of content.”

Combatting online content fraud at scale requires transparency and an accessible and open approach that enables consumers to make informed decisions about what has been modified and what has not. The C2PA was launched in February 2021 with founding members Adobe, Arm, BBC, Intel, Microsoft and Truepic with the goal of developing an end-to-end open technical standard to address the rise of disinformation efforts leveraging tools for media fabrication and manipulations. The effort has expanded, bringing in additional members including Twitter, WITNESS, Akamai and Fastly.

Over the past six months, the C2PA has worked with industry experts and partner organizations, including the Project Origin Alliance and the Content Authenticity Initiative (CAI), to develop a standard for digital provenance that provides platforms with a method to define descriptive metadata, what information is associated with each type of asset, how that information is presented and stored, and how evidence of tampering can be identified. This group of contributors spans a spectrum of industries including social media, news publishing, software technology, semiconductors and more. All have contributed to building these new technical specifications through a process of gathering requirements, considerations of scenarios and technical design.

Following the review period, the C2PA working groups will finalize the 1.0 version of the technical standards and once published, the group will pursue adoption, prototyping and communication through coalition members and other external stakeholders, providing the foundation for a system of verifiable provenance on the internet.

“The power of C2PA’s open standard will rely on its broad adoption by producers and custodians of content, which makes this review phase so critical to the development and finalization of the specifications,” said Jenks. “This is why we are making the draft specification available to the public. We encourage rigorous review and feedback across industries, civil society, academia, and the general public to ensure the C2PA standards reflect the complex nature of this effort.”

The draft specification can be accessed through the C2PA website, and comments will be accepted through a web submission form and GitHub until November 30, 2021.

C2PA is accepting new members. To join, visit https://c2pa.org/membership/.

About C2PA

The Coalition for Content Provenance and Authenticity (C2PA) is an open, technical standards body addressing the prevalence of misleading information online through the development of technical standards for certifying the source and history (or provenance) of media content. C2PA is a Joint Development Foundation project, formed through an alliance between Adobe, Arm, BBC, Intel, Microsoft and Truepic. For more information, visit c2pa.org.

Last week, Brendan Quinn and Jennifer Parrucci presented about IPTC NewsCodes at the EBU’s Metadata Developer Network workshop.

In the session, Brendan Quinn of IPTC and Jennifer Parrucci of The New York Times presented IPTC’s NewsCodes vocabularies, describing what they are, how they are maintained and how they can be used, with a look into the future and a focus on IPTC Media Topics, our leading vocabulary for topics of news content. The session was originally presented at the EBU Metadata Developer Network workshop, held online from 25 to 27 May 2021.

The full presentation slides are embedded below. A video recording of the session, including questions and answers, is available to EBU members via the EBU MDN website.
