The 2024 IPTC Photo Metadata Conference takes place as a webinar on Tuesday 7th May from 1500 – 1800 UTC. Speakers hail from Adobe (makers of Photoshop), Camera Bits (makers of Photo Mechanic), Numbers Protocol, Colorhythm, vAIsual and more.

First off, IPTC Photo Metadata Working Group co-leads, David Riecks and Michael Steidl, will give an overview of what has been happening in the world of photo metadata since our last Conference in November 2022, including IPTC’s work on metadata for AI labelling, “do not train” signals, provenance, diversity and accessibility.

Next, a panel session on “AI and Image Authenticity: Bringing trust back to photography?” discusses approaches to the problem of verifying trust and credibility for online images. The panel features C2PA lead architect Leonard Rosenthol (Adobe), Dennis Walker (Camera Bits), Neal Krawetz (FotoForensics) and Bofu Chen (Numbers Protocol).

Next, James Lockman of Adobe presents the Custom Metadata Panel, which is a plugin for Photoshop, Premiere Pro and Bridge that allows for any XMP-based metadata schema to be used – including IPTC Photo Metadata and IPTC Video Metadata Hub. James will give a demo and talk about future ideas for the tool.

Finally, a panel on “AI-Powered Asset Management: Where does metadata fit in?” discusses the relevance of metadata in digital asset management systems in an age of AI. Speakers include Nancy Wolff (Cowan, DeBaets, Abrahams & Sheppard, LLP), Serguei Fomine (IQPlug), Jeff Nova (Colorhythm) and Mark Milstein (vAIsual).

The full agenda and links to register for the event are available at https://iptc.org/events/photo-metadata-conference-2024/

Registration is free and open to anyone who is interested.

See you there on Tuesday 7th May!

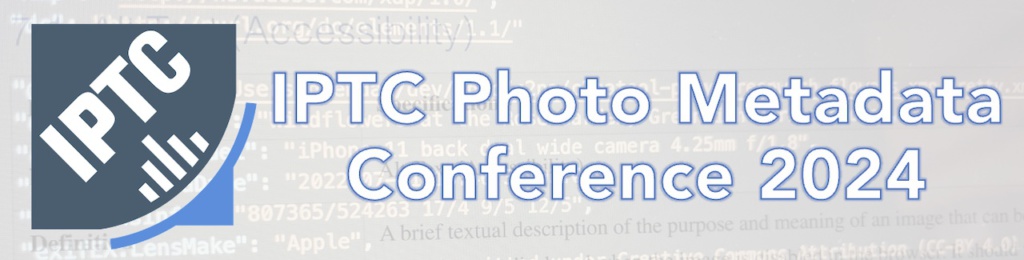

Google has added Digital Source Type support to Google Merchant Center, enabling images created by generative AI engines to be flagged as such in Google products such as Google Search, Maps, YouTube and Google Shopping.

In a new support post, Google reminds merchants who wish their products to be listed in Google search results and other products that they should not strip embedded metadata, particularly the Digital Source Type field which can be used to signal that content was created by generative AI.

We at the IPTC fully endorse this position. We have been saying for years that website publishers should not strip metadata from images. This should also include tools for maintaining online product inventories, such as Magento and WooCommerce. We welcome contact from developers who wish to learn more about how they can preserve metadata in their images.

Here’s the full text of Google’s recommendation:

Yesterday Nick Clegg, Meta’s President of Global Affairs, announced that Meta would be using IPTC embedded photo metadata to label AI-Generated Images on Facebook, Instagram and Threads.

Meta already uses the IPTC Photo Metadata Standard’s Digital Source Type property to label images generated by its platform. The image to the right was generated using Imagine with Meta AI, Meta’s image generation tool. Viewing the image’s metadata with the IPTC’s Photo Metadata Viewer tool shows that the Digital Source Type field is set to “trainedAlgorithmicMedia” as recommended in IPTC’s Guidance on metadata for AI-generated images.

Clegg said that “we do several things to make sure people know AI is involved, including putting visible markers that you can see on the images, and both invisible watermarks and metadata embedded within image files. Using both invisible watermarking and metadata in this way improves both the robustness of these invisible markers and helps other platforms identify them.”

This approach of both direct and indirect disclosure is in line with the Partnership on AI’s Best Practices on signalling the use of generative AI.

Also, Meta are building recognition of this metadata into their tools: “We’re building industry-leading tools that can identify invisible markers at scale – specifically, the “AI generated” information in the C2PA and IPTC technical standards – so we can label images from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock as they implement their plans for adding metadata to images created by their tools.”

We have previously shared the news that Google, Microsoft, Adobe, Midjourney and Shutterstock will use IPTC metadata in their generated images, either directly in the IPTC Photo Metadata block or using the IPTC Digital Source Type vocabulary as part of a C2PA assertion. OpenAI has just announced that they have started using IPTC via C2PA metadata to signal the fact that images from DALL-E are generated by AI.

A call for platforms to stop stripping image metadata

We at the IPTC agree that this is a great step towards end-to-end support of indirect disclosure of AI-generated content.

As the Meta and OpenAI posts point out, it is possible to strip out both IPTC and C2PA metadata either intentionally or accidentally, so this is not a solution to all problems of content credibility.

Currently, one of the main ways metadata is stripped from images is when they are uploaded to Facebook or other social media platforms. So with this step, we hope that Meta’s platforms will stop stripping metadata from images when they are shared – not just the fields about generative AI, but also the fields regarding accessibility (alt text), copyright, creator’s rights and other information embedded in images by their creators.

Video next?

Meta’s post indicates that this type of metadata isn’t commonly used for video or audio files. We agree, but to be ahead of the curve, we have added Digital Source Type support to IPTC Video Metadata Hub so videos can be labelled in the same way.

We will be very happy to work with Meta and other platforms on making sure IPTC’s standards are implemented correctly in images, videos and other areas.

Made with Bing Image Creator. Powered by DALL-E.

Following the IPTC’s recent announcement that Rights holders can exclude images from generative AI with IPTC Photo Metadata Standard 2023.1, the IPTC Video Metadata Working Group is very happy to announce that the same capability now exists for video, through IPTC Video Metadata Hub version 1.5.

The “Data Mining” property has been added to this new version of IPTC Video Metadata Hub, which was approved by the IPTC Standards Committee on October 4th, 2023. Because it uses the same XMP identifier as the Photo Metadata Standard property, the existing support in the latest versions of ExifTool will also work for video files.

Therefore, adding metadata to a video file that says it should be excluded from Generative AI indexing is as simple as running this command in a terminal window:

exiftool -XMP-plus:DataMining="Prohibited for Generative AI/ML training" example-video.mp4

(Please note that this will only work in ExifTool version 12.67 and above, i.e. any version of ExifTool released after September 19, 2023)

The possible values of the Data Mining property are listed below:

| PLUS URI | Description (use exactly this text with ExifTool) |
| --- | --- |
| http://ns.useplus.org/ldf/vocab/DMI-UNSPECIFIED | Unspecified – no prohibition defined |
| http://ns.useplus.org/ldf/vocab/DMI-ALLOWED | Allowed |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-AIMLTRAINING | Prohibited for AI/ML training |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-GENAIMLTRAINING | Prohibited for Generative AI/ML training |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-EXCEPTSEARCHENGINEINDEXING | Prohibited except for search engine indexing |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED | Prohibited |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEECONSTRAINT | Prohibited, see plus:OtherConstraints |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEEEMBEDDEDRIGHTSEXPR | Prohibited, see iptcExt:EmbdEncRightsExpr |
| http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEELINKEDRIGHTSEXPR | Prohibited, see iptcExt:LinkedEncRightsExpr |
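For batch workflows, the ExifTool command shown earlier can be assembled programmatically. The following is a minimal Python sketch rather than an official IPTC or ExifTool utility. It assumes ExifTool 12.67 or later is installed and on the PATH, and the names `DATA_MINING_VALUES` and `build_exiftool_command` are hypothetical. It reuses only the `-XMP-plus:DataMining` tag and a subset of the description texts given in this post; the remaining values are left out for brevity.

```python
import shlex

# A subset of the description texts listed in this post (hypothetical constant name).
# ExifTool expects the description text exactly as written.
DATA_MINING_VALUES = (
    "Allowed",
    "Prohibited for AI/ML training",
    "Prohibited for Generative AI/ML training",
    "Prohibited except for search engine indexing",
    "Prohibited",
)


def build_exiftool_command(path, value):
    """Return the argument list that sets the Data Mining property on one file."""
    if value not in DATA_MINING_VALUES:
        raise ValueError(f"unknown Data Mining value: {value!r}")
    return ["exiftool", f"-XMP-plus:DataMining={value}", path]


if __name__ == "__main__":
    cmd = build_exiftool_command(
        "example-video.mp4", "Prohibited for Generative AI/ML training"
    )
    # Print the command rather than running it; to execute it, pass the list
    # to subprocess.run(cmd, check=True).
    print(shlex.join(cmd))
```

Building the command as a list of arguments avoids shell quoting problems with the spaces and slashes in the value text.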

A corresponding new property “Other Constraints” has also been added to Video Metadata Hub v1.5. This property allows plain-text human-readable constraints to be placed on the video when using the “Prohibited, see plus:OtherConstraints” value of the Data Mining property.

The Video Metadata Hub User Guide and Video Metadata Hub Generator have also been updated to include the new Data Mining property added in version 1.5.

We look forward to seeing video tools (and particularly crawling engines for generative AI training systems) implement the new properties.

Please feel free to discuss the new version of Video Metadata Hub on the public iptc-videometadata discussion group, or contact IPTC via the Contact us form.

The IPTC NewsML-G2 Working Group and the News Architecture Working Group are happy to announce the release of the latest version of our flagship XML-based news syndication standard: NewsML-G2 v2.33.

Changes in the latest version are small but significant. We have added support for the Digital Source Type property, which is already being used in IPTC’s sister standards IPTC Photo Metadata Standard, IPTC Video Metadata Hub and ninjs. This property can be used to declare when content has been created or modified by software, including by Generative AI engines.

Examples of other possible values for the digital source type property using the recommended IPTC Digital Source Type NewsCodes vocabulary are:

| ID (in QCode format) | Name | Example |
| --- | --- | --- |
| digsrctype:digitalCapture | Original digital capture sampled from real life: The digital media is captured from a real-life source using a digital camera or digital recording device | Digital video taken using a digital film, video or smartphone camera |
| digsrctype:negativeFilm | Digitised from a negative on film: The digital image was digitised from a negative on film or any other transparent medium | Digital photo scanned from a photographic negative |
| digsrctype:minorHumanEdits | Original media with minor human edits: Minor augmentation or correction by a human, such as a digitally-retouched photo used in a magazine | Original audio with minor edits (e.g. to eliminate breaks) |
| digsrctype:algorithmicallyEnhanced | Algorithmic enhancement: Minor augmentation or correction by algorithm | A photo that has been digitally enhanced using a mechanism such as Google Photos’ “denoise” feature |
| digsrctype:dataDrivenMedia | Data-driven media: Digital media representation of data via human programming or creativity | Textual weather report generated by code using readings from weather detection instruments |
| digsrctype:trainedAlgorithmicMedia | Trained algorithmic media: Digital media created algorithmically using a model derived from sampled content | A “deepfake” video using a combination of a real actor and a trained model |

The above list is a subset of the full list of recommended values. See the full IPTC Digital Source Type NewsCodes vocabulary for the complete list.

Guidance on using Digital Source Type

The IPTC Photo Metadata User Guide contains a section on Guidance for using Digital Source Type, with examples for various types of media: images, video, audio and text. The examples referenced in this guide can also apply to NewsML-G2 content.

Where Digital Source Type can be used in NewsML-G2 documents

The new <digitalSourceType> property can be added to the contentMeta section of any G2 NewsItem, PackageItem, KnowledgeItem, ConceptItem or PlanningItem to describe the digital source type of an item in its entirety.

It can also be used in the partMeta section of any G2 NewsItem, PackageItem or KnowledgeItem to describe the digital source type of a part of the item. In this way, content such as a video that includes some captured shots and AI-generated shots can be fully described using NewsML-G2.
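As an illustration of the per-part usage described above, here is a minimal Python sketch that builds a skeleton item with one `<digitalSourceType>` per part. It is not a complete or validated NewsML-G2 document: required elements such as itemMeta are omitted, and the `qcode` and `partid` attribute usage, the part identifiers and the helper names are assumptions that should be checked against the NewsML-G2 2.33 specification and example documents.

```python
import xml.etree.ElementTree as ET

NAR_NS = "http://iptc.org/std/nar/2006-10-01/"  # NewsML-G2 XML namespace


def g2(tag):
    """Qualify a tag name with the NewsML-G2 namespace."""
    return f"{{{NAR_NS}}}{tag}"


def build_mixed_video_item():
    """Build a skeleton newsItem with one digitalSourceType per part."""
    item = ET.Element(g2("newsItem"))
    # Hypothetical part identifiers: one captured shot and one AI-generated shot.
    parts = [
        ("shot1", "digsrctype:digitalCapture"),
        ("shot2", "digsrctype:trainedAlgorithmicMedia"),
    ]
    for part_id, qcode in parts:
        # Attribute names here are assumptions; see the lead-in above.
        part_meta = ET.SubElement(item, g2("partMeta"), {"partid": part_id})
        ET.SubElement(part_meta, g2("digitalSourceType"), {"qcode": qcode})
    return item


if __name__ == "__main__":
    # Serialise with NewsML-G2 as the default namespace for readability.
    ET.register_namespace("", NAR_NS)
    print(ET.tostring(build_mixed_video_item(), encoding="unicode"))
```

The same `digitalSourceType` child could be placed in contentMeta instead when a single value describes the whole item.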

Find out more about NewsML-G2 v2.33

All information related to NewsML-G2 2.33 is at https://iptc.org/std/NewsML-G2/2.33/.

The NewsML-G2 Specification document has been updated to cover the new version 2.33.

Example instance documents are at https://iptc.org/std/NewsML-G2/2.33/examples/.

Full XML Schema documentation is located at https://iptc.org/std/NewsML-G2/2.33/specification/XML-Schema-Doc-Power/

XML source documents and unit tests are hosted in the public NewsML-G2 GitHub repository.

The NewsML-G2 Generator tool has also been updated to produce NewsML-G2 2.33 files using the version 38 catalog.

For any questions or comments, please contact us via the IPTC Contact Us form or post to the iptc-newsml-g2@groups.io mailing list. IPTC members can ask questions at the weekly IPTC News Architecture Working Group meetings.

Updated in June 2024 to include an image containing the new metadata property

Many image rights owners noticed that their assets were being used as training data for generative AI image creators, and asked the IPTC for a way to express that such use is prohibited. The new version 2023.1 of the IPTC Photo Metadata Standard now provides means to do this: a field named “Data Mining” and a standardised list of values, adopted from the PLUS Coalition. These values can show that data mining is prohibited or allowed either in general, for AI or Machine Learning purposes or for generative AI/ML purposes. The standard was approved by IPTC members on 4th October 2023 and the specifications are now publicly available.

Because these data fields, like all IPTC Photo Metadata, are embedded in the file itself, the information will be retained even after an image is moved from one place to another, for example by syndicating an image or moving an image through a Digital Asset Management system or Content Management System used to publish a website. (Of course, this requires that the embedded metadata is not stripped out by such tools.)

Created in close collaboration with PLUS Coalition, the publication of the new properties comes after the conclusion of a public draft review period earlier this year. The properties are defined as part of the PLUS schema and incorporated into the IPTC Photo Metadata Standard in the same way that other properties such as Copyright Owner have been specified.

The new properties are now finalised and published. Specifically, the new properties are as follows:

- Data Mining: a field with a value from a controlled value vocabulary. Values come from the PLUS Data Mining vocabulary, reproduced here:

  - http://ns.useplus.org/ldf/vocab/DMI-UNSPECIFIED (Unspecified – no prohibition defined)

  - http://ns.useplus.org/ldf/vocab/DMI-ALLOWED (Allowed)

  - http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-AIMLTRAINING (Prohibited for AI/ML training)

  - http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-GENAIMLTRAINING (Prohibited for Generative AI/ML training)

  - http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-EXCEPTSEARCHENGINEINDEXING (Prohibited except for search engine indexing)

  - http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED (Prohibited)

  - http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEECONSTRAINT (Prohibited, see Other Constraints property)

  - http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEEEMBEDDEDRIGHTSEXPR (Prohibited, see Embedded Encoded Rights Expression property)

  - http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEELINKEDRIGHTSEXPR (Prohibited, see Linked Encoded Rights Expression property)

- Other Constraints: Also defined in the PLUS specification, this text property is to be used when the Data Mining property has the value “http://ns.useplus.org/ldf/vocab/DMI-PROHIBITED-SEECONSTRAINT”. It can specify, in a human-readable form, what other constraints may need to be followed to allow Data Mining, such as “Generative AI training is only allowed for academic purposes” etc.
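To show how a crawler for a generative AI training system might act on these values, here is a minimal Python sketch. The function name, the three outcome labels and the grouping of values are our own illustrative interpretation, not part of the IPTC or PLUS specifications. In particular, treating “Prohibited for AI/ML training” as also covering generative AI training, and sending unknown values to manual review, are assumptions. The sketch does not account for regional laws that may overrule the embedded value.

```python
PLUS_VOCAB = "http://ns.useplus.org/ldf/vocab/"

# Terms under which generative AI/ML training is treated as prohibited outright.
GENAI_PROHIBITED = {
    "DMI-PROHIBITED",
    "DMI-PROHIBITED-AIMLTRAINING",
    "DMI-PROHIBITED-GENAIMLTRAINING",
    "DMI-PROHIBITED-EXCEPTSEARCHENGINEINDEXING",
}

# Terms that carry no prohibition. This is not the same as a grant of permission.
NO_PROHIBITION = {"DMI-UNSPECIFIED", "DMI-ALLOWED"}


def genai_training_status(data_mining_uri):
    """Classify a Data Mining value as 'prohibited', 'review' or 'no-prohibition'."""
    if not data_mining_uri:
        # Property absent: no embedded prohibition was found.
        return "no-prohibition"
    if not data_mining_uri.startswith(PLUS_VOCAB):
        # Not a PLUS vocabulary URI: be conservative and ask a human.
        return "review"
    term = data_mining_uri[len(PLUS_VOCAB):]
    if term in GENAI_PROHIBITED:
        return "prohibited"
    if term in NO_PROHIBITION:
        return "no-prohibition"
    # Covers the "see Other Constraints / rights expression" values and
    # any term this sketch does not recognise.
    return "review"


if __name__ == "__main__":
    print(genai_training_status(PLUS_VOCAB + "DMI-PROHIBITED-SEECONSTRAINT"))
```

A “review” result is where the Other Constraints text, or an encoded rights expression, would need to be read before the asset is used.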

The IPTC and PLUS Coalition wish to draw users’ attention to the following notice included in the specification:

Regional laws applying to an asset may prohibit, constrain, or allow data mining for certain purposes (such as search indexing or research), and may overrule the value selected for this property. Similarly, the absence of a prohibition does not indicate that the asset owner grants permission for data mining or any other use of an asset.

The prohibition “Prohibited except for search engine indexing” only permits data mining by search engines available to the public to identify the URL for an asset and its associated data (for the purpose of assisting the public in navigating to the URL for the asset), and prohibits all other uses, such as AI/ML training.

The IPTC encourages all photo metadata software vendors to incorporate the new properties into their tools as soon as possible, to support the needs of the photo industry.

ExifTool, the command-line tool for accessing and manipulating metadata in image files, already supports the new properties. Support was added in the ExifTool version 12.67 release, which is available for download on exiftool.org.

The new version of the specification can be accessed at https://www.iptc.org/std/photometadata/specification/IPTC-PhotoMetadata or from the navigation menu on iptc.org. The IPTC Get Photo Metadata tool and IPTC Photo Metadata Reference images have been updated to use the new properties.

The IPTC and PLUS Coalition wish to thank many IPTC and PLUS member organisations and others who took part in the consultation process around these changes. For further information, please contact IPTC using the Contact Us form.

The IPTC NewsCodes Working Group has approved an addition to the Digital Source Type NewsCodes vocabulary.

The new term, “Composite with Trained Algorithmic Media”, is intended to handle situations where the “synthetic composite” term is not specific enough, for example a composite that is specifically made using an AI engine’s “inpainting” or “outpainting” operations.

The full Digital Source Type vocabulary can be accessed from https://cv.iptc.org/newscodes/digitalsourcetype. It can be downloaded in NewsML-G2 (XML) or SKOS (RDF/XML, Turtle or JSON-LD) format to be integrated into content management and digital asset management systems.

The new term can be used immediately with any tool or standard that supports IPTC’s Digital Source Type vocabulary, including the C2PA specification, the IPTC Photo Metadata Standard and IPTC Video Metadata Hub.

Information on the new term will soon be added to IPTC’s Guidance on using Digital Source Type in the IPTC Photo Metadata User Guide.

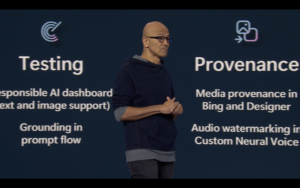

Following the recent announcement of Google’s signalling of generative AI content, and the announcement from Midjourney and Shutterstock the day after, Microsoft has now announced that it will also be signalling the provenance of content created by its generative AI tools, such as Bing Image Creator.

Microsoft’s efforts go one step beyond those of Google and Midjourney, because they are adding the image metadata in a way that can be verified using digital certificates. This means that not only is the signal added to the image metadata, but verifiable information is added on who added the metadata and when.

As TechCrunch puts it, “Using cryptographic methods, the capabilities, scheduled to roll out in the coming months, will mark and sign AI-generated content with metadata about the origin of the image or video.”

The system uses the specification created by the Coalition for Content Provenance and Authenticity (C2PA), a joint project of Project Origin and the Content Authenticity Initiative.

The 1.3 version of the C2PA Specification specifies how a C2PA Action can be used to signal provenance of Generative AI content. This uses the IPTC DigitalSourceType vocabulary – the same vocabulary used by the Google and Midjourney implementations.
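As a rough illustration, the payload of such an action could look like the structure built by the Python sketch below. The field names (`actions`, `action`, `softwareAgent`, `digitalSourceType`) and the `c2pa.created` action name follow our reading of the C2PA specification and should be verified against it. The function name and the software agent string are hypothetical. The sketch only produces unsigned JSON; a real C2PA manifest must be created and signed with a C2PA implementation.

```python
import json

TRAINED_ALGORITHMIC_MEDIA = (
    "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
)


def generative_ai_actions(software_agent):
    """Return an actions payload declaring that the asset was created by generative AI."""
    return {
        "actions": [
            {
                # Assumed action name for newly created assets; check the spec.
                "action": "c2pa.created",
                "softwareAgent": software_agent,
                "digitalSourceType": TRAINED_ALGORITHMIC_MEDIA,
            }
        ]
    }


if __name__ == "__main__":
    # "Example Image Generator" is a placeholder, not a real product.
    print(json.dumps(generative_ai_actions("Example Image Generator"), indent=2))
```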

This follows IPTC’s guidance on how to use the DigitalSourceType property, published earlier this month.

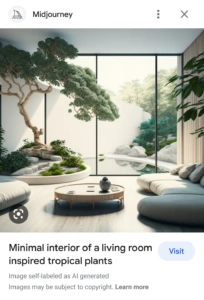

As a follow-up to yesterday’s news on Google using IPTC metadata to mark AI-generated content, we are happy to announce that generative AI tools from Midjourney and Shutterstock will both be adopting the same guidelines.

According to a post on Google’s blog, Midjourney and Shutterstock will be using the same mechanism as Google – that is, using the IPTC “Digital Source Type” property to embed a marker that the content was created by a generative AI tool. Google will be detecting this metadata and using it to show a signal in search results that the content has been AI-generated.
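To give a feel for what detecting this marker can involve, here is a minimal Python sketch that scans raw file bytes for IPTC Digital Source Type URIs. It is a heuristic for illustration only and says nothing about how Google or any other platform implements detection. It does not parse XMP, it will miss values stored in compressed or otherwise encoded form, and the function names are hypothetical. A production system would use a proper metadata library or ExifTool.

```python
import re

# Matches IPTC Digital Source Type URIs (http or https) anywhere in a byte string.
DST_URI = re.compile(
    rb"https?://cv\.iptc\.org/newscodes/digitalsourcetype/([A-Za-z]+)"
)


def find_digital_source_types(data):
    """Return the set of Digital Source Type term IDs found in raw file bytes."""
    return {match.group(1).decode("ascii") for match in DST_URI.finditer(data)}


def looks_ai_generated(data):
    """True if the bytes mention the trainedAlgorithmicMedia term."""
    return "trainedAlgorithmicMedia" in find_digital_source_types(data)


if __name__ == "__main__":
    # A hand-written stand-in for part of an XMP packet, not a real image file.
    sample = (
        b'<rdf:Description Iptc4xmpExt:DigitalSourceType='
        b'"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"/>'
    )
    print(find_digital_source_types(sample), looks_ai_generated(sample))
```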

A step towards implementing responsible practices for AI

We at IPTC are very excited to see this concrete implementation of our guidance on metadata for synthetic media.

We also see it as a real-world implementation of the guidelines on Responsible Practices for Synthetic Media from the Partnership on AI, and of the AI Ethical Guidelines for the Re-Use and Production of Visual Content from CEPIC, the alliance of European picture agencies. Both of these best practice guidelines emphasise the need for transparency in declaring content that was created using AI tools.

The phrase from the CEPIC transparency guidelines is “Inform users that the media or content is synthetic, through labelling or cryptographic means, when the media created includes synthetic elements.”

The equivalent recommendation from the Partnership on AI guidelines is called indirect disclosure:

“Indirect disclosure is embedded and includes, but is not limited to, applying cryptographic provenance to synthetic outputs (such as the C2PA standard), applying traceable elements to training data and outputs, synthetic media file metadata, synthetic media pixel composition, and single-frame disclosure statements in videos”

Here is a simple, concrete way of implementing these disclosure / transparency guidelines using existing metadata standards.

Moving towards a provenance ecosystem

IPTC is also involved in efforts to embed transparency and provenance metadata in a way that can be protected using cryptography: C2PA, the Content Authenticity Initiative, and Project Origin.

C2PA provides a way of declaring the same “Digital Source Type” information in a more robust way, that can provide mechanisms to retrieve metadata even after the image was manipulated or after the metadata was stripped from the file.

However, implementing C2PA technology is more complicated, and involves obtaining and managing digital certificates, among other things. Also, C2PA technology has not yet been implemented by platforms or search engines on the display side.

In the short term, AI content creation systems can use this simple mechanism to add disclosure information to their content.

The IPTC is happy to help any other parties to implement these metadata signals: please contact IPTC via the Contact Us form.

The IPTC has updated its Photo Metadata User Guide to include some best practice guidelines for how to use embedded metadata to signal “synthetic media” content that was created by generative AI systems.

After our work in 2022 and the draft vocabulary to support synthetic media, the IPTC NewsCodes Working Group, Video Metadata Working Group and Photo Metadata Working Group worked together with several experts and organisations to come up with a definitive list of “digital source types” that includes various types of machine-generated content, or hybrid human and machine-generated media.

Since publishing the vocabulary, the work has been picked up by the Coalition for Content Provenance and Authenticity (C2PA) via the use of digitalSourceType in Actions and in the IPTC Photo and Video Metadata assertion. But the primary use case is for adding metadata to image and video files.

Here is a direct link to the new section on Guidance for using Digital Source Type, including examples for how the various terms can be used to describe media created in different formats – audio, video, images and even text.

IPTC recommends that software creating images using trained AI algorithms adds the “Digital Source Type” value of “trainedAlgorithmicMedia” to the XMP data packet in generated image and video files. Alternatively, it may be included in a C2PA manifest as described in the IPTC assertion documentation in the C2PA specification.

The official URL for the full vocabulary is http://cv.iptc.org/newscodes/digitalsourcetype, so the complete URI for the recommended Trained Algorithmic Media term is http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia.
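The relationship between a term ID and its complete URI can be sketched in a few lines of Python. The helper names are hypothetical, and the `digsrctype` QCode prefix is the one used in IPTC's QCode examples for this vocabulary; systems that declare a different prefix in their catalog would need to adjust it.

```python
DST_BASE = "http://cv.iptc.org/newscodes/digitalsourcetype/"
QCODE_PREFIX = "digsrctype"  # prefix assumed from IPTC's QCode examples


def term_uri(term_id):
    """Build the complete URI for a Digital Source Type term ID."""
    return DST_BASE + term_id


def qcode_to_uri(qcode):
    """Expand a QCode such as 'digsrctype:digitalCapture' into its full URI."""
    prefix, _, term_id = qcode.partition(":")
    if prefix != QCODE_PREFIX or not term_id:
        raise ValueError(f"not a Digital Source Type QCode: {qcode!r}")
    return term_uri(term_id)


if __name__ == "__main__":
    print(term_uri("trainedAlgorithmicMedia"))
    print(qcode_to_uri("digsrctype:digitalCapture"))
```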

Other terms in the vocabulary include:

- Composite with synthetic elements – https://cv.iptc.org/newscodes/digitalsourcetype/compositeSynthetic – covering a composite image that contains some synthetic elements and some elements captured with a camera;

- Digital Art – https://cv.iptc.org/newscodes/digitalsourcetype/digitalArt – covering art created by a human using digital tools such as a mouse or digital pencil, or computer-generated imagery (CGI) video

- Virtual recording – https://cv.iptc.org/newscodes/digitalsourcetype/virtualRecording – a recording of a virtual event which may or may not contain synthetic elements, such as a Fortnite game or a Zoom meeting

- and several other options – see the full list with examples in the IPTC Photo Metadata User Guide.

Of course, the original digital source type values covering photographs taken on a digital camera or phone (digitalCapture), scans from negatives (negativeFilm), and images digitised from print (print) are also valid and may continue to be used. We have, however, retired the term “softwareImage”, which is now deemed to be too generic. We recommend using one of the newer terms in its place.

If you are considering implementing this guidance in AI image generation software, we would love to hear about it so we can offer advice and tell others. Please contact us using the IPTC contact form.


![Preserving metadata tags for AI-generated images in Merchant Center

February 2024

If you’re using AI-generated images in Merchant Center, Google requires that you preserve any metadata tags which indicate that the image was created using generative AI in the original image file.

Don't remove embedded metadata tags such as trainedAlgorithmicMedia from such images. All AI-generated images must contain the IPTC DigitalSourceType trainedAlgorithmicMedia tag. Learn more about IPTC photo metadata.

These requirements apply to the following image attributes in Merchant Center Classic and Merchant Center Next:

Image link [image_link]

Additional image link [additional_image_link]

Lifestyle image link [lifestyle_image_link]

Learn more about product data specifications.](https://iptc.org/wp-content/uploads/2024/02/Screenshot-2024-02-20-at-09.56.52-1024x724.png)