AI in the Newsroom: A High-Level Panel

Brendan Quinn, Managing Director of the IPTC, joined a high-level panel to explore the transformative impact of AI on the global media landscape. Also on stage were Peter Kropsch, CEO of Deutsche Presse-Agentur (dpa), and Earl J. Wilkinson, CEO of the International News Media Association (INMA). The session was moderated by Najlaa Habriri, Senior Editor and Political Commentator at Asharq Al-Awsat, a Saudi newspaper based in London.

Key Discussion Points

The wide-ranging conversation addressed how news organisations can navigate the “smart media” era:

- Content Control: Leveraging technical standards to help publishers retain rights and control over their output.

- Editorial Integrity: Integrating AI into workflows while safeguarding accuracy, accountability, and editorial responsibility.

- The Modern Newsroom: How hybrid roles, blending editorial, data, and audience expertise, are reshaping recruitment and staff development.

International representation

The forum featured many speakers from the international media industry, including Ben Smith (Semafor), Tony Gallagher (The Times), Karen Elliott House (formerly of The Wall Street Journal), Julie Pace (Associated Press) and Vincent Peyrègne (formerly of WAN-IFRA), alongside prominent local media leaders from across the Middle East.

Following on from these events, the IPTC is proud to announce that the next Media Provenance Summit will take place at the Reuters offices in Toronto, Canada, on April 16th 2026.

Bringing C2PA implementation experts together from media organisations in North America and beyond, the Media Provenance Summit will look at real-world implementation of C2PA media provenance technologies in newsrooms.

Agenda

Topics will include:

- Best-practice workflows for image and video production, from capture and ingest through to publishing and distribution

- Understanding how to use organisational identity certificates and tools that conform to the C2PA Conformance Program to achieve full compliance with the C2PA specification, enabling visibility of both C2PA conformance and publisher identity in C2PA validators

- Adopting and integrating software and hardware that incorporates C2PA signing into newsroom systems and workflows

Attendees and speakers

Attendees will include senior technology, editorial and product professionals from media organisations, global news agencies, technology suppliers (both hardware and software), service providers and industry bodies. For comparison, the summit in Bergen in September 2025 had over 80 attendees from the UK, Europe, Canada, USA, Australia and Japan.

IPTC membership is not required to attend the Media Provenance Summit.

The event is being held the day after the members-only IPTC Spring Meeting 2026, which will be held at the same venue from April 13 – 15. Attendees of the IPTC Spring Meeting will include technology professionals from Associated Press, Bloomberg, New York Times, Reuters, BBC and many more leading media organisations from around the world. Many of these attendees are expected to also attend the Media Provenance Summit.

Request an invitation

To be considered for an invitation, please fill out the Expression of Interest form. Attendees will be selected to ensure a productive balance of publishers, broadcasters, tool vendors and consultants.

Selected attendees will be notified by the end of February, to give sufficient notice for planning travel arrangements.

IPTC’s Managing Director Brendan Quinn spoke at the event Breaking the News? Global perspectives on the future of journalism in the age of AI in Berlin on Wednesday 28th January, an event organised by Deutsche Welle Akademie, an arm of IPTC member Deutsche Welle.

Barbara Massing, Director General, Deutsche Welle gave the opening presentation where she emphasised that all news organisations depended on earning, and keeping, the trust of their audience: “Trust is not a given. It must be earned. Every single day.”

Reem Alabali Radovan, Germany’s Federal Minister for Economic Cooperation and Development, gave her thoughts on the importance of media companies to global democracy.

Courtney Radsch of the Open Markets Institute gave a keynote presentation in which she encouraged media organisations to hold strong against the narrative pushed by AI vendors, asking them not to give in to the jargon of the industry: AI tools do not have “hallucinations”; they make “fabrications.”

IPTC’s Brendan Quinn spoke on a panel on the relationship between AI vendors and publishers, along with representatives from OpenAI and Cloudflare (other AI companies were invited to attend but declined the invitation). Quinn spoke about the IPTC’s AI opt-out guidelines and discussed the complicated landscape and the lack of progress in the IETF AI Preferences Working Group, as documented in a recent Open Future Foundation paper.

A report from Deutsche Welle on the event summarised the following takeaways:

- Collaboration and solidarity: Media companies only have power together

- Tech companies need to be regulated – they won’t self-regulate

- Media need a clear understanding of tech business models

- Media can and should use public-interest AI tools

- We need a better dialogue with big tech – demonisation won’t help

- Journalism must be treated as critical infrastructure, not just an industry

Thanks to Deutsche Welle Akademie for hosting the event and inviting Brendan Quinn to speak.

The survey describes various technologies that content owners and rights holders could use to express opt-in or opt-out information about whether they allow AI engines to train on their media content. It asks for our thoughts on how widely each technology has been adopted and how suitable it would be as a mechanism for expressing machine-readable opt-out preferences.

This is the first step in a multi-stage process, which will culminate in the publication by the European Commission of the final list of generally agreed TDM opt-out protocols.

We feel that the IPTC is well-suited to participate in this work for several reasons:

- IPTC has created one such mechanism (the Data Mining property of the IPTC Photo Metadata Standard, created in conjunction with the PLUS Coalition)

- IPTC has been involved in the creation of other technologies in this area, such as the W3C Community’s Text and Data Mining Reservation Protocol (TDMRep), C2PA, and the IETF’s work in the AIPrefs Working Group

- IPTC has published a guidance document for publishers on best practices for implementing AI opt-out technologies

We look forward to continuing work with the European Commission, and others, on this subject.

Defining the boundary between authentic news and synthetic content has never been more critical. In 2025, IPTC didn’t just participate in that conversation—we led it.

Through a record year of new memberships and global events spanning from Juan-les-Pins to New York, we connected thousands of professionals to the future of media technology. Whether through new AI opt-out mechanisms or robust provenance tools, our work is now empowering hundreds of the world’s leading organisations to face the challenges of tomorrow.

Here is a look at the milestones, events, and releases that defined our work in 2025.

Global Connections: A Year of Events

This year, we prioritised bringing the media community together to solve shared challenges. From exclusive member gatherings to public conferences, we held events around the world – and plan to be even more international in 2026. Our events included:

- Member Meetings: We kicked off the year with our Spring Meeting in Juan-les-Pins, France, held alongside CEPIC. It was a vital opportunity to bring IPTC members together and align with the licensed photography world. Later in the year, our Autumn Meeting was held online, allowing our global membership to convene efficiently to discuss strategy and standards updates.

- Media Provenance Series: Trust was a central theme of 2025. We partnered with leading organisations to host a series of high-impact events focused on Media Provenance:

  - Paris: IPTC participated in this event, which was held at the AFP offices and organised by AFP along with the BBC and Media Cluster Norway.

  - New York: The Content Authenticity Summit was held at the Cornell University campus. IPTC co-hosted the event in association with the Content Authenticity Initiative (CAI) and C2PA.

  - Bergen, Norway: A landmark event held in association with Media Cluster Norway, the BBC, and the EBU.

- Photo Metadata Conference: Our annual conference remains a must-attend event for the industry, this year attracting more than 200 attendees to hear from industry legends such as Tim Bray on the technical evolution of visual media.

Critical Guidelines for the AI Era

As Generative AI continues to reshape the landscape, the IPTC provided the industry with the necessary guidance to adapt.

- AI Opt-Out: We published comprehensive guidelines allowing content owners to express their machine learning and data mining preferences, ensuring publishers retain control over how their content is ingested by AI crawlers.

- Implementing C2PA: While the demand for provenance is high, the technical path can be complex. We released specific guidelines on implementing C2PA for news publishers, helping newsrooms bridge the gap between technical specification and practical workflow application.

Powering the Industry: Standards and Tools

We continued to maintain and evolve the technical backbone of the news industry. 2025 saw significant updates across our portfolio to ensure our standards remain modern and accessible.

New Standard Versions

We published updated versions of our core standards, including NewsML-G2, IPTC Video Metadata Hub, IPTC Photo Metadata Standard, and ninjs (News in JSON). We also made many updates to the IPTC NewsCodes controlled vocabularies, ensuring that our taxonomies keep pace with a rapidly changing world.

Open Source Tooling

To lower the barrier to entry for developers, we expanded our open-source offerings. This year we released a new Python module for NewsML-G2 and a WordPress plugin for C2PA, making it easier than ever for CMS developers and newsrooms to implement IPTC standards directly.

Online Tools

Tools such as the Simple Rights Service make it easier than ever for rightsholders to express complex rights statements in the form of simple URLs. And of course our Origin Verify Validator allows anyone to inspect content signed with C2PA metadata, including all of the metadata fields recommended by IPTC and showing when the publisher that signed the content is on the Origin Verified News Publishers List.

Be Part of the Future

As we look toward 2026, the intersection of AI, provenance, and metadata will only become more critical.

If you want to be part of the conversation rather than just following it, we invite you to join us. By becoming an IPTC member, you can contribute to the standards that run the global news ecosystem and network with the technical leaders of the world’s biggest media organisations.

Become a Member of IPTC and help us build the future of media standards

We would like to thank our members, Working Group leads, volunteers and invited experts for their contributions to IPTC’s vital work this year. We look forward to many more years of defining and influencing technology standards for the media and beyond.

IPTC member France Télévisions has started signing its daily news broadcasts using C2PA and FranceTV’s C2PA certificate, which is on the IPTC Origin Verified News Publisher List.

This makes FranceTV the first news provider in the world to routinely sign its daily news output with a C2PA certificate.

The work won FranceTV the EBU Technology & Innovation Award this year.

IPTC has assisted FranceTV in this work and continues to work with FranceTV along with other broadcasters and publishers on signing their content using the C2PA specification.

A dedicated page, Retrouvez nos JT certifiés (“Find our certified news programmes”), is available on FranceTV’s site franceinfo.fr, where the latest 1pm and 8pm news programmes are published with a C2PA signature. The page Pour vous informer en toute sécurité contains more information (in French) about FranceTV’s work on transparency and authenticity.

We congratulate FranceTV for their work and look forward to further collaboration in 2026 and beyond.

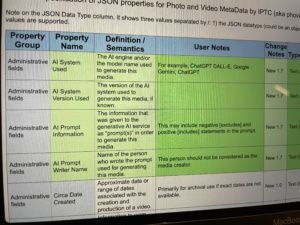

The IPTC Photo Metadata Working Group has released version 2025.1 of the IPTC Photo Metadata Standard, including properties that can be used for AI-generated content.

The new properties are:

- AI System Used

  Definition: The AI engine and/or the model name used to generate this image.

  User Note: For example, ChatGPT DALL-E, Google Gemini, ChatGPT

  Suggested help text: Enter the name of the AI system and/or the model name used to generate this image.

- AI System Version Used

  Definition: The version of the AI system used to generate this image, if known.

  Suggested help text: Enter the name or number of the version of the AI system used to generate this image.

- AI Prompt Information

  Definition: The information that was given to the generative AI service as “prompt(s)” in order to generate this image.

  User Note: This may include negative [excludes] and positive [includes] statements in the prompt.

  Suggested help text: Enter the information given to the generative AI service as “prompt(s)” in order to generate this image.

- AI Prompt Writer Name

  Definition: Name of the person who wrote the prompt used for generating this image.

  User Note: This person should not be considered as the image creator.

  Suggested help text: Enter the name of the person who wrote the prompt used for generating this image.

IPTC’s specification materials have been updated to accommodate the new properties:

- IPTC Photo Metadata Standard Specification 2025.1

- The IPTC Photo Metadata User Guide has been updated, particularly the guidance on Applying Metadata to AI-generated images

- The IPTC Photo Metadata Reference Image 2025.1 which contains values for all IPTC Photo Metadata properties, including the four properties added in version 2025.1

- IPTC Photo Metadata TechReference:

- The GetPMD photo metadata reading tool has been updated to read the new fields.

The new properties are expected to be implemented in software tools soon. The popular open-source tool ExifTool has supported the new properties since version 13.40, which was released on October 24th 2025.

Thanks to everyone who contributed to our request for comments on these new properties. We made several changes based on feedback from IPTC members and others, so your contributions were much appreciated.

For more information please contact IPTC or join our public iptc-photometadata@groups.io mailing list.

The IPTC News in JSON Working Group today released a new version in each branch of ninjs: 3.2, 2.3 and 1.7.

ninjs is IPTC’s standard for storage and distribution of news in JSON format. Using JSON means that ninjs works well for fast and simple exchange of news content for APIs, search engine platforms such as Elasticsearch and AWS OpenSearch, and for lightweight storage in databases, CMSs and cloud storage. It can be used to distribute news content in any format such as text, audio, video or images; can handle event and news coverage planning, rich metadata descriptions including relevant people, places, subjects and events; and can include packages of related news content via an associations mechanism.

The IPTC News in JSON Working Group maintains three parallel versions of ninjs so that those who implement the 1.x, 2.x or 3.x branch can all receive the latest additions in a backwards-compatible manner.

The changes adopt a new structure within the renditions array. Each rendition can now have associated resources, which allows a ninjs feed to express multiple channels or tracks within a media stream. For example, a live video stream from the EU Parliament may include several audio tracks featuring live translations in different spoken languages, a subtitle track in WebVTT or TTML format, audio description tracks and more.
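As a rough illustration of the structure described above, the Python sketch below builds a ninjs-style item in which one rendition carries several resources. Only the renditions array and the idea of per-rendition resources come from the release notes. The key names inside each resource (`role`, `language`, `contentType`, `href`), the example URLs and the helper `resources_by_role` are hypothetical and should be checked against the published schema before use.

```python
from typing import Any

# Hypothetical ninjs-style item for a multilingual live stream.
# "renditions" and "resources" follow the wording of the release notes;
# every key inside a resource is an illustrative assumption.
item: dict[str, Any] = {
    "uri": "https://example.com/news/parliament-live",
    "renditions": [
        {
            "name": "live-stream",
            "contentType": "video/mp4",
            "href": "https://example.com/streams/parliament.mp4",
            "resources": [
                {"role": "audio", "language": "en", "contentType": "audio/mp4",
                 "href": "https://example.com/streams/parliament-en.m4a"},
                {"role": "audio", "language": "fr", "contentType": "audio/mp4",
                 "href": "https://example.com/streams/parliament-fr.m4a"},
                {"role": "audio", "language": "de", "contentType": "audio/mp4",
                 "href": "https://example.com/streams/parliament-de.m4a"},
                {"role": "subtitle", "language": "en", "contentType": "text/vtt",
                 "href": "https://example.com/streams/parliament-en.vtt"},
            ],
        }
    ],
}


def resources_by_role(ninjs_item: dict[str, Any], role: str) -> list[dict[str, Any]]:
    """Collect the resources with the given (hypothetical) role across all renditions."""
    found = []
    for rendition in ninjs_item.get("renditions", []):
        for resource in rendition.get("resources", []):
            if resource.get("role") == role:
                found.append(resource)
    return found


# Languages of the audio tracks attached to the stream.
audio_languages = [r["language"] for r in resources_by_role(item, "audio")]
```

A consumer of such a feed could use a lookup like this to offer a language picker for audio or subtitles without treating each track as a separate rendition.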

The changes have been added to all three versions of ninjs in accordance with the conventions of each version.

- https://www.iptc.org/std/ninjs/ninjs-schema_3.2.json

- https://www.iptc.org/std/ninjs/ninjs-schema_2.3.json

- https://www.iptc.org/std/ninjs/ninjs-schema_1.7.json

The ninjs User Guide and ninjs Generator tool have also been updated for the new versions.

For any comments or suggestions for new properties that we should add to future versions of ninjs,

The IPTC Video Metadata Working Group is happy to announce that version 1.7 of its flagship standard, Video Metadata Hub, has now been released.

The new version adds four properties that allow users and tools to embed metadata about the AI system and prompt information used to generate the media, if applicable.

The new properties in detail are:

- AI System Used (text string, single-valued, optional)

  The AI engine and/or the model name used to generate this media.

  Note: For example, ChatGPT DALL-E, Google Gemini, ChatGPT

- AI System Version Used (text string, single-valued, optional)

  The version of the AI system used to generate this media, if known.

- AI Prompt Information (text string, single-valued, optional)

  The information that was given to the generative AI service as “prompt(s)” in order to generate this media.

  Note: This may include negative [excludes] and positive [includes] statements in the prompt.

- AI Prompt Writer Name (text string, single-valued, optional)

  Name of the person who wrote the prompt used for generating this media.

  Note: This person should not be considered as the media creator.

Please note that users should not expect that the contents of the “AI Prompt Information” property could be given directly to an AI system to generate the same video; there are many reasons why generated media files differ even for the same input information. The Working Group decided to add “AI Prompt Information” as a generic property that creators could use to describe the process that was used to prompt an AI system to create the content.
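To make the "text string, single-valued, optional" shape of the four properties concrete, here is a minimal Python sketch of a record that holds them. The class name `AIGenerationInfo`, the snake_case field names and the `to_record` helper are hypothetical choices made for illustration. They are not the identifiers used in the Video Metadata Hub JSON Schema or in any embedding format.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class AIGenerationInfo:
    """Hypothetical container for the four AI-related properties.

    Each field is a single optional text string, mirroring the
    datatype and cardinality given in the property list.
    """

    ai_system_used: Optional[str] = None
    ai_system_version_used: Optional[str] = None
    ai_prompt_information: Optional[str] = None
    ai_prompt_writer_name: Optional[str] = None

    def to_record(self) -> dict:
        """Return only the properties that were set, since all four are optional."""
        return {key: value for key, value in asdict(self).items() if value is not None}


# Example: only two of the four properties are known for this video.
info = AIGenerationInfo(
    ai_system_used="Google Gemini",
    ai_prompt_writer_name="Jane Example",  # placeholder name
)
record = info.to_record()
```

Omitting unset fields, rather than writing empty strings, keeps a reader from mistaking "not recorded" for "recorded as blank".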

The official IPTC Video Metadata Hub recommendation files and user documentation have all been updated for the new version:

- IPTC Video Metadata Hub 1.7 properties table

- IPTC Video Metadata Hub 1.7 full mappings table

- IPTC Video Metadata Hub 1.7 user guide

- IPTC Video Metadata Hub generator – A tool that can help developers to understand how Video Metadata Hub works and to generate example files

- IPTC Video Metadata Hub 1.7 JSON Schema

The Video Metadata Working Group welcomes feedback. Please post to the IPTC Video Metadata public discussion group or use the IPTC Contact Us form.
