Last week saw the 60th Annual General Meeting of IPTC, held as part of the IPTC Autumn Meeting 2025. The event was held online from Tuesday 14th to Thursday 16th October.

As one could imagine, AI was the hot topic, being mentioned in almost every presentation.

Highlights included:

- Hearing the latest on the TEMS Media Dataspace project, including an overview of the data model, which is of interest to IPTC members due to its mappings to many IPTC standards;

- Hearing the latest from the IPTC Media Provenance Committee and its three working groups, with updates on the Verified News Publisher List and the recent Media Provenance Summits;

- A presentation on “vibe coding” and AI-assisted development, including its use in updating many of IPTC’s tools and services;

- Discussions on a possible new publisher metadata best practice, an update on our AI preferences work and the IETF AI Preferences Working Group;

- We also heard from StoryGo, Copyright Exchange, Global Media Identifier, Time Addressable Media Store, and the IBC Accelerator “Stamping Your Content” project that focused on bringing C2PA metadata to broadcast video content, with many IPTC member organisations as participants.

At the Standards Committee meeting, members voted to approve three new IPTC standard versions: Video Metadata Hub 1.7, Photo Metadata 2025.1, and ninjs version updates. The Photo and Video Metadata updates add new properties for the AI prompts and AI models used to create synthetic content. The ninjs updates add “resources” to renditions, to cover, for example, multiple audio tracks or a subtitle feed as part of a live video stream. These updates will be announced separately when they are published.

At the 2025 Annual General Meeting, the Board was re-elected by all Voting Members. Members heard updates from IPTC Managing Director Brendan Quinn and the Chair of IPTC’s Board of Directors, Robert Schmidt-Nia. The 2026 budget was approved and a change to IPTC’s Articles of Association was voted through.

Thanks very much to all who participated and presented their work, and to all IPTC Working Group and Committee members who contributed to the event.

We’re already looking forward to the IPTC Spring Meeting 2026, which will be held in Toronto, Canada, hosted by Thomson Reuters.

The NewsCodes Working Group is pleased to release the Q3 2025 update to our NewsCodes vocabularies.

MediaTopics updates

As usual most of the updates are to the Media Topic vocabulary.

New concepts (3)

Retired concepts (1)

- water –

Label changes (3)

- hazardous materials -> hazardous material

- waste materials -> waste material

- pests -> pest and pest control

Definition changes (16)

- environment

- environmental pollution

- air pollution

- environmental clean-up

- hazardous material

- water pollution

- nature

- ecosystem

- endangered species

- invasive species

- animal disease

- plant disease

- animal

- flowers and plants

- pest and pest control

- sustainability

Modified notes (2)

- animal disease

- pest and pest control

No concepts were retired, moved in the hierarchy or given modified Wikidata mappings this time.

Translation updates

Many terms and definitions in the German translation were updated to reflect recent changes in the English versions.

Norwegian and Swedish terms were updated to reflect recent changes in the English versions.

2025-Q2 updates recap

It seems that we didn’t post a news item about the 2025-Q2 changes to Media Topics. Here’s a summary:

- New term vegetarianism and veganism

- climate change – definition changed

- global warming retired. Use climate change instead.

- conservation – definition changed

- energy saving – retired. Use medtop:20001374 sustainability instead.

- parks -> nature preserve

- New Term: park and playground (Child of leisure venue medtop:20000553)

- natural resources -> natural resource

- energy resources retired. Use the relevant term from the business branch instead.

- land resources retired.

- forests -> forest. Moved to be child of nature.

- mountains -> mountain. Moved to be child of nature.

- population growth – retired. Use “population and census” instead.

- oceans -> ocean. Moved to be child of nature.

- rivers -> river. Moved to be child of nature.

- wetlands -> wetland. Moved to be child of nature.

- mankind -> demographic group.

Trust Indicator updates

The Trust Indicator vocabulary had one hierarchy change: factCheckingPolicy was made a child of editorialPolicy.

Also, the notes on terms have been updated to give credit to The Trust Project for their work and to indicate that the term “Trust Indicators®” is now a registered trademark of The Trust Project.

Working with IPTC NewsCodes

See the official Media Topic vocabulary on the IPTC Controlled Vocabulary server, and an easier-to-navigate tree view. An Excel version of IPTC Media Topics is also available. Other NewsCodes are available via the CV Server.

See the IPTC NewsCodes Guidelines document for information on our vocabularies and how you can use them in your projects.

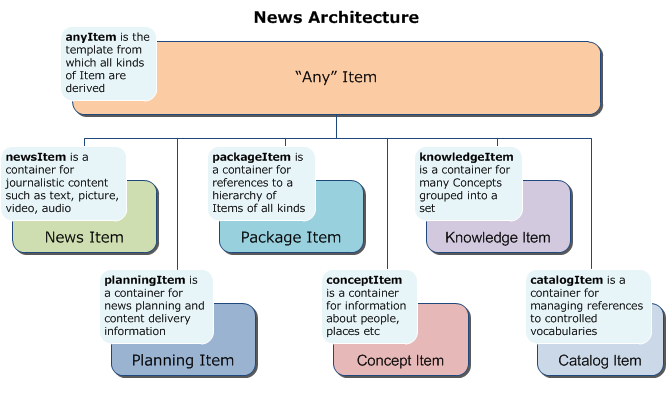

IPTC’s Python library for creating, manipulating and managing NewsML-G2 documents, python-newsmlg2, has reached version 1.0.

The earliest versions of the library were created back in 2021, but the code has seen significant changes since then and we are happy to endorse the latest version as a production-ready 1.0 release.

Created as a free, open-source library that can be integrated into any Python code, the library supports all parts of the NewsML-G2 specification:

- multi-media news stories (NewsItem)

- packages of news content (PackageItem)

- planned news coverage and information about upcoming and past events (PlanningItem and EventsML-G2)

- news content classification concepts and sets of concepts (knowledge graphs) (ConceptItem, KnowledgeItem and CatalogItem)

- syndicated news content transactions (NewsMessage)

The 1.0 version has 98% unit test coverage, which can give users confidence that future changes will not introduce regression bugs.

The code can also handle non-NewsML-G2 content embedded within NewsML-G2 files using XML Schema’s “xs:any” construct. This is a feature of NewsML-G2 that allows any type of markup, such as but not limited to XHTML, NITF or RightsML, to be carried as the payload in a NewsML-G2 NewsItem. The 1.0 version adds “round-trip” support of all xs:any constructs allowing additional markup to be captured, retained and output verbatim, without any loss of fidelity.

The library’s documentation also gives examples of how the library can be used to create, process, manipulate and output NewsML-G2 documents.

The code offers some “helper functions” that make working with NewsML-G2 easier, such as:

- Automatic resolution between QCodes and URIs, two equivalent formats for controlled vocabulary terms, that can now be used interchangeably. The code uses NewsML-G2 Catalogs to look up QCode prefixes and resolve them to URI format.

- Automatic handling of repeatable items and traversal of the NewsML-G2 element structure to provide easy access to child elements, such as “digsrctype = newsitem.contentmeta.digitalsourcetype.uri”
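The QCode and URI equivalence described in the list above can be illustrated with a minimal sketch. It does not use python-newsmlg2 or reproduce its API: the `CATALOG` dictionary and the two function names are hypothetical stand-ins for a NewsML-G2 Catalog lookup, and the prefix-to-URI mappings are assumed here for illustration only.

```python
# Hypothetical stand-in for a NewsML-G2 Catalog: QCode prefix -> URI base.
# These mappings are assumptions for illustration, not read from a real catalog.
CATALOG = {
    "medtop": "http://cv.iptc.org/newscodes/mediatopic/",
    "digsrctype": "http://cv.iptc.org/newscodes/digitalsourcetype/",
}


def qcode_to_uri(qcode, catalog=CATALOG):
    """Expand a QCode such as 'medtop:20001374' into its full URI form."""
    prefix, sep, code = qcode.partition(":")
    if not sep or prefix not in catalog:
        raise ValueError(f"Cannot resolve QCode: {qcode!r}")
    return catalog[prefix] + code


def uri_to_qcode(uri, catalog=CATALOG):
    """Shorten a full concept URI back into QCode form, if a prefix matches."""
    for prefix, base in catalog.items():
        if uri.startswith(base):
            return f"{prefix}:{uri[len(base):]}"
    raise ValueError(f"No catalog prefix matches URI: {uri!r}")


if __name__ == "__main__":
    uri = qcode_to_uri("medtop:20001374")
    print(uri)
    print(uri_to_qcode(uri))
```

Because the two forms are equivalent, converting in one direction and then back should return the original value, which is the property that lets them be used interchangeably.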

The library can be installed by any Python user using PyPI: pip install newsmlg2.

The source code of the library is freely available, licensed under the open-source MIT licence, at https://github.com/iptc/python-newsmlg2.

Feedback on the library is very welcome. Please let us know what you think on the IPTC Contact Us page or the public NewsML-G2 discussion list.

Software industry legend Tim Bray gave a resounding call to IPTC and to others working on media provenance and C2PA: his verdict was that while the specification and its implementation had issues, they were slowly being resolved and he lauded the project’s goal of, in Tim’s words, “making it harder for liars to lie and easier for truth tellers to be believed”.

The 2025 Photo Metadata Conference, held on September 18th, was a great success, with 280 registered attendees from hundreds of organisations around the world. Video recordings from the event are now available.

Speakers included:

- David Riecks, lead of the IPTC Photo Metadata Working Group, describing some new IPTC Photo Metadata properties concerning Generative AI models and prompts that will be proposed for a vote at the next Standards Committee meeting to be held at the IPTC Autumn Meeting.

- Brendan Quinn, Managing Director of IPTC, gave an update on the IPTC’s guidelines for opting out of Generative AI training and ongoing work to standardise AI training preferences at the Internet Engineering Task Force (IETF)

- Ottar A. B. Anderson, Head of Photography at SEDAK, the GLAM imaging service of Møre og Romsdal County in Norway, spoke about Metadata for Image Quality in Galleries, Libraries, Archives and Museums (GLAM) and his work on the Digital Object Authenticity Working Group.

- David Riecks gave an update on the IPTC Photo Metadata Panel in Adobe Custom Metadata.

- Jerry Lai, Senior Director of Content at Imagn Images, presented on AI caption tagging for the Super Bowl. Jerry’s slides are available to download.

- Paul Reinitz, previously with Getty Images and now a consultant on business and legal issues around copyright, spoke about recent developments in the area including updates in the US, EU and China

- Brendan Quinn spoke again to give an update on the IPTC’s work with C2PA and the world of Media Provenance, including our work on the Verified News Publishers List

- Tim Bray, creator of tech standards like XML and Atom and companies like OpenText, spoke about his experiences with C2PA.

- Marcos Armstrong of CBC / Radio Canada spoke about his work on mapping image publishing workflows at CBC.

Feedback from the event was almost universally positive:

- “While I knew I wouldn’t understand all of the terms, I was so impressed the amount of topics that were touched upon. I had no problem following along. I loved the passion and the openess to different perspectives”

- “Great topic choices- perfect level of beginner/more advanced content presentation.”

- “It was a good critical look at the pluses and minuses of various decisions being made, ultimately pointing to developing public trust about authorship.”

- “Informative, I really liked the expertise all the speaker brought to the virtual table”

- “Learning about strategies to protect from and tools for blocking AI, as well as metadata fields to record AI use”

- “Informative, good presentations and presenters. Very relevant to today – AI.”

- “Focus on Content Credentials and AI. Range of speaker roles provided different perspectives on the topic area. Excellent organization, presentation quality and management of the zoom space.”

- “Three things in particular stood out. Tim Bray’s talk was great as it brought everything to my world as a photographer and is pretty much what I’ve found. Brendan Quinn’s opt out information was definitely worth knowing and now I’m going to look at it. Finally, David Riecks talk about Adobe’s Metadata Panel gave me more insight into it and if it should be included in my workflow but his information for the proposed new properties for Generative AI was very good to hear.”

Thanks to everyone who attended and to our speakers David, Brendan, Paul, Ottar, Tim and Marcos.

Special thanks to David and the IPTC Photo Metadata Working Group for organising the event.

We look forward to seeing even more attendees next year!

To be sure of being notified about next year’s event, subscribe to the (very low volume) “Friends of IPTC Newsletter”.

The Media Provenance Summit brought together leading experts, journalists and technologists from across the globe to Mount Fløyen in Bergen, Norway, to address some of the most pressing challenges facing news media today.

Hosted by Media Cluster Norway, and organised together with the BBC, the EBU and IPTC, the full-day summit on September 23 convened participants from major news organisations, technology providers and international standards bodies to advance the implementation of the C2PA content provenance standard, also known as Content Credentials, in real-world newsroom workflows. The ultimate aim is to strengthen the signal of authentic news media content at a time when it is challenged by generative AI.

“We need to work together to tackle the big problems that the news media industry is facing, and we are very grateful for everyone who came together here in Bergen to work on solutions. I believe we made important progress,” said Helge O. Svela, CEO of Media Cluster Norway.

The program focused on three critical questions:

- How to preserve C2PA information throughout editorial workflows when not all tools yet support the technology.

- When to sign content as it moves through the workflow: at device level, organisational level, or both.

- How to handle confidentiality and privacy issues, including the protection of sources and sensitive material.

“We were very happy to see a focus on real solutions, with some great ideas and tangible next steps,” said IPTC’s Managing Director, Brendan Quinn. “With participants from across the media ecosystem, it was exciting to see vendors, publishers, broadcasters and service providers working together to address issues in practically applying C2PA to media workflows in today’s newsrooms.”

Speakers included Charlie Halford (BBC), Andy Parsons (CAI/Adobe), François-Xavier Marit (AFP), Kenneth Warmuth (WDR), Lucille Verbaere (EBU), Marcos Armstrong and Sébastien Testeau (CBC/Radio-Canada), and Mohamed Badr Taddist (EBU).

“The BBC welcomes this focus on protecting access to trustworthy news. We are proud to have been founder members of the media provenance work carried out under the auspices of C2PA and we are delighted to see it moving forward with such strong industry support,” said Laura Ellis, Head of Technology Forecasting at BBC Research.

Participants travelled to participate in the summit from as far away as Japan, Australia, the US and Canada.

“We’re pleased to collectively have taken a few hurdles on the way to enabling a broader adoption of Content Provenance and Authenticity”, said Hans Hoffmann, Deputy Director at EBU Technology and Innovation Department. “The definition of common practices for signing content in workflows, retrieving provenance information thanks to soft binding, and better safeguards for the privacy of sources address important challenges. Public service media are committed to fight disinformation and improve transparency, and EBU members were well represented in Bergen. The broad participation from across the industry and globe smooths the path towards adoption. Thanks to Media Cluster Norway for hosting the event!”

The summit emphasised moving from problem analysis to solution exploration. Through structured sessions, participants defined key blockers, sketched practical solutions and developed action plans aimed at strengthening trust in digital media worldwide.

About the Summit

The Media Provenance Summit was organised jointly by Media Cluster Norway, the EBU, the BBC and IPTC, and made possible with the support of Agenda Vestlandet.

For more information, please contact: helge@medieklyngen.no

The IPTC has joined the BBC (UK), YLE (Finland), RTÉ (Ireland), ITV (UK), ITN (UK), EBU (Europe), AP (USA/Global), Comcast (USA/Global), ASBU (Africa and Middle East), Channel 4 (UK) and the IET (UK) as a “champion” in the Stamping Your Content project, run by the IBC Accelerator as part of this year’s IBC Conference in Amsterdam.

These “Champions” represent the content creator side of the equation. The project also includes “participants” from the vendor and integrator community: CastLabs, TCS, Videntifier, Media Cluster Norway, Open Origins, Sony, Google Cloud and Trufo.

This project aims to develop open-source tools that enable organisations to integrate Content Credentials (C2PA) into their workflows, allowing them to sign and verify media provenance. As interest in authenticating digital content grows, broadcasters and news organisations require practical solutions to assert source integrity and publisher credibility. However, implementing Content Credentials remains complex, creating barriers to adoption. This project seeks to lower the entry threshold, making it easier for organisations to embed provenance metadata at the point of publication and verify credentials on digital platforms.

The initiative has created a proof-of-concept open source ‘stamping’ tool that links to a company’s authorisation certificate, inserting C2PA metadata into video content at the time of publishing. Additionally, a complementary open-source plug-in is being developed to decode and verify these credentials, ensuring compliance with C2PA standards. By providing these tools, the project enables media organisations to assert content authenticity, helping to combat misinformation and reinforce trust in digital media.

This work builds upon the “Designing Your Weapons in the Fight Against Disinformation” initiative at last year’s IBC Accelerator, which mapped the landscape of digital misinformation. The current phase focuses on practical implementation, ensuring that organisations can start integrating authentication measures in real-world workflows. By fostering an open and standardised approach, the project supports the broader media ecosystem in adopting content provenance solutions that enhance transparency and trustworthiness.

Attend the project’s panel presentation session at the International Broadcasting Convention, IBC2025 in Amsterdam on Monday, Sept 15 at 09:45 – 10:45.

The speakers on the panel on Monday September 15 are all from IPTC member organisations:

- Henrik Cox, Solutions Architect – OpenOrigins

- Judy Parnall, Principal Technologist, BBC Research & Development – BBC

- Mohamed Badr Taddist, Cybersecurity Master graduate, content provenance and authenticity – European Broadcasting Union (EBU)

- Tim Forrest, Head of Content Distribution and Commercial Innovation – ITN

See more detail on the IBC Show site.

Many of the participating organisations are also IPTC members, so the work started in the project will continue after IBC through the IPTC Media Provenance Committee and its Working Groups.

We are already planning to carry this work forward at the next Media Provenance Summit which will be held later in September in Bergen, Norway.

The IPTC is pleased to announce the full agenda for the 2025 IPTC Photo Metadata Conference, which will be held online on Thursday September 18th from 15.00 to 18.00 UTC. The focus this year is on how image metadata can improve real-world workflows.

We are excited to be joined by the following speakers:

- Brendan Quinn, IPTC Managing Director, presenting two sessions: IPTC’s AI Opt-Out Best Practices guidelines, and an update on IPTC’s work with C2PA and the Media Provenance Committee

- David Riecks, Lead of the IPTC Photo Metadata Working Group, presenting two sessions: the latest on IPTC’s proposed new properties for Generative AI, and also an update on the Adobe Custom Metadata Panel plugin and how it makes the complete IPTC Photo Metadata Standard available in Adobe products

- Paul Reinitz, consultant previously with Getty Images, discussing AI opt-out and copyright issues

- Ottar A. B. Anderson, previously a photographer with the Royal Norwegian Air Force and with over 15 years of experience as a commercial photographer, on proposals for metadata for image archiving and his work on the Digital Object Authenticity Working Group (DOAWG)

- Jerry Lai, previously a photographer for Getty Images, Reuters and Associated Press and now with Imagn, presenting a case study on using AI for captioning huge numbers of images for Super Bowl LIX

- Marcos Armstrong, Senior Specialist, Content Provenance at CBC/Radio-Canada, speaking about CBC’s project to map editorial workflows and identify where content authenticity technologies can be used in the newsroom

- Tim Bray, creator of XML and founder of OpenText Corporation, among many others, speaking on his experiences with C2PA and his ideas for how it can be adopted in the future

This year’s conference promises to be a great one, with topics ranging from Generative AI and media provenance technology to the technical details of scanning historical documents, but always with a focus on how new technologies can be applied in the real world.

Registration is free and open to anyone.

See more information at the event page on the IPTC web site or simply sign up at the Zoom webinar page.

We look forward to seeing you there!

Google has announced the launch of its latest phone in the Pixel series, including support for IPTC Digital Source Type in its industry-leading C2PA implementation.

Many existing C2PA implementations focus on signalling AI-generated content, adding the IPTC Digital Source Type of “Generated by AI” to content that has been created by a trained model.

Google’s implementation in the new Pixel 10 phone differs by adding a Digital Source Type to every image created using the phone, using the “computational capture” Digital Source Type to denote photos taken by the phone’s camera. In addition, images edited using the phone’s AI manipulation tools show the “Edited using Generative AI” value in the Digital Source Type field.

Note that the Digital Source Type information is added using the “C2PA Actions” assertion in the C2PA manifest; unfortunately it is not yet added to the regular IPTC metadata section in the XMP metadata packet. So it can only be read by C2PA-compatible tools.
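As a rough illustration of what reading this value involves, the sketch below walks a C2PA manifest that has already been decoded to a Python dictionary and collects any Digital Source Type values found in its actions assertions. The dictionary layout, the `SAMPLE_MANIFEST` content and the function name are assumptions modelled on the JSON that C2PA tools typically report. They are not taken from Google’s implementation, and a real file would first need to be read and validated with a C2PA-compatible tool.

```python
# Base of the IPTC Digital Source Type vocabulary URIs.
IPTC_DST_BASE = "http://cv.iptc.org/newscodes/digitalsourcetype/"

# Hypothetical, simplified manifest as it might look after decoding to JSON.
SAMPLE_MANIFEST = {
    "assertions": [
        {
            "label": "c2pa.actions",
            "data": {
                "actions": [
                    {
                        "action": "c2pa.created",
                        "digitalSourceType": IPTC_DST_BASE + "computationalCapture",
                    }
                ]
            },
        }
    ]
}


def digital_source_types(manifest):
    """Return every digitalSourceType found in the manifest's actions assertions."""
    found = []
    for assertion in manifest.get("assertions", []):
        # Matches "c2pa.actions" as well as versioned labels such as "c2pa.actions.v2".
        if not assertion.get("label", "").startswith("c2pa.actions"):
            continue
        for action in assertion.get("data", {}).get("actions", []):
            value = action.get("digitalSourceType")
            if value:
                found.append(value)
    return found


if __name__ == "__main__":
    for uri in digital_source_types(SAMPLE_MANIFEST):
        # Print the short term name, e.g. "computationalCapture".
        print(uri.removeprefix(IPTC_DST_BASE))
```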

Background: what is “Computational Capture”?

The IPTC added Computational Capture as a new term in the Digital Source Type vocabulary in September 2024. It represents a “digital capture” that does involve some extra work using an algorithm, as opposed to simply recording the encoded sample hitting the phone sensor, as with simple digital cameras.

For example, a modern smartphone doesn’t simply take one photo when you press the shutter button. Usually the phone captures several images from the phone sensor using different exposure levels and then an algorithm merges them together to create a visually improved image.

This of course is very different from a photo that was created by AI or even one that was edited by AI at a human’s instruction, so we wanted to be able to capture this use case. Therefore we introduced the term “computational capture”.

For more information and examples, see the Digital Source Type guidance in the IPTC Photo Metadata User Guide.

The IPTC Photo Metadata Working Group is proposing a draft set of properties for recording details of images created using generative AI systems. The group presents a draft of these fields for your comments and feedback. After comments are reviewed the group intends to add new properties to a new version of the IPTC Photo Metadata Standard which would be released later in 2025.

Use Cases

The proposals detailed here are intended to address these scenarios, among others:

- How do you know which system/model generated this image? For instance, if you wanted to compare how different systems—or versions of systems—interpret a given prompt, where would you look?

- How can you know what prompt text was entered, or image shared as a starting point? If you want to recreate similar images in the future with the same look, where should that info be stored?

- How can you tell who was involved in the creation of a generative AI image?

Example Scenario

You are the new designer for an organisation, and need to create an image for a monthly column. You are told to use generative AI, but your boss wants the end result to have the same “look and feel” as images used previously in the column. If you needed to find those that were published previously—what information would be most useful in locating and retrieving the image(s) in your organization’s image collection?

Proposed Properties:

- AI Model

Name: AI Model

Definition: The foundational model name and version used to generate this image.

User Note: For example “DALL-E 2”, “Google Gemini 1.5 Pro”

Basic Specs: Data type: Text / Cardinality: 0..1

- AI Text Prompt Description

Name: AI Text Prompt Description

Definition: The information that was given to the generative AI service as “prompt(s)” in order to generate this image.

User Note: This may include negative [excludes] and positive [includes] statements in the prompt.

Basic Specs: Data type: Text / Cardinality: 0..1

- AI Prompt Writer Name

Name: AI Prompt Writer Name

Definition: Name of the person who wrote the prompt used for generating this image.

Basic Specs: Data type: Text / Cardinality: 0..1

- Reference Image(s)

Name: Reference Image

Definition: Image(s) used as a starting point to be refined by the generative AI system (sometimes referred to as “base image”).

Basic Specs: Data type: URI / Cardinality: 0..unbounded

All of these properties are of course optional, not required, but we would recommend that AI engines fill in the properties whenever possible.
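To make the data types and cardinalities concrete, here is one way an application might model the four draft properties internally. This is only a sketch: the class and attribute names are hypothetical, they are not the identifiers that the standard will define, and no serialisation to XMP or any other format is attempted.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class GenAIImageProperties:
    """Hypothetical in-memory record of the draft generative AI properties."""

    # Text, cardinality 0..1: optional single values.
    ai_model: Optional[str] = None
    ai_text_prompt_description: Optional[str] = None
    ai_prompt_writer_name: Optional[str] = None
    # URI, cardinality 0..unbounded: zero or more reference image URIs.
    reference_images: List[str] = field(default_factory=list)

    def filled(self):
        """Return only the properties that actually carry a value."""
        values = {
            "ai_model": self.ai_model,
            "ai_text_prompt_description": self.ai_text_prompt_description,
            "ai_prompt_writer_name": self.ai_prompt_writer_name,
            "reference_images": self.reference_images,
        }
        return {name: value for name, value in values.items() if value}


if __name__ == "__main__":
    record = GenAIImageProperties(
        ai_model="DALL-E 2",
        reference_images=["https://example.com/base-image.jpg"],
    )
    print(record.filled())
```

Since every property is optional, an empty record is valid, and `filled()` simply returns an empty dictionary in that case.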

Request for Comment

The intent is for a new standard version including these fields to be proposed at the IPTC Autumn Meeting 2025 in October to be voted on by IPTC member organisations. If approved by members, the new version would be published in November 2025.

Please send your comments or suggestions for improvements using the IPTC Contact Us form or via a post to the public iptc-photometadata@groups.io discussion list by Friday 29th August 2025.

The IPTC participated in a “design team” workshop for the Internet Engineering Task Force (IETF)’s AI Preferences Working Group. Brendan Quinn, IPTC Managing Director, attended the workshop in London along with representatives from Mozilla, Google, Microsoft, Cloudflare, Anthropic, Meta, Adobe, Common Crawl and more.

As per the group’s charter, “The AI Preferences Working Group will standardize building blocks that allow for the expression of preferences about how content is collected and processed for Artificial Intelligence (AI) model development, deployment, and use.” The intent is that this will take the form of an extension to the commonly-used Robots Exclusion Protocol (RFC 9309), the document that defines the way that web crawlers should interact with websites.

The idea is that the Robots Exclusion Protocol would specify how website owners would like content to be collected, and the AI Preferences specification defines the statements that rights-holders can use to express how they would like their content to be used.

The Design Team is discussing and iterating the group’s draft documents: the Vocabulary for Expressing AI Usage Preferences and the “attachment” definition document, Indicating Preferences Regarding Content Usage. The results of the discussions will be taken to the IETF plenary meeting in Madrid next week, and

Discussions have been wide-ranging. They include use cases for varying options of opt-in and opt-out; the ability to opt out of generative AI training while still allowing search engine indexing; the difference between preferences for training and preferences for how content can be used at inference time (also known as prompt time or query time, such as RAG or “grounding” use cases); and the varying mechanisms for attaching these preferences to content, i.e. a website’s robots.txt file, HTTP headers and embedded metadata.
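For context, the sketch below shows how the existing Robots Exclusion Protocol can already express “block one crawler, allow the rest”, using Python’s standard `urllib.robotparser` module. It illustrates only today’s per-crawler rules, not the draft AI Preferences vocabulary, and the crawler names `ExampleAIBot` and `ExampleSearchBot` and the URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt: block one (made-up) AI crawler, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def build_parser(robots_txt):
    """Parse robots.txt content held in a string, without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    parser = build_parser(ROBOTS_TXT)
    story = "https://example.com/news/story.html"
    for agent in ("ExampleAIBot", "ExampleSearchBot"):
        verdict = "allowed" if parser.can_fetch(agent, story) else "disallowed"
        print(f"{agent}: {verdict}")
```

Rules like these say which crawler may fetch a page, not what the fetched content may then be used for. That gap is what the working group’s usage-preference statements are intended to address.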

The IPTC has already been looking at this area and defined a data mining usage vocabulary in conjunction with the PLUS Coalition in 2023. There is a possibility that our work will change to reflect the IETF-agreed vocabulary.

The work also relates to IPTC’s recently-published guidance for publishers on opting out of Generative AI training. Hopefully we will be able to publish a much simpler version of this guidance in the future because of the work from the IETF.
